Master of Data Science (Global) Program - Deakin University, Australia

Deakin University in Australia offers its students a top-notch education, outstanding employment opportunities, and a superb university experience. Deakin is ranked among the top 1% of universities globally (ShanghaiRankings) and is one of the top 50 young universities worldwide.

The curriculum designed by Deakin University strongly emphasises practical, project-based learning and is shaped by industry demands so that its degrees remain relevant both today and in the future.

The University provides a vibrant atmosphere for teaching, learning, and research. To ensure that its students are trained and prepared for the occupations of tomorrow, Deakin has invested in the newest technology, cutting-edge instructional resources, and facilities. All students get access to the online learning environment, whether they are enrolled on campus or study entirely online.

Role of UT Austin and Great Lakes in this Master of Data Science, Deakin University

The University of Texas at Austin’s (UT Austin) McCombs School of Business and Great Lakes Executive Learning have collaborated to develop the following course:

- Data Science and Business Analytics

Students take the course listed above in the 1st year of this Data Science Master’s Degree (Global) Program. It familiarises them with the cutting-edge fields needed to derive business insights, support decisions, and gain a competitive advantage in the modern business world.

In the 2nd year, students will continue their learning journey with Deakin University’s 12-month online Master of Data Science (Global) Program, where they will gain exposure and insights into advanced Data Science skills to prepare for the jobs of tomorrow.

Why pursue the Data Science Master's Program at Deakin University, Australia?

The Master's Degree from Deakin University offers a flexible learning schedule that lets working professionals meet their upskilling goals. That is not the only benefit. You can also:

- Earn a Global Master's Degree from an internationally recognised university, Deakin University, along with Post Graduate Certificates from two well-established institutions, UT Austin and Great Lakes.

- Learn Data Science and Business Analytics from reputed faculty through live, interactive online sessions.

- Develop your skills with a curriculum created by eminent academicians and industry professionals.

- Gain practical knowledge and skills by engaging in project-based learning.

- Become market-ready with mentorship sessions from industry experts.

- Learn with a diverse group of peers and professionals for a rich learning experience.

- Secure a Global Data Science Master’s Degree at one-tenth the cost of a traditional 2-year Master’s program.

Benefits of Deakin University Master’s Course of Data Science (Global)

This online Data Science degree program offers several benefits, including:

- PROGRAM STRUCTURE

Deakin University's 12+12-month Master of Data Science (Global) Program has a modular structure that separates the curriculum into basic and advanced competency tracks, allowing students to master advanced Data Science skills alongside Business Analytics.

- INDUSTRY EXPOSURE

Through industry workshops and competency classes led by professionals and faculty at Deakin University, UT Austin and Great Lakes, candidates gain exposure and insights from world-class industry experts.

- WORLD-CLASS FACULTY

The faculty members of Deakin University, UT Austin and Great Lakes bring years of expertise in both academia and industry, which helps them teach the skills the current market demands.

- CAREER ENHANCEMENT SUPPORT

The program provides career development support, including workshops and mentorship sessions, to help students identify their strengths and career paths and pick the appropriate competencies to study. Students also have access to GL Excelerate, a carefully curated employment platform from Great Learning, through which they can apply for suitable opportunities.

The Great Learning Advantage in Deakin University’s Master of Data Science Course

Great Learning is India’s leading and reputed ed-tech platform and a part of BYJU’s group, providing industry-relevant programs for professional learning and higher education. This course gives you access to GL Excelerate, Great Learning's comprehensive network of industry experts and committed career support.

- E-PORTFOLIO

An e-portfolio showcases the skills and knowledge gained throughout the course and can be shared on social media. It helps potential employers recognise your skills.

- CAREER SUPPORT

The course provides students with career support, empowering them to advance their professions.

- ACCESS TO CURATED JOB BOARD

Students gain access to a list of curated job opportunities that match their qualifications and industry. Great Learning has partnered with 12,000+ organisations through the job board, and these organisations have shared job opportunities with an average salary hike of 50%.

- RESUME BUILDING AND INTERVIEW PREPARATION

Students receive help creating a resume that highlights their abilities and prior work experience. Through interview preparation workshops, they also learn how to ace interviews.

- CAREER MENTORSHIP

Get access to personalised career mentorship sessions with highly skilled industry experts, and use their guidance to build a rewarding career in your chosen industry.

Eligibility for Master of Data Science (Global) Program

The following are the requirements for program eligibility:

- Interested candidates must hold a bachelor's degree (minimum 3-year program) in a related field or a bachelor's degree in any discipline with at least 2 years of professional work experience.

- Candidates must meet Deakin University’s minimum English language requirement.

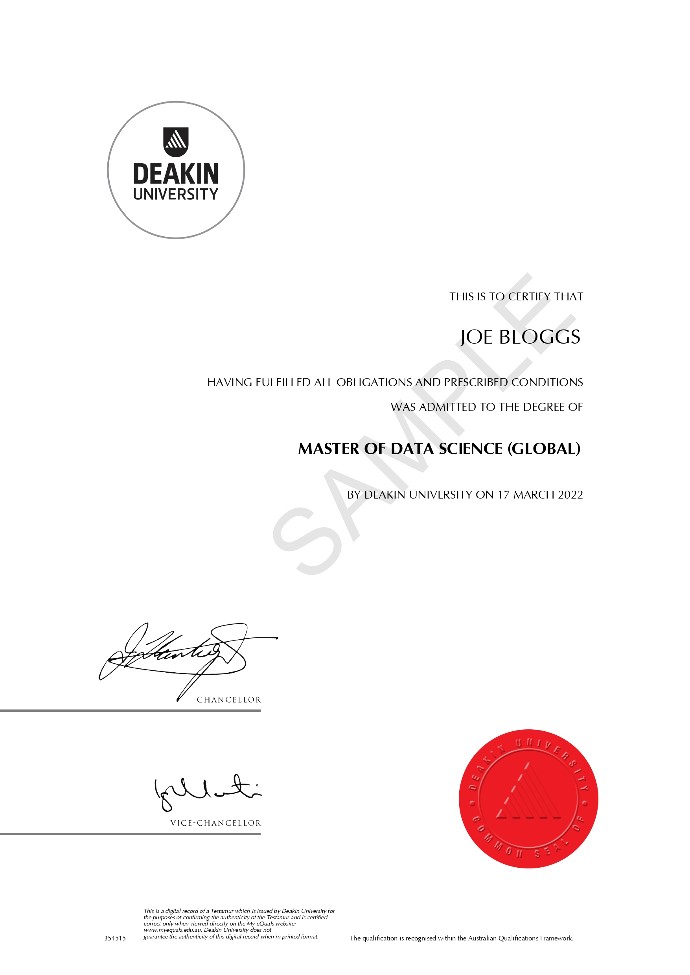

Secure a Master of Data Science (Global) Degree, along with PGP-DSBA Certificates

Students will be awarded three certificates from the world’s leading and reputed institutes:

PGP-DSBA and Master of Data Science (Global)

- Post Graduate Certificate in Data Science and Business Analytics - The University of Texas at Austin

- Post Graduate Certificate in Data Science and Business Analytics - Great Lakes Executive Learning

- Master of Data Science Degree (Global) from Deakin University, Australia