
Designed and delivered by IIT Bombay CSE faculty

Earn IIT Bombay credits which can be saved in the Academic Bank of Credits (ABC)

Campus Immersion at IIT Bombay

GATE Score not mandatory

IIT Bombay alumni status and in-person graduation ceremony at the IIT Bombay campus

Weekly live sessions from IIT Bombay CSE faculty for learning and query resolution

Online 6-course curriculum designed for both working professionals and fresh graduates

Access to IIT Bombay’s Lateral Hiring Group

Meet CSE faculty and experience the IIT Bombay campus during campus visits

Personalised assistance with a dedicated Programme Manager

Home to 47 faculty members and to top achievers among the first 50 ranks in JEE Advanced and the first 100 ranks in GATE CS

Faculty honoured with accolades such as the Padma Shri, Fellow of the ACM and IEEE, Bhatnagar Award, Infosys Prize and many others

100+ publications annually in top-tier conferences and journals, and sponsored research projects worth Rs. 50 crores

Winners of the President of India Gold Medal and Distinguished Alumni Awards, leading researchers, entrepreneurs and influential policymakers

This e-Postgraduate Diploma requires candidates to successfully complete 36 IIT Bombay credits from CSE through a mix of courses, each worth 6 credits. Nine courses are confirmed for the inaugural ePGD. They are organised into three course baskets:

A. Theory and Practice of Advanced Programming: The courses offered in this basket are The Program Developer's Toolbox; Algorithms and Complexity; Web and Software Security.

B. Computing Systems: The courses offered in this basket are Cryptography and Network Security; Database and Big Data System Internals; Introduction to Blockchains.

C. Artificial Intelligence and Machine Learning: The courses offered in this basket are Foundational Mathematics for Data Science; Natural Language Processing and Generative AI; Foundations of Computer Vision.

This e-Postgraduate Diploma in Computer Science and Engineering requires candidates to complete 6 or more courses. Of these, a candidate must pass at least one course from each of the above course baskets. The remaining courses can be from any basket. The details of the courses offered are:


A: Theory and Practice of Advanced Programming

The Program Developer’s Toolbox (CSO606)

Description:

In this course, you will be introduced to essential tools and programming environments for software development. You will learn the Unix operating system, the C/C++ programming environment, and various software management tools. The course will cover the concepts behind Python programming, its popular libraries such as NumPy and SciPy, Web programming with HTML, CSS, and JavaScript, and elements of AI programming. To solidify your understanding, you will complete a course project that demonstrates your grasp of the key concepts covered in the course.

Topics:

Algorithms and Complexity (CSO605)

Description:

In this course, you will explore foundational concepts of algorithms and computational complexity. You will learn fundamental techniques for solving computational problems, such as induction, recursion, divide and conquer, dynamic programming and greedy algorithms. You will gain a strong understanding of complexity theory by studying undecidability, polynomial-time problems, complexity classes, NP-hardness and NP-completeness.

Topics:

Web and Software Security (CSO602)

Description:

This course provides a comprehensive overview of web and software security, focusing on key concepts such as web protocols, session management and server internals. You will explore both server-side and client-side vulnerabilities, as well as software and OS security, including the fundamentals of Linux security. You will also learn to use resources and frameworks such as the OWASP Top 10 vulnerabilities, the CVE database and CVSS scoring, which are integral to understanding and mitigating cybersecurity risks.

Topics:

B: Computing Systems

Cryptography and Network Security (CSO601)

Description:

In this course, you will learn both cryptography and network security, starting with an overview of confidentiality, cryptanalysis, data integrity and cryptographic protocols. You will explore various network attacks across different layers of the protocol stack, such as eavesdropping, ARP spoofing and DHCP attacks. The course also covers secure network protocols, firewalls and intrusion detection systems, equipping you to secure and defend modern network infrastructure against potential threats.

Topics:

Database and Big Data System Internals (CSO603)

Description:

In this course, you will explore the internals of database systems, covering key concepts such as data storage, indexing, query processing, and transaction management. You will learn database system architectures, the internals of big data systems, and the challenges of parallel and distributed storage and query processing. The course will provide a strong foundation in building and managing real-world database systems through hands-on assignments with open-source databases and big data systems.

Topics:

Introduction to Blockchains (CSO607)

Description:

In this course, you will explore the motivation for, and real-world applications of, blockchain systems. You will gain an understanding of peer-to-peer and distributed systems and their core concepts, such as consensus mechanisms, Byzantine fault tolerance and impossibility results. The course also introduces the cryptographic tools essential to the functioning of blockchains. You will study Bitcoin, its Proof-of-Work consensus, and potential attacks such as double spending and selfish mining. You will examine energy efficiency in blockchains by comparing Proof-of-Stake and Proof-of-Work consensus models, and you will be introduced to layer-2 scalability solutions such as the Lightning Network and rollups. Finally, you will develop smart contracts in Solidity for Ethereum and test them on your own personal Ethereum blockchain.

Topics:

C: Artificial Intelligence and Machine Learning

Foundational Mathematics for Data Science (CSO608)

Description:

In this course, you will learn mathematical techniques that are essential in solving problems traditionally considered challenging for computational machines. You will gain a strong understanding of key methods in linear algebra, statistics, and multivariate calculus that are widely used in machine learning. By the end of the course, you will be equipped to apply these mathematical concepts to real-world tasks such as automatic face recognition with pose variations, optimising neural networks, and generating realistic images.

Topics:

Natural Language Processing and Generative AI (CSO604)

Description:

In this course, you will learn the foundational concepts of machine learning, deep learning and generative AI. The course covers Feed-Forward Neural Networks (FFNNs) and backpropagation techniques, as well as applications of word vectors in Natural Language Processing. You will explore different kinds of Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), and learn how they are built, trained and used. You will also study LSTM networks, RCNNs and encoder-decoder architectures, with a focus on the autoregression, self-attention and cross-attention mechanisms used in Large Language Models.

Topics:

Foundations of Computer Vision (CSO610)

Description:

In this course, you will learn the fundamental concepts and challenges in computer vision, starting with image formation and the camera matrix. You will study the techniques of homographies and calibration, stereo vision, image filtering, filter banks, as well as convolutional neural networks (CNNs) and visual transformers. You will also explore various applications of computer vision, such as classification, segmentation, inpainting, style transfer, motion analysis, and depth prediction.

Topics:

Curriculum review and changes are under the purview of the faculty and will be undertaken from time to time to keep the programme coverage in line with industry requirements.

The course electives cover a variety of languages and platforms, such as the following:

Python

JavaScript

CSS

C/C++

NumPy

SciPy

Django

OWASP ZAP tool

SQL

Rust

Git

Docker

Wireshark

OpenSSL Toolkit

Note: Languages and tools used are under the purview of the faculty, and they will be thoroughly reviewed from time to time to keep the curriculum coverage in line with industry requirements.

IIT Bombay Alumni Status

Upon completion, you'll have the opportunity to attend an in-person graduation ceremony at the IIT Bombay campus.

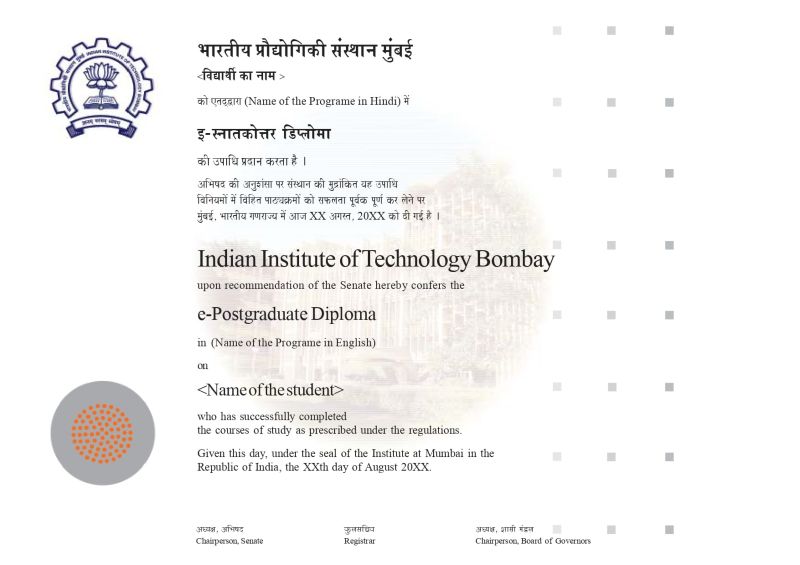

Note: Image for illustration only. Certificate subject to change.

Individual course completion certificates can be issued on request for all completed courses.

The course electives on offer enable you to:

Learn Computer Science and Engineering with a curriculum designed and delivered by IIT Bombay faculty, covering:

Natural Language Processing (NLP)

Large Language Models (LLMs) and Generative AI

Artificial Intelligence and Machine Learning

Computer Vision

Blockchains and Cryptocurrencies

Network Security

Web and Software Security

Big Data Management

*For more details on flexible fee payments, please get in touch with the Registration Team.

Multiple courses will be offered simultaneously. Total fees can be paid accordingly.

Registrations close once the required number of participants have registered for the upcoming batch. Apply early to secure your seat.

Interested candidates can apply for the e-Postgraduate Diploma by filling out a simple online application form.

Applicants must take an online test to assess their foundational knowledge and suitability for the ePGD. After passing the online test, applicants will go through a mandatory screening call with the Registration Office.

Selected candidates will receive an offer letter to join the ePGD. They will need to pay the registration fee to secure their seat and complete the registration.

The eligibility criteria for the e-Postgraduate Diploma in Computer Science and Engineering require a candidate to have at least one of the following from a recognised university:

Registrations Open

ePGD Details

The ePGD in CSE is a 12-month online e-Postgraduate Diploma (ePGD) designed and delivered by IIT Bombay CSE faculty. It is offered by the Department of Computer Science and Engineering (CSE), IIT Bombay.

This ePGD features a concept-based curriculum that focuses on areas such as advanced programming, computing systems and machine learning. Upon successful completion of all ePGD requirements, students will receive an e-Postgraduate Diploma and alumni status from IIT Bombay.

The weekly live sessions from the IIT Bombay CSE faculty will be conducted online for all courses. However, at the end of each course, the end-term exams will be conducted in person at the IIT Bombay campus or another designated location. Students will be required to visit the campus during this time and will also get an opportunity to meet the CSE faculty, network with their peers, and experience the IIT Bombay campus. On successfully completing the requirements for the ePGD, students will get an opportunity to attend an in-person graduation ceremony at the IIT Bombay campus.

An e-Postgraduate Diploma (ePGD) from IIT Bombay will be awarded to students upon successful completion of all the requirements. All examinations and evaluations related to the ePGD will be carried out by IIT Bombay.

Post-completion, an in-person graduation ceremony will be held at the IIT Bombay campus.

Earn up to 36 IIT Bombay credits through a combination of courses offered in the ePGD; these credits can be saved in the Academic Bank of Credits (ABC). To earn them, you must successfully pass the respective courses as per the evaluation methodology for each course.

Yes, this is a formal online diploma offered and evaluated by the Department of CSE, IIT Bombay. IIT Bombay manages all assessments and the e-Postgraduate Diploma.

Each term typically spans 16 weeks, with 12 weeks of classes and the rest reserved for assessments and breaks. Each week, expect to spend 6–8 hours on live sessions and another 6–8 hours on self-study and assignments.

This is a diploma offered directly by IIT Bombay, which is an Institute of National Importance. The ePGD is fully recognized under IIT Bombay’s charter. UGC/AICTE approvals are not applicable to this ePGD.

Faculty, Curriculum and Projects

The ePGD is taught by the IIT Bombay CSE faculty. These are globally respected researchers with decades of teaching and research experience. Here are the details of some of the faculty members:

Note: This is an indicative list of faculty and is under the purview of IIT Bombay.

The curriculum is structured into three core baskets, and learners are required to complete 6 or more courses from the following baskets:

Learners must pass at least one course from each basket, and the remaining courses can be from any basket.

The course electives cover a variety of languages and platforms, such as the following:

Languages and tools used are under the purview of the faculty, and they will be thoroughly reviewed from time to time to keep the curriculum coverage in line with industry requirements.

Yes, the curriculum is reviewed by IIT Bombay faculty to stay aligned with academic and technological developments. Any updates will be communicated to enrolled learners.

The curriculum is organised into three baskets: Advanced Programming, Computing Systems, and Artificial Intelligence & Machine Learning. You must complete at least one course from each basket, while the remaining courses can be chosen based on your interests. This ensures both structure and flexibility.

Career-Related Queries

Successful ePGD graduates will gain membership in a Google group dedicated to lateral hiring opportunities, managed by the IIT Bombay Placement Cell.

Job postings and opportunities are shared in this group, but individuals are responsible for connecting with organizations independently and managing the job application process. IIT Bombay will not be responsible for the outcome of the job application process.

Campus placement opportunities are NOT provided for ePGD participants.

The ePGD course electives on offer enable you to:

All of this helps you advance your career in the computer science and software domain.

No. The ePGD is designed for working professionals. Live classes are held during evenings on weekdays and over weekends, and the structure allows for flexibility with a recommended commitment of 12–16 hours per week.

Yes, upon successful completion of the ePGD, you will receive alumni status from IIT Bombay and can register with the IIT Bombay Alumni Association.

Yes. The ePGD in CSE supports peer-to-peer learning and networking through live sessions, projects, and online discussions. You will get an opportunity to interact with peers from diverse professional backgrounds. The campus visits and alumni network further expand your opportunities for collaboration and networking.

Credit Requirements

This e-Postgraduate Diploma requires candidates to successfully complete 36 IIT Bombay credits through a mix of core courses and electives. Every course will have its own set of exams and requirements, which must be fulfilled to complete the course. Upon successfully completing a course, candidates will receive a Certificate of Completion stating the number of credits earned.

Saving Credits in Academic Bank of Credits (ABC)

Candidates will need to create an APAAR ID and an account on the Academic Bank of Credits (ABC). The credits earned from individual courses will be stored in their individual ABC account.

Using Credits Stored in Academic Bank of Credits (ABC)

Credits stored in the candidate’s ABC account can be utilised only once, for any one degree or equivalent programme. Upon successful completion of all 36 IIT Bombay credits, the candidate will have the following options:

Credit Transfer to Higher Degrees

Eligibility, Fees, and Other Details

This ePGD in Computer Science and Engineering is designed for motivated, technically adept individuals who seek to deepen their conceptual understanding and formalise their knowledge through a postgraduate qualification. These include:

Candidates must hold at least one of the following from a recognised university:

(B.E. / B.Tech / BS (4-year) / M.Sc.) or higher degree in Computer Science/Engineering, Information Technology, Artificial Intelligence, Data Sciences, Mathematics and Computing, or equivalent (*) disciplines.

Or

(B.E. / B.Tech / BS (4-year)) or higher degree in any engineering discipline AND any one of the following:

Qualifying GATE score in Computer Science or Data Science

Two years of relevant work experience in Computer Science, Artificial Intelligence, or Data Sciences

A minor in Computer Science, Information Technology, AI and ML, Data Science, or equivalent (*) in programmes which offer such minors.

Or

MCA (with an undergraduate BCA or B.Sc. degree with Mathematics as a subject)

*The final decision on equivalence rests with the IIT Bombay CSE Department.

Yes. A GATE score or work experience is not mandatory if you have a relevant qualifying degree, i.e., a (B.E. / B.Tech / BS (4-year) / M.Sc.) or higher degree in Computer Science/Engineering, Information Technology, Artificial Intelligence, Data Sciences, Mathematics and Computing, or equivalent (*) disciplines.

No. Work experience is not mandatory for candidates who hold a (B.E. / B.Tech / BS (4-year) / M.Sc.) or higher degree in Computer Science/Engineering, Information Technology, Artificial Intelligence, Data Sciences, Mathematics and Computing, or equivalent (*) disciplines from a recognised university. While prior experience in relevant domains is recommended, fresh graduates who meet the eligibility criteria are welcome to apply.

To apply for the e-Postgraduate Diploma in Computer Science and Engineering, candidates need to complete the online registration form available on our website.

Yes, applicants are required to take an online test to assess foundational knowledge and suitability for the ePGD.

Yes. OCIs who have completed their higher education in India are eligible to apply, provided they can comply with all ePGD requirements, including mandatory campus visits for exams. IIT Bombay’s Educational Outreach office can provide supporting documents if required for visa processing.

Yes, candidates who hold degrees from institutions outside India can apply, provided IIT Bombay recognizes the institution. The IIT Bombay CSE Department will verify such cases before confirming eligibility.

The total fee is ₹6,00,000 + GST. This includes a registration fee of ₹30,000 + GST and six course-wise installments of ₹95,000 + GST each (to be paid before the beginning of each course, 6 courses total).

Scholarships/fee waivers will be adjusted against the course fees.

Yes, applicants are required to pay a registration fee to secure their seat and confirm their registration after they receive an offer letter to join the ePGD. Please check with the registration team for the exact details.

For flexible fee payment options, please contact the registration team. Please note that multiple courses will be offered simultaneously, and the total fee can be paid accordingly.

Candidates may withdraw from the ePGD at any time during the ePGD timeline. All withdrawal requests must be sent through official email communication to the Program Office, and the date of this email will be treated as the official date of the withdrawal request. The refund amount will vary depending on the date of the withdrawal request, the timeline of individual courses and the total fee paid by the learner.

The fee refund policy is as follows:

For Courses in Term 1:

If only the registration fee is paid: 50% refund before batch commencement. No refund if the request is received on the date of batch commencement or after classes have begun.

If both registration and course fees are paid:

For Courses After Term 1:

Please note: A ₹1,000 processing fee is deducted from any refund amount. Refund requests will be entertained as per IIT Bombay’s guidelines. Please reach out to the registration office for current refund timelines and conditions.

Other Queries

The candidates are required to complete the onboarding process before the e-Postgraduate Diploma orientation. To complete the onboarding process, candidates will need to submit the following documents/details before the beginning of the session:

Once all documents are submitted, candidates will be officially registered for the course and will be provided a unique registration number (EOEPGD/CSE/001/XY) by the Educational Outreach office. Candidates will be given a physical identity card issued by the Educational Outreach office, which can be used during their campus visit for the end-term exams. Offline verification of the documents will be done on the IIT Bombay campus during the end-term examination.

Yes, you will have the opportunity to visit the IIT Bombay campus at the end of each term for in-person end-term exams.

The final graduation ceremony is also held on campus. Additional visits can be made on request, subject to approval from IIT Bombay.

All ePGD graduates will get IIT Bombay alumni status along with the following benefits:

Identity

Exclusive Email Address

Networking Opportunities

Alumni Groups

Institute Discounts

Career Services

Volunteer Opportunities

Mentorship Opportunities

Stay Connected with the Institute

IIT Bombay is responsible for the complete academic delivery, including live teaching, curriculum, assessments, and evaluations. Great Learning is the program management partner, providing support with registration, the online learning platform, and non-academic learner support.