Imagine typing a few words and watching them transform into a high-quality video with no cameras, no actors, just pure AI at work.

That’s the power of OpenAI’s Sora, a text-to-video model that can generate realistic, cinematic scenes from a short written prompt.

Whether you’re a content creator, marketer, or just an AI enthusiast, Sora is set to redefine the way we produce visual content.

In this article, we’ll explore what Sora is, how it works, and how you can use it to bring your ideas to life.

What is Sora?

OpenAI’s Sora is an advanced AI video generation model that transforms text, images, and videos into new, dynamic video content.

Designed to democratize video creation, Sora enables users to produce high-quality videos without traditional filming equipment or extensive editing skills.

Sora is available through ChatGPT subscription plans, with ChatGPT Plus offering up to 50 priority videos per month at 720p resolution and 5-second durations. In comparison, ChatGPT Pro provides up to 500 priority videos at 1080p resolution and 20-second durations, along with additional benefits.
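The plan limits above can be written down as a small lookup, for example to check whether a planned clip fits a tier before you spend a generation on it. This is only a sketch: `PlanLimits`, `PLAN_LIMITS`, and `fits_plan` are hypothetical names and not part of any OpenAI API, and the numbers are simply the ones quoted in this article, which may change.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanLimits:
    max_resolution_p: int    # highest resolution tier, e.g. 720 for 720p
    max_duration_s: int      # longest clip in seconds
    priority_videos: int     # priority videos per month


# Figures as quoted in this article; hypothetical structure, not an official API.
PLAN_LIMITS = {
    "plus": PlanLimits(max_resolution_p=720, max_duration_s=5, priority_videos=50),
    "pro": PlanLimits(max_resolution_p=1080, max_duration_s=20, priority_videos=500),
}


def fits_plan(plan: str, resolution_p: int, duration_s: float) -> bool:
    """Return True if a clip of this resolution and length is within the plan's limits."""
    limits = PLAN_LIMITS[plan]
    return (resolution_p <= limits.max_resolution_p
            and duration_s <= limits.max_duration_s)


print(fits_plan("plus", 1080, 10))  # False
print(fits_plan("pro", 1080, 10))   # True
```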

Features of Sora:

1. Text-to-Video Generation

Sora can convert written descriptions into rich video content. The more detailed the prompt, the closer the result tends to be to the user’s creative vision.

Example:

A user enters the prompt: “A fashionable woman walks along a Tokyo street lined with warm glowing neon.”

Sora interprets this description and creates a video showing the scene with detailed elements, capturing the city atmosphere & neon lights.

2. Image-to-Video Conversion

Beyond text prompts, Sora allows users to upload images, which it then animates into engaging video sequences.

Example: Given a still image of a serene beach at sunset, Sora can generate a short video in which gentle waves lap the shore, seagulls fly across the sky, and the sun gradually dips below the horizon.

3. Video Remixing and Blending

Sora enables users to enhance and modify existing videos by blending them with new elements or styles, fostering creative experimentation.

Example: The user uploads a cityscape video and chooses a “cyberpunk” style preset. Sora remixes the original footage, adding a futuristic neon color scheme, holographic billboards, and a dark atmosphere inspired by classic cyberpunk imagery.

4. Aspect Ratios and Resolutions

To cater to various platforms and purposes, Sora supports multiple aspect ratios and resolutions.

Example: A content creator requires a vertical video for a social media story. With Sora, they produce a 9:16 aspect ratio video with 1080p resolution so that it has the best possible quality and compatibility for the platform.
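To make the aspect-ratio example concrete, the pixel dimensions can be worked out from the ratio and the resolution label. The sketch below assumes the common convention that “1080p” names the shorter side of the frame; `frame_size` is a hypothetical helper written for this article, not a Sora setting.

```python
from typing import Tuple


def frame_size(aspect_w: int, aspect_h: int, short_side: int) -> Tuple[int, int]:
    """Return (width, height) in pixels, with the shorter side equal to short_side."""
    if aspect_w >= aspect_h:
        # Landscape or square: the height is the short side.
        height = short_side
        width = round(short_side * aspect_w / aspect_h)
    else:
        # Portrait: the width is the short side.
        width = short_side
        height = round(short_side * aspect_h / aspect_w)
    return width, height


print(frame_size(9, 16, 1080))   # (1080, 1920) vertical story
print(frame_size(16, 9, 1080))   # (1920, 1080) widescreen
print(frame_size(1, 1, 720))     # (720, 720) square
```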

5. Creative Tools

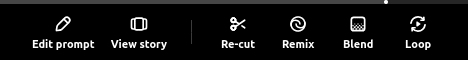

Sora offers a range of tools to refine and customize video content:

- Remix: Modify existing videos by altering elements such as color schemes, backgrounds, or visual effects.

Example: Transform a daytime landscape video into a nighttime scene with a starry sky and ambient moonlight.

- Storyboard: Visualize and plan video sequences by arranging scenes or keyframes.

Example: A filmmaker outlines a short story by creating a sequence of scenes, each representing a different part of the narrative, to preview the flow before the final generation.

- Re-cut: Trim or extend segments within a video to focus on specific moments or adjust pacing.

Example: Shorten a lengthy introduction or highlight a particular action sequence by trimming surrounding content.

- Blend: Seamlessly merge two videos to create a cohesive transition or combined scene.

Example: Blend a clip of a person walking into a forest with another of a mystical creature appearing, creating a smooth transition between the two scenes.

- Loop: Create seamless, repeating video loops ideal for backgrounds or continuous displays.

Example: Generate a looping animation of a rotating planet, perfect for use as a dynamic background in presentations.

6. User-Friendly Interface

Sora’s platform is intuitive so that users of all technical backgrounds can easily navigate and use its functionalities.

7. Content Moderation and Safety

To promote responsible use, Sora incorporates robust content moderation features:

- Watermarks and Metadata: All AI-generated videos include visible watermarks & metadata to indicate their origin, ensuring transparency.

Example: A generated video displays a subtle watermark in the corner, denoting it as AI-created content, helping viewers distinguish it from real footage.

- Depiction Restrictions: Sora limits the generation of realistic human appearances to prevent potential misuse, such as deepfakes.

Example: Attempts to create videos depicting specific individuals are blocked, safeguarding against unauthorized likeness replication.

By integrating these features, Sora empowers users to produce high-quality, creative video content efficiently, while maintaining ethical standards & user safety.

Step-by-Step: How Does OpenAI’s Sora Model Work?

1. Input Processing

Before generating a video, Sora processes the input provided by the user. This input can be text, images, or existing videos.

A) Text-to-Video Input

- The user provides a detailed text prompt describing the desired video scene.

- Sora’s natural language processing (NLP) module interprets the text, breaking it down into key elements such as:

  - Objects (e.g., “a cat, a red car”)

  - Actions (e.g., “running, jumping, swimming”)

  - Environment (e.g., “a rainy street in Tokyo, a futuristic city”)

  - Artistic Style & Mood (e.g., “cinematic, neon-lit, realistic”)

Example:

A user inputs: “A golden retriever runs through a field of wildflowers with the sun setting in the background.”

Sora identifies the dog, the field, the motion of running, and the lighting conditions of a sunset to generate a relevant scene.

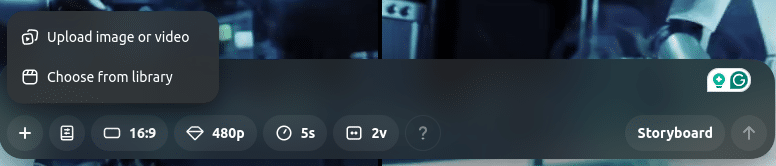

B) Image-to-Video Input

- Users can upload an image as a starting point.

- Sora analyzes the image to extract:

  - Color palettes (e.g., warm tones of a sunset, vibrant city lights)

  - Textures & Materials (e.g., grass, water, fabric)

  - Perspective & Depth Information

- The AI then animates the image, adding movement and realistic details.

Example:

A still image of a beach at sunset can be turned into a video with waves crashing, birds flying, & the sun slowly setting.

C) Video-to-Video Input (Remixing & Enhancement)

- Users can upload a video that Sora will enhance, extend, or modify.

- The model analyzes movement, frame consistency, and transitions to maintain coherence.

- Users can request style changes, add objects, or modify backgrounds.

Example:

A daytime cityscape video can be transformed into a cyberpunk night scene with neon signs and rain reflections.

2. Latent Space Representation

Once the input is processed, Sora encodes it into a latent space. This step translates the input into a high-dimensional numerical format that captures key details like:

- Object relationships

- Motion patterns

- Color schemes and textures

- Perspective and depth

This process compresses information while preserving the structure needed for video generation.

Example:

The phrase “a futuristic car speeding through a neon-lit highway” is transformed into a numerical format that helps the AI generate consistent video frames.
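The idea of compressing input while keeping its structure can be illustrated with a deliberately simple stand-in. The sketch below shrinks a tiny grayscale frame by averaging each 2x2 block of pixels into one value. Real latent encoders are learned neural networks, and nothing here reflects Sora’s actual encoder; `compress_frame` is a hypothetical toy for intuition only.

```python
from typing import List

Frame = List[List[float]]  # one grayscale frame: rows of pixel intensities


def compress_frame(frame: Frame, factor: int = 2) -> Frame:
    """Average each factor x factor block of pixels into a single latent value."""
    rows, cols = len(frame), len(frame[0])
    latent = []
    for r in range(0, rows, factor):
        latent_row = []
        for c in range(0, cols, factor):
            block = [frame[i][j]
                     for i in range(r, min(r + factor, rows))
                     for j in range(c, min(c + factor, cols))]
            latent_row.append(sum(block) / len(block))
        latent.append(latent_row)
    return latent


frame = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.5, 0.5, 0.25, 0.25],
    [0.5, 0.5, 0.25, 0.25],
]
latent = compress_frame(frame)
print(latent)  # [[0.0, 1.0], [0.5, 0.25]]
```

The 4x4 frame becomes a 2x2 grid, a quarter of the data, yet the layout of dark and bright regions is still recognizable. A learned encoder aims for the same trade-off with far richer features.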

3. Diffusion Model Processing

Sora uses diffusion models to generate video frames from scratch. This involves:

A) Starting from Noise (Reverse Diffusion)

- The model starts with random noise (similar to static on a TV screen).

- It gradually removes the noise while shaping the pixels to match the prompt.

B) Iterative Refinement

- Through multiple steps, the AI adds details, enhances textures, & improves clarity.

- The process ensures temporal consistency, meaning objects and actions remain smooth across frames.

Example:

For the golden retriever running in a field, Sora ensures:

- The dog’s fur flows naturally with the wind.

- The shadows move consistently as the sun sets.

- The background remains steady, avoiding glitches.
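The noise-to-image loop described above can be sketched in a few lines. This is a toy, deterministic (DDIM-style) sampler over a short list of numbers rather than video frames, and the “model” is an oracle that simply returns a known target. A real system replaces that oracle with a trained network conditioned on the prompt; the function names, the cosine schedule, and the step count are all assumptions made for illustration.

```python
import math
import random
from typing import Callable, List

Signal = List[float]


def alpha_bar(t: int, total_steps: int) -> float:
    """Fraction of clean signal kept at step t: 1.0 at t=0, about 0.0 at t=total_steps."""
    return math.cos(0.5 * math.pi * t / total_steps) ** 2


def sample(denoiser: Callable[[Signal, int], Signal], size: int,
           total_steps: int = 50, seed: int = 0) -> Signal:
    """Deterministic (DDIM-style) reverse diffusion from pure noise to a clean signal."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]  # start from random noise
    for t in range(total_steps, 0, -1):
        a_t = alpha_bar(t, total_steps)
        a_prev = alpha_bar(t - 1, total_steps)
        x0_hat = denoiser(x, t)  # the model's current guess of the clean signal
        # Noise implied by that guess at the current noise level.
        eps_hat = [(xi - math.sqrt(a_t) * x0i) / math.sqrt(1.0 - a_t)
                   for xi, x0i in zip(x, x0_hat)]
        # Re-mix guess and noise at the slightly cleaner level t-1.
        x = [math.sqrt(a_prev) * x0i + math.sqrt(1.0 - a_prev) * ei
             for x0i, ei in zip(x0_hat, eps_hat)]
    return x


# Stand-in for a trained network: it "cheats" by always returning a known target.
TARGET = [0.1, 0.5, 0.9, 0.5, 0.1]


def oracle_denoiser(x_t: Signal, t: int) -> Signal:
    return TARGET


result = sample(oracle_denoiser, size=len(TARGET))
print([round(v, 3) for v in result])
```

Because the stand-in denoiser always knows the answer, the loop lands on the target. With a real network the guess improves gradually, which is why many small refinement steps are used.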

4. Transformer Model for Temporal Consistency

Unlike static image generators, video AI must handle motion. Sora integrates transformer-based architectures to ensure:

- Consistent object placement (so the same cat doesn’t change shape in different frames).

- Realistic motion physics (like the way hair moves in the wind).

- Frame coherence (so there’s no flickering or weird jumps).

Sora achieves this by analyzing:

- Sequences of frames to understand movement.

- Attention mechanisms that focus on important elements like a person’s face, a moving car, or flowing water.

Example:

For a video of a dancer performing on stage, Sora ensures:

- The outfit moves naturally with the dance.

- The stage lighting changes smoothly.

- The dancer’s movements don’t glitch between frames.
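The attention mechanism mentioned above can be shown at toy scale. The sketch below implements standard scaled dot-product attention for one query over a handful of frame feature vectors, using only the standard library. The 2-D vectors are made up for illustration, and how Sora actually arranges its tokens and attention layers is not covered here.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query: Vector, keys: List[Vector]) -> Vector:
    """How strongly the query frame attends to each frame (scaled dot product)."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Weighted average of the value vectors, using the attention weights."""
    weights = attention_weights(query, keys)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


# Hypothetical feature vectors for one object in three consecutive frames.
frames = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
weights = attention_weights(frames[1], frames)
context = attend(frames[1], frames, frames)
print([round(w, 3) for w in weights])
print([round(c, 3) for c in context])
```

The middle frame places more weight on the first frame, which it resembles, than on the third. Blending information from similar frames in this way is one ingredient that helps keep an object’s appearance stable over time.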

5. Video Synthesis and Output Generation

Once Sora refines the video, it assembles and enhances the final output.

A) Frame Assembly

- The AI combines multiple video frames into a smooth sequence.

- It adjusts frame rates (e.g., 30 FPS, 60 FPS) for high-quality motion.

B) Post-Processing

- Color correction & lighting adjustments for realism.

- Stabilization & sharpness enhancement for crisp details.

- Final resolution selection (HD, 4K, etc.).

Example:

A forest scene at dawn might undergo:

- Brighter contrast adjustments to match the early morning light.

- Smoother tree movements in the wind.

- Higher-resolution textures for added realism.
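Frame assembly comes down to simple arithmetic: clip length times frame rate gives the number of frames to produce, and each frame has a fixed display time. The helpers below are hypothetical and only illustrate that relationship.

```python
from typing import List


def frame_count(duration_s: float, fps: int) -> int:
    """Number of frames needed to fill duration_s seconds at fps frames per second."""
    return round(duration_s * fps)


def frame_timestamps(duration_s: float, fps: int) -> List[float]:
    """Presentation time, in seconds, of each frame in the clip."""
    return [i / fps for i in range(frame_count(duration_s, fps))]


print(frame_count(5, 30))      # 150
print(frame_count(20, 60))     # 1200
print(frame_timestamps(1, 4))  # [0.0, 0.25, 0.5, 0.75]
```

Doubling the frame rate doubles the number of frames for the same duration, which is one reason smoother video costs more to generate.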

6. Content Moderation & Safety Features

Sora is designed with ethical considerations to prevent misuse. The model:

- Adds watermarks & metadata to indicate AI-generated content.

- Restricts highly realistic human deepfakes to prevent fraud.

- Monitors input prompts to block inappropriate content.

Example:

If someone tries to generate a fake video of a celebrity, Sora will block or alter the request to prevent misuse.

By following these steps, Sora creates high-quality, dynamic videos that push the boundaries of AI-powered video generation.

Step-by-Step Guide on How to Use OpenAI’s Sora

Step 1: Accessing Sora

Before you can start using Sora, you need access to the platform.

- Visit Sora’s official website.

- If you already have an account, click Sign In.

- If you’re new, click Sign Up and register with an email, Google, or Microsoft account.

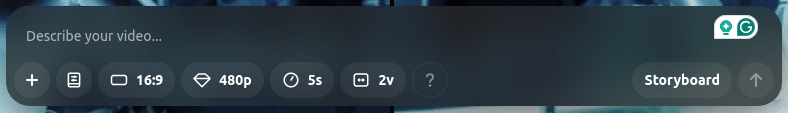

Step 2: Selecting the Type of Input

Sora allows different types of inputs based on your video generation needs.

A) Text-to-Video Generation

- If you want to create a video from scratch, select Text-to-Video Mode.

- A text box will appear where you can describe your desired video scene.

Example Prompt:

“A futuristic city with flying cars, neon-lit skyscrapers, and a sunset sky.”

B) Image-to-Video Generation

- Upload an image as a starting point.

- The AI will analyze the image and generate motion effects.

Example:

- Upload a beach sunset image → Sora adds ocean waves, flying birds, and moving clouds.

C) Video-to-Video Editing

- If you have an existing video, you can enhance, modify, or extend it.

- Options include style changes, object addition/removal, and animation enhancements.

Example:

- Upload a slow-motion running video → Convert it into a cyberpunk-styled animation.

Step 3: Writing a High-Quality Prompt

Sora relies on detailed prompts for better accuracy and creativity.

A) Structure of a Good Prompt

- Main Subject – What the video is about.

- Actions & Motion – What’s happening in the scene.

- Background & Environment – Where the scene takes place.

- Style & Mood – Animation style, cinematic, realistic, etc.

Example:

“A robot chef in a futuristic kitchen preparing sushi. The scene is lit with cool blue neon lights, & steam rises from the dishes. The camera moves smoothly from a first-person perspective.”

Tip:

- Be specific (mention details like time of day, colors, and movements).

- Avoid vague prompts like “A cool animation”—Sora needs clear instructions.
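One way to keep prompts consistent is to fill in the four parts from the structure above and assemble them into a single string. The sketch below is a hypothetical helper for drafting prompt text; it does not call Sora, and the `ScenePrompt` class and its field names are assumptions made for this article.

```python
from dataclasses import dataclass, fields


@dataclass
class ScenePrompt:
    subject: str       # main subject: what the video is about
    action: str        # actions and motion in the scene
    environment: str   # background and setting
    style: str         # style and mood

    def render(self) -> str:
        """Combine the four parts into one prompt, rejecting empty parts."""
        for field in fields(self):
            if not getattr(self, field.name).strip():
                raise ValueError(f"'{field.name}' is empty; be specific")
        return f"{self.subject} {self.action} {self.environment}. Style: {self.style}."


prompt = ScenePrompt(
    subject="A robot chef",
    action="preparing sushi",
    environment="in a futuristic kitchen lit with cool blue neon lights",
    style="cinematic, smooth first-person camera",
)
print(prompt.render())
```

Leaving any part blank raises an error, which is a simple guard against vague prompts.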

Step 4: Customizing Video Settings

Once you submit a prompt, Sora provides options to customize the output.

A) Resolution & Frame Rate

- Choose Standard (HD), 4K, or Cinematic Quality based on your needs.

- Select a frame rate (30 FPS for smooth motion, 60 FPS for ultra-fluid videos).

B) Duration & Length

- Most AI-generated videos have a default length (e.g., 5-15 seconds).

- If longer videos are supported, you can extend duration by generating additional frames.

C) Motion Style (if applicable)

Choose between:

- Realistic Animation

- Cartoon/Anime Style

- Cinematic Slow Motion

Example:

- If creating an action scene, select 60 FPS, cinematic lighting, and slow-motion effects.

Step 5: Generating the Video

Once all settings are configured, click Generate Video.

A) Processing Time

- Sora analyzes the input and begins generating frames.

- Depending on the complexity of the scene, processing may take a few minutes.

B) Preview & Refinement

- After generation, you’ll see a low-resolution preview.

- If needed, you can make edits or adjust elements (e.g., colors, lighting, or movement speed).

Example:

- If a robot’s movement is too fast, adjust the motion speed before final rendering.

Step 6: Downloading & Sharing the Video

Once satisfied with the output, click Download to save your video.

A) File Formats Available

- MP4 (Standard video format)

- GIF (For short animations)

- MOV (For professional editing in tools like Adobe Premiere)

B) Sharing Options

- Directly upload to social media (YouTube, Instagram, TikTok).

- Generate a sharable link for quick previews.

Pro Tip:

If using AI videos for marketing, add captions or voiceovers to make content more engaging.

Step 7: Post-Editing & Enhancements (Optional)

Even though Sora generates high-quality videos, post-editing can further enhance them.

A) Using Video Editing Software

- Import the Sora video into tools like Adobe Premiere Pro, DaVinci Resolve, or CapCut.

- Add text overlays, sound effects, and transitions.

B) Adding AI Voiceovers

- Use AI voice generators like ElevenLabs to add narration.

- Match voice tone with the video theme (e.g., robotic for sci-fi, warm for storytelling).

C) Enhancing with Visual Effects

- Add slow motion, zoom effects, or background music for a cinematic feel.

Example:

- A historical documentary-style AI video can be refined with color grading and voice narration.

Bonus: Tips to Get the Best Out of Sora

1. Use Specific & Descriptive Prompts

- Instead of “a cat playing”, try “a fluffy white cat jumping playfully on a green sofa in a cozy living room.”

2. Experiment with Different Styles

- Try realistic, anime, cinematic, or abstract styles to see what fits your needs.

3. Keep Video Length Short & Focused

- AI video models are optimized for short clips (10-30 sec), so focus on one key scene per video.

4. Use External Editing for Professional Results

- Combine AI-generated footage with traditional video editing for higher-quality productions.

Comparison Table: Sora vs. Other Similar Models

| Aspect | OpenAI’s Sora | Google’s Veo 2 | Hailuo MiniMax | Haiper | Pika |
| --- | --- | --- | --- | --- | --- |
| Primary focus | Creativity, storytelling, and flexibility | Realism, precision, and motion physics | Realistic motion and high-quality video rendering | Prompt adherence and artistic video generation | User-friendly AI video generation |
| Video quality | High-quality with cinematic, artistic visuals | Ultra-realistic, up to 4K resolution | High-quality, realistic videos | Artistic and stylized video outputs | High-quality videos with a focus on user accessibility |
| Motion handling | Smooth motion but less physics-focused | Physics-based, natural object movement | Realistic depiction of human emotion and motion | Emphasis on artistic expression over precise motion physics | Smooth motion with an emphasis on creative animations |
| Creative capability | Excels in imaginative and surreal visuals | Best for realistic scenarios | Capable of generating complex scenes with multiple characters | Offers a variety of artistic styles and interpretations | Focuses on creative and engaging video content |
| User experience | User-friendly, accessible to casual creators | Professional, aimed at precision users | Features a user-friendly interface with options to explore other users’ clips | Provides an intuitive platform with a focus on prompt accuracy | Offers memberships with credits for video generation |
| Video duration | Up to 60 seconds | Up to 1 minute | Offers various durations with options for image-to-video and text-to-video generations | Supports various video lengths depending on the model and subscription | Video duration varies based on membership and credit usage |
| Use cases | Art, storytelling, social media, entertainment | Advertising, documentaries, engineering, education | Social media content, artistic projects, and realistic animations | Artistic video creations, social media content, and experimental projects | Creative content generation for social media and marketing |
| Strengths | Adaptability, artistic styles, and fantasy visuals | Photorealism, object interaction, and clarity | Continuous improvements with a focus on realistic motion and high-quality rendering | Strong prompt adherence with a focus on artistic video generation | User-friendly platform with a focus on creative animations |
| Limitations | Slightly lower focus on perfect physics | Limited creativity for surreal outputs | May require a subscription for access to advanced features and higher-quality outputs | Subscription-based model with credits, which may limit extensive use without additional purchases | Membership-based access with credits, potentially limiting for high-volume users |
| Pricing | Basic plan at $20/month for 480p or 720p videos (5-10 sec); Pro plan at $200/month for 1080p videos (up to 20 sec) | Not specified in the provided sources | Base plan at $9/month for 1,000 credits, no watermarks, and bonus credits for daily login | Offers 100 free credits; $10/month for 1,500 credits on the latest model and unlimited generations on previous models | Memberships starting at $10/month for 660 monthly credits |

Ethical Considerations While Using OpenAI’s Sora

- Content Misuse and Destructive Outputs

Users of Sora are not allowed to create or share content that encourages harm, such as bullying, harassment, defamation, discrimination, sexual exploitation of children, or incitement of violence and hatred.

- Privacy and Consent

Because Sora can create realistic videos, strict measures are needed to prevent people’s likenesses from being used without their consent and to protect their privacy rights.

- Authenticity and Deepfakes

Sora’s ability to produce realistic videos has the potential to blur the line between what is real and what is fake, risking deepfakes, which could contribute to spreading misinformation and eroding public confidence.

- Impact on Creative Professions

The integration of AI in creative fields has sparked debates about job displacement and the exploitation of artists’ work without proper compensation, highlighting the need for ethical collaboration and fair practices.

Conclusion

OpenAI’s Sora is transforming AI-driven video creation, offering huge potential alongside real ethical and technical challenges.

As AI keeps revolutionizing creative industries, it’s essential to remain ahead with the right expertise.

If you want to learn about AI’s potential in media, computer vision, or generative models, Great Learning’s AI and Machine Learning course offers professional training to equip you with mastery over these new-age technologies.

Equip yourself with industry-relevant knowledge and future-proof your career in the ever-evolving AI field.
