- What is Data Ingestion?

- Types of Data Ingestion

- How Does Data Ingestion Work?

- Batch vs. Real-Time Data Ingestion

- Challenges in Data Ingestion

- Best Practices for Efficient Data Ingestion

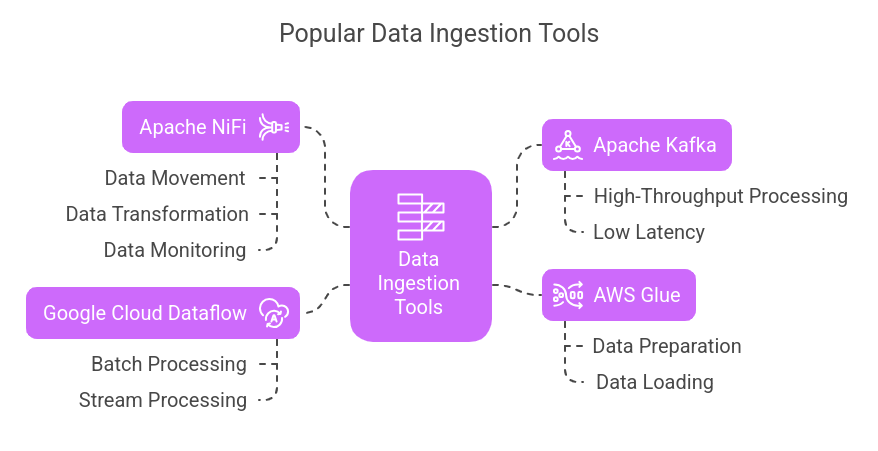

- Popular Data Ingestion Tools

- Real-World Applications of Data Ingestion

- Future Trends in Data Ingestion

- Conclusion

- Frequently Asked Questions

Data ingestion is a critical process in data management. It allows businesses to gather data from multiple channels, such as databases, APIs, or streaming platforms, and make it available for further processing.

In this article, we’ll explore the types of data ingestion, how the process works, common challenges, best practices, and the tools that help organizations collect and process data efficiently for analysis.

What is Data Ingestion?

Imagine you run a company that collects data from social media, customer transactions, IoT sensors, and databases. Before you can analyze this data for insights, you must gather it in one place.

Data ingestion is the process of collecting, importing, and preparing this data for storage and analysis.

It acts as the first step in the data pipeline, ensuring that raw data is structured and ready for use in business intelligence, machine learning, or reporting.

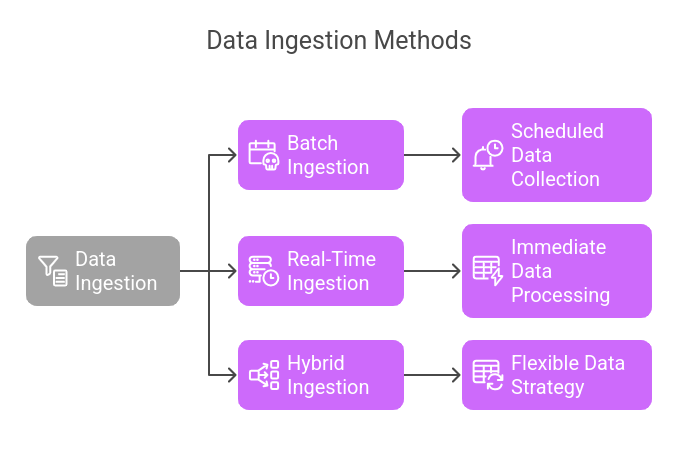

Types of Data Ingestion

There are three main types of data ingestion:

- Batch Ingestion – Data is collected in chunks at scheduled intervals (e.g., daily or hourly updates). This is useful for handling large amounts of data when real-time updates aren’t necessary, such as monthly sales reports.

- Real-Time Ingestion – Data is ingested as soon as it is generated, which is essential for applications like fraud detection, stock market analysis, or IoT sensor data.

- Hybrid Ingestion – A mix of batch and real-time, depending on how time-sensitive the data is. For example, financial institutions might use real-time ingestion for fraud detection while using batch ingestion for daily reports.
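To make the hybrid approach concrete, here is a minimal Python sketch, assuming events arrive as dictionaries with a `type` field. The class name `HybridIngestor`, the `batch_size` threshold, and the two handler callbacks are hypothetical illustrations, not part of any specific tool: time-critical event types (such as transactions screened for fraud) are handled immediately, while everything else is buffered and loaded in batches.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class HybridIngestor:
    """Hypothetical router: real-time path for urgent events, batch path for the rest."""

    realtime_types: set
    on_realtime: Callable[[dict], None]  # e.g. a fraud-check hook (assumed)
    on_batch: Callable[[list], None]     # e.g. a bulk warehouse load (assumed)
    batch_size: int = 100
    _buffer: list = field(default_factory=list, init=False)

    def ingest(self, event: dict) -> None:
        if event["type"] in self.realtime_types:
            # Real-time ingestion: process each event as soon as it arrives.
            self.on_realtime(event)
        else:
            # Batch ingestion: accumulate events and load them in chunks.
            self._buffer.append(event)
            if len(self._buffer) >= self.batch_size:
                self.flush()

    def flush(self) -> None:
        """Load whatever is buffered; could also be called on a schedule."""
        if self._buffer:
            self.on_batch(self._buffer)
            self._buffer = []


# Usage: transactions go straight to the fraud check; page views are batched.
fraud_checks, batch_loads = [], []
ingestor = HybridIngestor({"transaction"}, fraud_checks.append, batch_loads.append, batch_size=2)
for event in [
    {"type": "transaction", "amount": 950},
    {"type": "page_view", "page": "/home"},
    {"type": "page_view", "page": "/cart"},
    {"type": "page_view", "page": "/checkout"},
]:
    ingestor.ingest(event)
ingestor.flush()  # e.g. the end-of-day batch run
```

In a production system the batch flush would typically also run on a timer (for example, hourly), so that a partially filled buffer is not held indefinitely.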

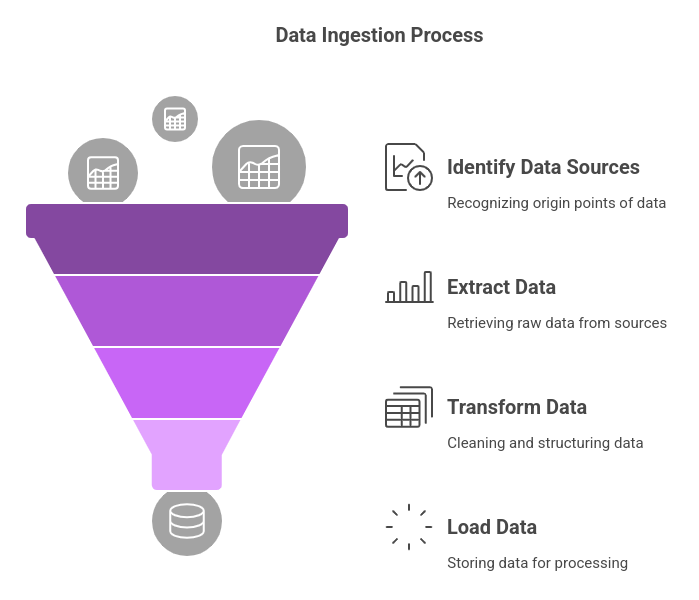

How Does Data Ingestion Work?

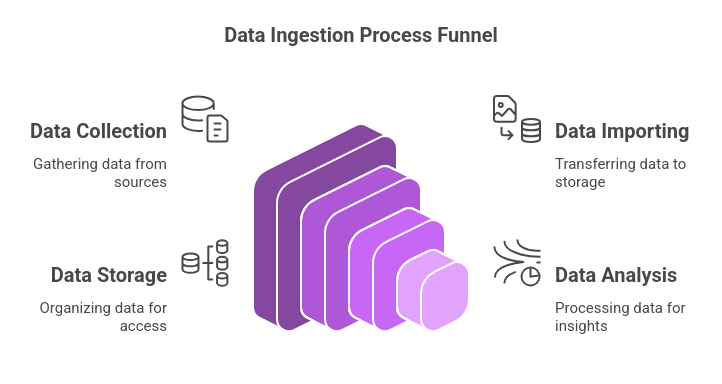

The process involves four key steps:

- Identifying Data Sources – Data can come from databases, APIs, cloud storage, IoT devices, or logs.

- Extracting Data – Retrieving raw data from these sources.

- Transforming Data – Cleaning, structuring, and standardizing data to make it usable.

- Loading Data – Storing it in a data warehouse, data lake, or analytics platform for further processing.

For example, an e-commerce website might ingest customer purchase data from different regions and store it in a central database for real-time insights on trending products.
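The four steps can be sketched in a few lines of Python using only the standard library. This is an illustrative sketch of the e-commerce example, not a production pipeline: the regional CSV exports are hypothetical in-memory strings, and an in-memory SQLite database stands in for the central database or warehouse.

```python
import csv
import io
import sqlite3

# Step 1 (identify sources): hypothetical regional CSV exports, held in memory.
SOURCES = {
    "eu": "order_id,product,amount\n1001,  Laptop ,1200.50\n1002,mouse,25\n",
    "us": "order_id,product,amount\n2001,Laptop,1150\n2002,,30\n",
}


def extract(region: str, raw_csv: str) -> list:
    """Step 2: read raw rows from a source and tag them with their region."""
    return [dict(row, region=region) for row in csv.DictReader(io.StringIO(raw_csv))]


def transform(rows: list) -> list:
    """Step 3: clean and standardize names, cast types, drop incomplete rows."""
    cleaned = []
    for row in rows:
        product = row["product"].strip().lower()
        if not product:
            continue  # skip records missing a required field
        cleaned.append((int(row["order_id"]), row["region"], product, float(row["amount"])))
    return cleaned


def load(conn: sqlite3.Connection, records: list) -> None:
    """Step 4: store records centrally (SQLite stands in for a warehouse)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS purchases "
        "(order_id INTEGER PRIMARY KEY, region TEXT, product TEXT, amount REAL)"
    )
    # INSERT OR REPLACE keeps re-runs idempotent for the same order_id.
    conn.executemany("INSERT OR REPLACE INTO purchases VALUES (?, ?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
for region, raw in SOURCES.items():
    load(conn, transform(extract(region, raw)))

# Which products are trending across all regions?
top = conn.execute(
    "SELECT product, COUNT(*) FROM purchases GROUP BY product ORDER BY COUNT(*) DESC"
).fetchall()
print(top)  # [('laptop', 2), ('mouse', 1)]
```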

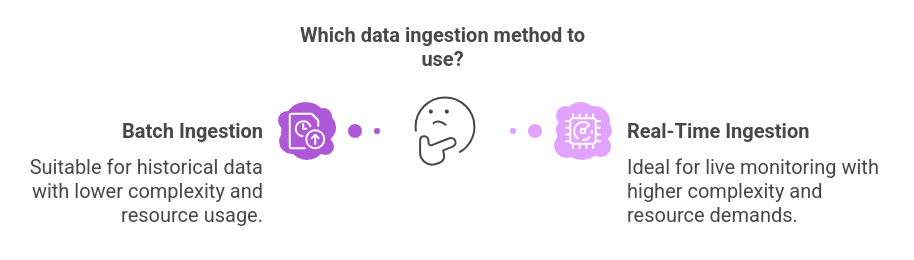

Batch vs. Real-Time Data Ingestion

| Feature | Batch Ingestion | Real-Time Ingestion |
| --- | --- | --- |
| Processing Frequency | Periodic | Continuous |
| Best For | Historical data, reports | Live monitoring, fraud detection |
| Complexity | Lower | Higher |
| Resource Usage | Lower | Higher |

If you’re streaming live sports data, real-time ingestion is ideal. If you’re compiling end-of-month financial summaries, batch ingestion is sufficient.
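The difference in the table can be illustrated with a small Python sketch, assuming events are dictionaries with a `product` field (a hypothetical schema). Both approaches compute the same product counts: the batch version runs periodically over everything collected since the last run, while the streaming version updates its result as each event arrives, which keeps the answer current at the cost of an always-running consumer.

```python
from collections import Counter
from typing import Iterable


def batch_job(events: Iterable[dict]) -> Counter:
    """Batch: run on a schedule (e.g. nightly) over all events collected so far."""
    return Counter(event["product"] for event in events)


class StreamingCounter:
    """Real-time: keep running state and update it on every incoming event."""

    def __init__(self) -> None:
        self.counts: Counter = Counter()

    def on_event(self, event: dict) -> None:
        # The result is up to date immediately after each event.
        self.counts[event["product"]] += 1


events = [{"product": "ticket"}, {"product": "jersey"}, {"product": "ticket"}]

stream = StreamingCounter()
for event in events:
    stream.on_event(event)

print(stream.counts == batch_job(events))  # True: same answer, different timing
```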

Challenges in Data Ingestion

Even though data ingestion is essential, it comes with some challenges:

- Handling Large Data Volumes – Companies now manage terabytes or even petabytes of data, so ingestion pipelines must move and store it efficiently.

- Data Format Variability – Data comes in different formats (structured, semi-structured, unstructured), which makes it harder to standardize.

- Scalability Issues – As the amount of data grows, systems must scale without slowdowns.

- Security & Compliance Risks – Sensitive data must be protected, and its handling must comply with regulations such as GDPR.
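One common way to handle format variability is to map every source into a single target schema before loading. The Python sketch below assumes a hypothetical customer schema (`customer_id`, `email`, `country`) and two illustrative sources: a structured CSV export and semi-structured JSON Lines records with nested fields. The field names and normalization rules are assumptions for illustration.

```python
import csv
import io
import json

# Hypothetical target schema that every source is mapped into.
TARGET_FIELDS = ("customer_id", "email", "country")


def from_csv(text: str) -> list:
    """Structured source: flat rows whose column names already match."""
    return list(csv.DictReader(io.StringIO(text)))


def from_json_lines(text: str) -> list:
    """Semi-structured source: one JSON object per line, with nested fields."""
    records = []
    for line in text.splitlines():
        if not line.strip():
            continue
        obj = json.loads(line)
        records.append({
            "customer_id": obj["id"],
            "email": obj.get("contact", {}).get("email"),
            "country": obj.get("address", {}).get("country"),
        })
    return records


def normalize(record: dict) -> dict:
    """Standardize types and casing so downstream consumers see one format."""
    out = {name: record.get(name) for name in TARGET_FIELDS}
    out["customer_id"] = str(out["customer_id"])
    if out["email"]:
        out["email"] = out["email"].strip().lower()
    if out["country"]:
        out["country"] = out["country"].strip().upper()
    return out


csv_text = "customer_id,email,country\n7,Ann@Example.com,us\n"
jsonl_text = '{"id": 8, "contact": {"email": "bob@example.com"}, "address": {"country": "de"}}\n'

records = [normalize(r) for r in from_csv(csv_text) + from_json_lines(jsonl_text)]
# [{'customer_id': '7', 'email': 'ann@example.com', 'country': 'US'},
#  {'customer_id': '8', 'email': 'bob@example.com', 'country': 'DE'}]
```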

Best Practices for Efficient Data Ingestion

To optimize data ingestion, organizations should follow these best practices:

- Choose the Right Ingestion Strategy – Use batch or real-time ingestion based on business needs.

- Automate Data Pipelines – Reduce manual intervention with automation tools.

- Ensure Data Quality – Clean and standardize data before processing.

- Monitor Performance – Track ingestion failures and latency issues.

- Use Cloud Solutions – Cloud-based ingestion tools help scale operations efficiently.
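Several of these practices (data quality checks, automated retries, and performance monitoring) can be combined in a single ingestion step. The following is a minimal Python sketch under stated assumptions: the required fields, the validation rule, and the `sink` callable that loads a batch are hypothetical, and a real pipeline would usually delegate retries and metrics to an orchestration or monitoring tool.

```python
import logging
import time
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ingestion")

REQUIRED_FIELDS = ("order_id", "amount")  # hypothetical quality rules


def is_valid(record: dict) -> bool:
    """Data quality gate: required fields present and amount a non-negative number."""
    if any(record.get(name) in (None, "") for name in REQUIRED_FIELDS):
        return False
    try:
        return float(record["amount"]) >= 0
    except (TypeError, ValueError):
        return False


def run_ingestion(records: list, sink: Callable[[list], None],
                  retries: int = 3, backoff_s: float = 0.5) -> dict:
    """Validate, load with retries and exponential backoff, then report metrics."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    start = time.perf_counter()
    good = [r for r in records if is_valid(r)]
    for attempt in range(1, retries + 1):
        try:
            sink(good)
            break
        except ConnectionError as exc:  # treat connection problems as transient
            log.warning("load attempt %d failed: %s", attempt, exc)
            if attempt == retries:
                raise
            time.sleep(backoff_s * 2 ** (attempt - 1))
    metrics = {
        "loaded": len(good),
        "rejected": len(records) - len(good),
        "attempts": attempt,
        "latency_s": round(time.perf_counter() - start, 3),
    }
    log.info("ingestion metrics: %s", metrics)
    return metrics


calls = []


def flaky_sink(batch: list) -> None:
    """Hypothetical warehouse loader that fails on its first call."""
    calls.append(batch)
    if len(calls) == 1:
        raise ConnectionError("warehouse temporarily unavailable")


metrics = run_ingestion(
    [{"order_id": 1, "amount": "19.99"}, {"order_id": 2, "amount": "-5"}, {"amount": "3"}],
    flaky_sink,
    backoff_s=0.1,
)
# metrics["loaded"] == 1, metrics["rejected"] == 2, metrics["attempts"] == 2
```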

Popular Data Ingestion Tools

Several tools facilitate efficient data ingestion:

- Apache NiFi – A data flow automation tool that enables easy movement, transformation, and monitoring of data.

- Apache Kafka – A distributed streaming platform that handles high-throughput data streams in real time with low latency.

- AWS Glue – A fully managed ETL service that simplifies the process of preparing and loading data into storage systems.

- Google Cloud Dataflow – A cloud-based service for scalable data processing in both batch and streaming modes.

Real-World Applications of Data Ingestion

Data ingestion is used across many industries, including:

- Healthcare – real-time patient monitoring and diagnosis.

- Finance – fraud detection and stock market analytics.

- E-commerce – customer behavior analysis and recommendation engines.

- IoT – smart home automation and industrial monitoring.

For example, a hospital can ingest a patient’s vital signs in real time to detect critical conditions immediately, while an e-commerce company can collect and ingest its customer data for analysis to improve its sales strategy.
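The hospital example relies on real-time ingestion: each reading is checked the moment it arrives rather than after a scheduled batch. Here is a minimal Python sketch, assuming readings arrive as dictionaries from some stream (a plain list stands in for it here); the field names and heart-rate thresholds are hypothetical values chosen for illustration, not clinical guidance.

```python
from typing import Iterable, Iterator

# Hypothetical alert thresholds for illustration only, not clinical guidance.
HEART_RATE_LOW, HEART_RATE_HIGH = 40, 130


def monitor(readings: Iterable[dict]) -> Iterator[str]:
    """Check each vital-sign reading as soon as it is ingested and yield alerts."""
    for reading in readings:
        hr = reading["heart_rate"]
        if hr < HEART_RATE_LOW or hr > HEART_RATE_HIGH:
            yield f"ALERT patient={reading['patient_id']} heart_rate={hr} t={reading['t']}"


# A list stands in for a live feed; with a real stream, alerts fire per reading.
feed = [
    {"patient_id": "p1", "t": 0, "heart_rate": 72},
    {"patient_id": "p1", "t": 1, "heart_rate": 145},
    {"patient_id": "p2", "t": 1, "heart_rate": 38},
]
alerts = list(monitor(feed))
# ['ALERT patient=p1 heart_rate=145 t=1', 'ALERT patient=p2 heart_rate=38 t=1']
```

Because `monitor` is a generator, a consumer can act on each alert as soon as the triggering reading is ingested instead of waiting for the whole feed.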

Future Trends in Data Ingestion

The field of data ingestion is evolving with advancements like:

- AI-Powered Data Ingestion – AI automates data processing, reducing errors.

- Edge Computing – Processing data closer to the source instead of sending all raw data to centralized, cloud-based ingestion.

- Serverless Ingestion Solutions – Cost-effective cloud-based ingestion without managing infrastructure.

- Data Mesh Architecture – Decentralized data ownership for better scalability.

Conclusion

Data ingestion is one of the key steps in the data analytics pipeline, because it is where raw data is first gathered and organized. It covers the collection, processing, and storage of data, ensuring that the combined result is valuable for better decision-making.

Choosing the right tools and the right strategy, whether batch or real-time ingestion, improves throughput and data quality, leading to more actionable business decisions. As data-driven industries continue to grow, optimizing data ingestion will remain a top priority for organizations worldwide.

Frequently Asked Questions

1. How does data ingestion differ from ETL (Extract, Transform, Load)?

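Data ingestion focuses on collecting raw data from its sources and moving it into a destination system, sometimes with only light transformation. ETL is a more structured pattern in which data is extracted, transformed into a consistent, analysis-ready format, and then loaded into a target system such as a data warehouse.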

2. What are the security risks associated with data ingestion?

Security risks include unauthorized access, data breaches, compliance violations, and insecure data transfers, which can be mitigated through encryption and access controls.

3. How does data ingestion handle unstructured data like images and videos?

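Unstructured data such as images and videos is usually ingested as raw files, often into a data lake or a distributed file system such as Apache Hadoop’s HDFS, together with descriptive metadata. Frameworks such as TensorFlow can then convert these files into machine-readable formats for analysis.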

4. Can data ingestion be performed on-premises, or is it limited to cloud platforms?

Data ingestion can be done both on-premises and in the cloud, depending on business requirements, scalability needs, and security considerations.

5. What is the role of APIs in data ingestion?

APIs facilitate seamless data transfer between applications, allowing real-time ingestion from external sources like third-party services, social media, or IoT devices.
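Ingestion jobs often poll APIs that return results in pages, so the job has to follow a cursor until no pages remain. The Python sketch below assumes a hypothetical API contract in which each page holds a list of records plus a `next_cursor` value that is absent on the last page; the endpoint URL and field names in `http_fetch` are placeholders, not a real service. Injecting the fetch function keeps the pagination logic testable without network access.

```python
import json
import urllib.request
from typing import Callable, Iterator, List, Optional, Tuple
from urllib.parse import urlencode

# A page is (records, next_cursor); next_cursor is None on the last page.
Page = Tuple[List[dict], Optional[str]]


def ingest_from_api(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[dict]:
    """Follow the pagination cursor and yield every record exactly once."""
    cursor: Optional[str] = None
    while True:
        records, cursor = fetch_page(cursor)
        yield from records
        if cursor is None:
            break


def http_fetch(cursor: Optional[str]) -> Page:
    """Hypothetical fetcher for a placeholder endpoint returning JSON such as
    {"data": [...], "next_cursor": "..."}."""
    query = urlencode({"cursor": cursor}) if cursor else ""
    url = "https://api.example.com/v1/events?" + query
    with urllib.request.urlopen(url, timeout=10) as resp:
        body = json.load(resp)
    return body["data"], body.get("next_cursor")


# Offline check with canned pages instead of a live API.
pages = {None: ([{"id": 1}, {"id": 2}], "c2"), "c2": ([{"id": 3}], None)}
print([r["id"] for r in ingest_from_api(lambda c: pages[c])])  # [1, 2, 3]
```

Against a real service you would pass `http_fetch` (adapted to that API’s actual response format) instead of the canned pages.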