Ever wondered how Google understands your search so well?

Part of the answer is BERT, an AI language model that helps computers understand words in context.

Unlike older models that read text in a single direction, BERT looks at the words on both sides of a word to grasp its meaning. Let’s explore how it works and why it matters for natural language processing.

What is BERT?

BERT, which stands for Bidirectional Encoder Representations from Transformers, is a language model developed by Google AI in 2018.

Unlike earlier models that processed text in a single direction, BERT reads text bidirectionally, allowing it to understand the context of a word based on both its preceding and following words.

Key aspects of BERT include:

- Bidirectional Context: By analyzing text from both directions, BERT captures the full context of a word, leading to a deeper understanding of language.

- Transformer Architecture: BERT utilizes transformers, which are models designed to handle sequential data by focusing on the relationships between all words in a sentence simultaneously.

- Pre-training and Fine-tuning: Initially, BERT is pre-trained on large text datasets to learn language patterns. It can then be fine-tuned for specific tasks like question answering or sentiment analysis, enhancing its performance in various applications.

BERT’s bidirectional method matters in natural language processing (NLP) because it lets a model determine the meaning of a word from its context.

This leads to more accurate interpretations, particularly in complex sentences where the meaning of a word depends on the words both before and after it.

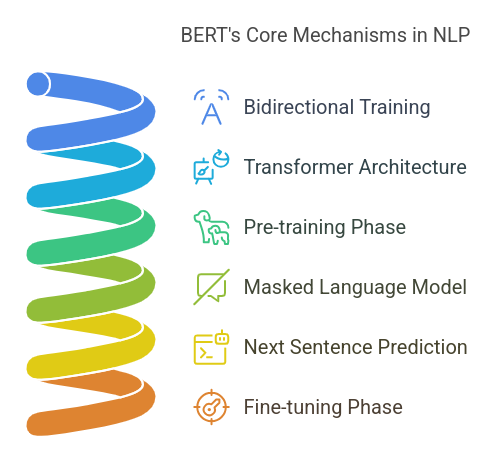

How BERT Works: The Core Mechanisms

BERT has significantly improved how well machines understand human language. Let’s walk through its core mechanisms step by step:

1. Bidirectional Training: Understanding Context from Both Left and Right

Most traditional language models process text unidirectionally, either left-to-right or right-to-left. BERT, on the other hand, is trained bidirectionally: when it builds the representation of a word, it can draw on both the words that come before it and the words that follow it. This lets BERT interpret each word in light of the whole sentence.
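To make the contrast concrete, here is a minimal sketch of which words each approach can "see" when it processes the word "bank". The function names and the example sentence are illustrative assumptions, not part of any library or of BERT's actual code.

```python
def causal_mask(n):
    """Left-to-right model: position i may only see positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """BERT-style encoder: every position may see every other position."""
    return [[1] * n for _ in range(n)]


def visible_context(tokens, mask, i):
    """Return the tokens that position i is allowed to look at."""
    return [tok for tok, allowed in zip(tokens, mask[i]) if allowed]


tokens = ["she", "sat", "by", "the", "bank", "of", "the", "river"]
i = tokens.index("bank")

left_only = visible_context(tokens, causal_mask(len(tokens)), i)
both_sides = visible_context(tokens, bidirectional_mask(len(tokens)), i)

print(left_only)   # words up to and including "bank"
print(both_sides)  # the whole sentence, including "river"
```

Only the bidirectional view includes "river", the word that tells a reader this "bank" is a riverbank and not a financial institution.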

2. Transformer Architecture: Self-Attention Mechanism for Contextual Learning

At the core of BERT’s architecture is the Transformer model, which utilizes a self-attention mechanism. This mechanism lets BERT weigh how relevant every other word in a sentence is to each word, facilitating a deeper understanding of context and of the relationships between words.
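The weighting described above can be sketched with scaled dot-product attention, the operation at the heart of the Transformer. This is a simplified, single-head version in plain Python: the toy vectors are made up, and the learned projection matrices that a real model uses to produce queries, keys, and values are left out.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    weights = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * v[c] for w, v in zip(row, values)) for c in range(len(values[0]))]
        for row in weights
    ]
    return outputs, weights


# Toy 2-dimensional vectors for three tokens (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# For simplicity Q = K = V = x; a real Transformer derives them from x
# with three learned projection matrices.
outputs, weights = self_attention(x, x, x)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and says how much one token draws on every token in the sentence, itself included, when its new representation is computed.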

3. Pre-training and Fine-tuning: Two-Step Learning Process

BERT undergoes a two-step learning process:

Pre-training: In this phase, BERT is trained on large unlabeled text corpora using two unsupervised tasks:

- Masked Language Modeling (MLM): Some of the input tokens are randomly masked, and BERT learns to predict them from the surrounding context.

- Next Sentence Prediction (NSP): BERT learns to predict whether the second sentence of a pair actually follows the first in the original text, which helps it model relationships between sentences.

Fine-tuning: After pre-training, BERT is fine-tuned on specific tasks, such as sentiment analysis or question answering, by adding task-specific layers and training on smaller, task-specific datasets.
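As a rough illustration of what "adding task-specific layers" can mean for a classification task, the sketch below puts a single linear layer and a softmax on top of the vector BERT produces for its special [CLS] token. All numbers are made up, the 4-dimensional vector stands in for a much larger real one, and `classify` is a hypothetical helper, not a library function.

```python
import math


def classify(cls_vector, weights, bias):
    """Linear layer + softmax over the [CLS] representation."""
    logits = [
        sum(w * h for w, h in zip(row, cls_vector)) + b
        for row, b in zip(weights, bias)
    ]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Stand-in for the encoder's output at the [CLS] position. A real BERT-base
# vector has 768 dimensions; 4 made-up values keep the example readable.
cls_vector = [0.2, -1.0, 0.5, 0.3]

# Task-specific parameters for a 2-class task (e.g. negative / positive).
# These are the new weights that fine-tuning introduces; values are made up.
weights = [[0.1, 0.4, -0.2, 0.0],
           [-0.3, -0.5, 0.6, 0.2]]
bias = [0.0, 0.1]

probs = classify(cls_vector, weights, bias)
print(probs)  # two probabilities that sum to 1
```

During fine-tuning, these new weights are trained together with the pre-trained encoder on the labeled task data.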

4. Masked Language Model (MLM): Predicting Missing Words in a Sentence

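During pre-training, BERT employs the MLM task: it randomly selects about 15% of the tokens in each input sequence and learns to predict the original tokens from the context provided by the rest. In the original setup, most of the selected tokens are replaced with a special [MASK] token, while a small share are swapped for a random token or left unchanged, so that the model does not come to rely on [MASK] appearing in its input. This process helps BERT develop a deep understanding of language patterns and word relationships.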
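The masking step can be sketched in a few lines. The version below follows the recipe from the original BERT paper, where a selected token is replaced by [MASK] 80% of the time, by a random token 10% of the time, and left unchanged 10% of the time. It is a simplification: it works on whole words instead of WordPiece tokens and selects each position independently, and `mask_tokens` is an illustrative name, not BERT's actual preprocessing function.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Return (corrupted_tokens, labels) following the 80/10/10 rule.

    labels[i] holds the original token where a prediction is required
    and None everywhere else.
    """
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected: no prediction needed
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: leave the token unchanged
    return corrupted, labels


rng = random.Random(0)  # fixed seed so the run is repeatable
tokens = "the quick brown fox jumps over the lazy dog".split() * 3
vocab = sorted(set(tokens))

corrupted, labels = mask_tokens(tokens, vocab, rng)
for original, shown, label in zip(tokens, corrupted, labels):
    if label is not None:
        print(f"{original!r} shown as {shown!r}")
```

The model only receives `corrupted`; the training loss is computed at the positions where `labels` is not `None`.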

5. Next Sentence Prediction (NSP): Understanding Sentence Relationships

In the NSP task, BERT is given pairs of sentences and trained to predict whether the second sentence actually follows the first in the source text. Through this task, BERT learns to model the relationship between sentences, an ability that is important for tasks such as question answering and natural language inference.
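Here is a minimal sketch of how one NSP training example might be assembled. It follows the input layout from the original paper ([CLS], sentence A, [SEP], sentence B, [SEP], plus segment ids), with half of the pairs using the true next sentence. As a simplification, the negative sentence is drawn from the same small list instead of a different document, and `make_nsp_example` is a hypothetical helper.

```python
import random


def make_nsp_example(sentences, i, rng):
    """Build one NSP training example starting from sentences[i].

    Half the time sentence B is the real next sentence (label "IsNext");
    otherwise it is a randomly chosen other sentence (label "NotNext").
    Requires i + 1 < len(sentences) and at least three sentences.
    """
    sent_a = sentences[i]
    if rng.random() < 0.5:
        sent_b, label = sentences[i + 1], "IsNext"
    else:
        candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
        sent_b, label = rng.choice(candidates), "NotNext"

    tokens = ["[CLS]"] + sent_a.split() + ["[SEP]"] + sent_b.split() + ["[SEP]"]
    # Segment ids tell the model which sentence each token belongs to.
    n_a = len(sent_a.split()) + 2  # [CLS] + sentence A + first [SEP]
    segment_ids = [0] * n_a + [1] * (len(tokens) - n_a)
    return tokens, segment_ids, label


sentences = [
    "the man went to the store",
    "he bought a gallon of milk",
    "penguins are flightless birds",
    "they live in the southern hemisphere",
]

rng = random.Random(1)  # fixed seed so the run is repeatable
tokens, segment_ids, label = make_nsp_example(sentences, 0, rng)
print(label)
print(tokens)
print(segment_ids)
```

The classifier for this task reads the output at the [CLS] position and predicts the `IsNext` or `NotNext` label.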

By combining bidirectional training, the Transformer architecture, and a two-step learning procedure, BERT raised the bar in NLP, achieving state-of-the-art performance on numerous language understanding tasks when it was released.

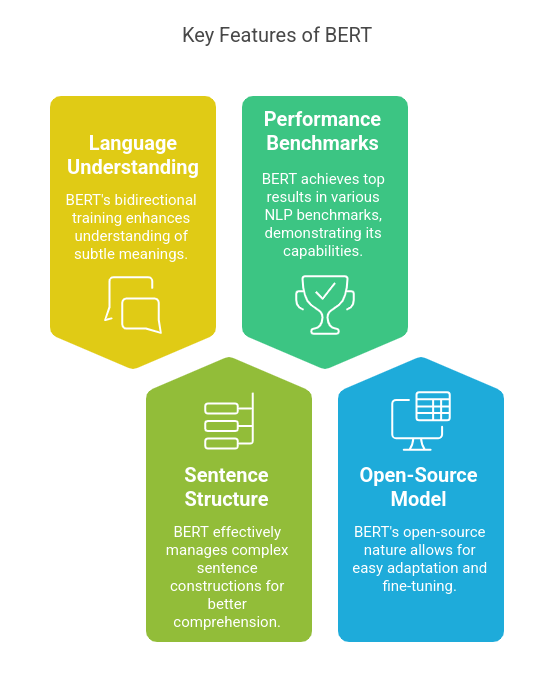

Key Features and Advantages of BERT

- Improved Understanding of Language Subtleties and Polysemy: BERT’s bidirectional training allows it to grasp the subtle meanings of words, especially those with multiple interpretations, by considering the context from both preceding and following words.

- Effective Handling of Complex Sentence Structures: By analyzing the entire sentence context, BERT adeptly manages intricate linguistic constructions, enhancing comprehension and processing accuracy.

- State-of-the-Art Performance in NLP Benchmarks: At its release, BERT achieved leading results on several NLP benchmarks, such as the General Language Understanding Evaluation (GLUE) and the Stanford Question Answering Dataset (SQuAD), demonstrating strong language understanding capabilities.

- Open-Source Availability and Adaptability: As an open-source model, BERT is accessible to researchers and developers, facilitating its adaptation and fine-tuning for a wide range of NLP tasks and applications.

Applications of BERT in Real-World Scenarios

- Search Engines: BERT improves search engines by better understanding user queries, resulting in more accurate and relevant search results.

- Chatbots and Virtual Assistants: Through a better understanding of context, BERT allows chatbots and virtual assistants to have more natural and coherent conversations with users.

- Sentiment Analysis: BERT’s deep contextual understanding enables more accurate sentiment classification, helping to interpret the emotional tone of textual data.

- Machine Translation and Text Summarization: BERT-based encoders are used as components in context-sensitive translation and summarization systems, which can improve the quality of translated text and summaries.

By leveraging these features and applications, BERT continues to play an essential role in advancing the field of Natural Language Processing.

Future of BERT and NLP Advancements

The field of Natural Language Processing (NLP) has advanced rapidly since the introduction of BERT.

These developments have led to more sophisticated models and applications, shaping the future of NLP.

1. Evolution into Advanced Models:

- RoBERTa: Building upon BERT, RoBERTa (Robustly Optimized BERT Pretraining Approach) enhances training methodologies by utilizing larger datasets and longer training periods, resulting in improved performance on various NLP tasks.

- ALBERT: A Lite BERT (ALBERT) reduces model size through cross-layer parameter sharing and factorized embedding parameters, improving efficiency while largely preserving performance.

- T5: The Text-To-Text Transfer Transformer (T5) reframes NLP tasks in a single text-to-text framework, allowing one model to handle tasks like translation, summarization, and question answering under one architecture.
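The effect of ALBERT’s embedding factorization, mentioned in the list above, can be checked with simple arithmetic. Instead of one vocabulary-by-hidden-size embedding matrix, the factorized version uses a smaller vocabulary-by-E table followed by an E-by-hidden-size projection. The sizes below are illustrative assumptions, chosen to be in the range of a base-sized model.

```python
def embedding_params(vocab_size, hidden_size, embedding_size=None):
    """Count embedding parameters, with or without factorization."""
    if embedding_size is None:
        return vocab_size * hidden_size  # one V x H matrix
    # V x E lookup table followed by an E x H projection
    return vocab_size * embedding_size + embedding_size * hidden_size


V, H, E = 30_000, 768, 128  # illustrative sizes, not taken from this article

full = embedding_params(V, H)
factorized = embedding_params(V, H, E)

print(full)        # 23040000
print(factorized)  # 3938304
```

With these numbers the embedding layer shrinks to roughly a sixth of its original size. Cross-layer parameter sharing reduces the parameter count further by reusing one set of layer weights across the encoder stack.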

2. Integration with Multimodal AI Systems:

NLP systems are increasingly being integrated with modalities other than text, including images and video.

This multimodal approach enables models to understand and produce content that combines language and imagery, which benefits applications such as image captioning and video analysis.

3. Optimizations for Efficiency and Deployment in Low-Resource Environments:

Efforts are also being made to optimize NLP models for deployment in environments with limited computational resources.

Methods like knowledge distillation, quantization, and pruning are used to reduce model size and inference time, making sophisticated NLP capabilities available on a wider range of devices and applications.
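Two of these methods are easy to sketch on a plain list of weights. The example below shows symmetric 8-bit quantization (storing small integers plus one scale factor) and magnitude pruning (zeroing the smallest weights). It is a toy illustration with made-up values and hypothetical helper names; production toolkits apply the same ideas per tensor or per channel and usually re-train or calibrate afterwards.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]


def prune_by_magnitude(weights, keep_fraction):
    """Zero out the smallest-magnitude weights, keeping the given fraction."""
    k = max(1, int(len(weights) * keep_fraction))
    threshold = sorted((abs(w) for w in weights), reverse=True)[k - 1]
    return [w if abs(w) >= threshold else 0.0 for w in weights]


weights = [0.82, -0.31, 0.05, -1.27, 0.44, 0.002]  # made-up values

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
pruned = prune_by_magnitude(weights, keep_fraction=0.5)

print(q)         # small integers, one byte each instead of a 32-bit float
print(restored)  # close to the original weights, but not identical
print(pruned)    # the three largest-magnitude weights survive
```

Quantization trades a small rounding error for a smaller memory footprint, while pruning produces zeros that can be stored sparsely or skipped at inference time.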

These developments point to a promising future for NLP, with models becoming more capable, versatile, and efficient, and therefore useful across a wider range of real-world applications.

Conclusion

BERT has revolutionized NLP, paving the way for advanced models like RoBERTa, ALBERT, and T5 while driving innovations in multimodal AI and efficiency optimization.

As NLP continues to evolve, mastering these technologies becomes essential for professionals aiming to excel in AI-driven fields.
