Have you ever asked yourself how companies make split-second decisions from real-time data?

From identifying fraud in banking to recommending content on streaming services, real-time analytics analyzes data as it’s created, giving instant insights.

But how does it happen, and why is it so important in today’s fast-moving digital age?

Let’s explore!

Definition of Real-Time Analytics

Real-time analytics is the process of gathering, processing, and analyzing data as it is produced, so that insights are available immediately and decisions can be made without delay.

Unlike conventional analytics, which relies on historical data and batch processing, real-time analytics continuously processes streaming data to discover patterns, detect anomalies, and trigger automated responses as events occur.

How Real-Time Analytics Works

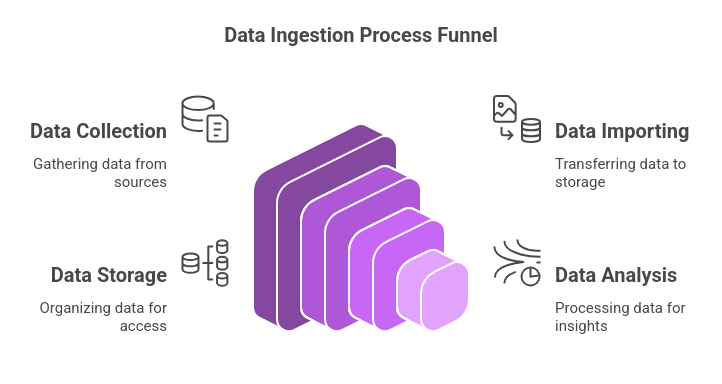

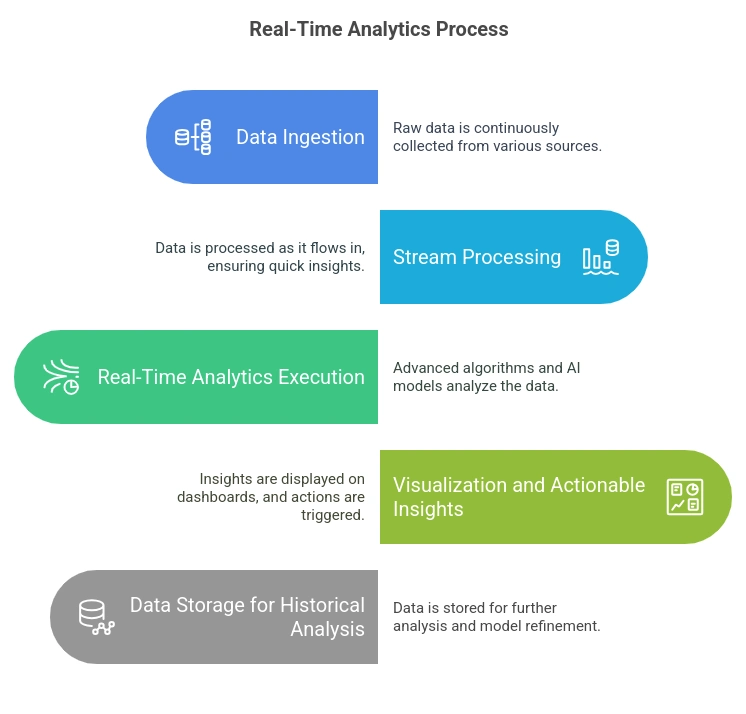

1. Data Ingestion:

- Raw data is continuously collected from multiple sources, such as IoT devices, social media feeds, transaction logs, sensors, or application logs.

- This data is typically unstructured or semi-structured and arrives in high volumes and velocities.

2. Stream Processing:

- Unlike batch processing, where data is analyzed after being stored, stream processing tools (e.g., Apache Kafka, Apache Flink, or Apache Storm) handle data as it flows in.

- This ensures that insights can be generated in milliseconds or seconds rather than minutes or hours.

3. Real-Time Analytics Execution:

- Advanced algorithms, AI models, and machine learning techniques process the incoming data to detect patterns, correlations, or anomalies.

- Statistical models, predictive analytics, and natural language processing (NLP) are often used to extract meaningful insights.

4. Visualization and Actionable Insights:

- The processed data is then displayed on real-time dashboards, alerting stakeholders about trends, risks, or opportunities.

- Automated actions can also be triggered, such as blocking a fraudulent transaction or adjusting supply chain logistics.

5. Data Storage for Historical Analysis:

- Although real-time analytics focuses on instant insights, data is often stored for further analysis and refinement of predictive models.

- Cloud storage and data lakes help organizations maintain long-term data records.
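The five steps above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production design: the function name `detect_anomalies`, the window size, the 3-sigma threshold, and the sample readings are all assumptions made for the example, and a rolling z-score stands in for the more advanced models a real system would use.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(readings, window_size=5, threshold=3.0):
    """Yield (value, is_anomaly) for each reading as it arrives."""
    window = deque(maxlen=window_size)        # keeps only recent history
    for value in readings:                    # step 1: ingest one event at a time
        is_anomaly = False
        if len(window) == window_size:        # steps 2-3: process and analyze
            mu, sigma = mean(window), pstdev(window)
            is_anomaly = sigma > 0 and abs(value - mu) > threshold * sigma
        yield value, is_anomaly
        window.append(value)


history = []   # step 5: keep every reading for later historical analysis
alerts = []    # step 4: readings that should trigger an alert or action

for value, is_anomaly in detect_anomalies([10, 11, 10, 12, 11, 50, 11, 10]):
    history.append(value)
    if is_anomaly:
        alerts.append(value)

print(alerts)  # [50]
```

Each reading is scored the moment it arrives, using only a small window of recent values, which is what keeps the per-event cost low enough for real-time use.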

Key Features of Real-Time Analytics

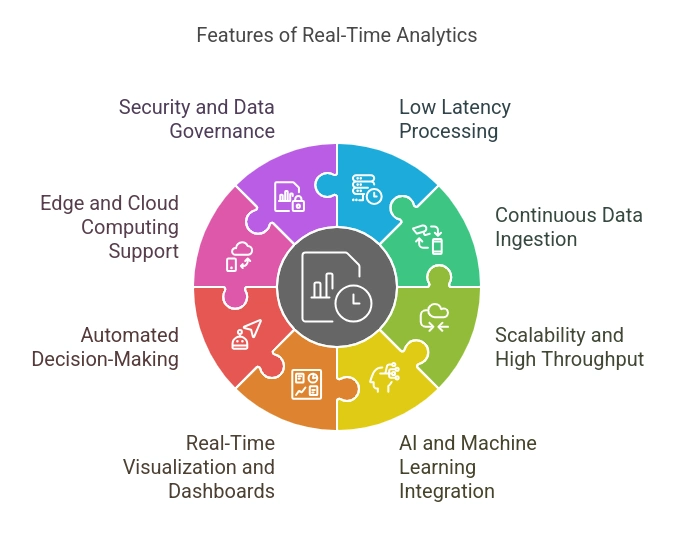

- Low Latency Processing

Real-time analytics processes data within milliseconds or seconds, enabling businesses to react instantly to critical events, such as fraud detection, system failures, or customer interactions.

- Continuous Data Ingestion

Unlike batch processing, real-time analytics continuously collects data from multiple sources, including IoT devices, social media, transactional systems, and sensors, ensuring up-to-the-moment insights.

- Scalability and High Throughput

Modern real-time analytics systems use distributed computing and cloud infrastructure to process large data streams efficiently and keep running smoothly even under peak loads.

- AI and Machine Learning Integration

Advanced analytics models powered by AI and ML enhance real-time decision-making by detecting anomalies, predicting trends, and automating responses without human intervention.

- Real-Time Visualization and Dashboards

Interactive dashboards and real-time reporting software offer immediate insights through dynamic graphs, charts, and alerts, enabling businesses to track performance and make data-driven decisions in real time.

- Automated Decision-Making

By integrating rule-based triggers and predictive analytics, real-time systems can automatically execute actions, such as blocking fraudulent transactions or adjusting inventory levels based on demand.

- Edge and Cloud Computing Support

Edge computing enables faster data processing closer to the source, while cloud platforms provide scalable, on-demand analytics capabilities, ensuring real-time insights across decentralized environments.

- Security and Data Governance

Robust encryption, access controls, and compliance frameworks ensure that real-time data is processed securely while adhering to industry regulations such as GDPR, HIPAA, and PCI-DSS.
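As an illustration of the rule-based triggers mentioned under Automated Decision-Making, the sketch below evaluates each incoming event against a small list of rules and returns the actions to take. The `Rule` class, the rule names, the event fields, and the thresholds are hypothetical and chosen only for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]   # returns True when the rule fires
    action: str                         # label of the action to execute


# Hypothetical rules mirroring the two examples in the text
RULES = [
    Rule(
        name="large_payment",
        condition=lambda e: e.get("type") == "payment" and e.get("amount", 0) > 5000,
        action="block_transaction",
    ),
    Rule(
        name="low_stock",
        condition=lambda e: e.get("type") == "inventory" and e.get("units", 0) < 10,
        action="reorder_stock",
    ),
]


def decide(event: dict) -> list:
    """Return the actions triggered by a single event."""
    return [rule.action for rule in RULES if rule.condition(event)]


print(decide({"type": "payment", "amount": 7500}))   # ['block_transaction']
print(decide({"type": "inventory", "units": 3}))     # ['reorder_stock']
print(decide({"type": "payment", "amount": 20}))     # []
```

Keeping rules as data makes it possible to add or adjust triggers without touching the code that processes the stream.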

Benefits of Real-Time Analytics

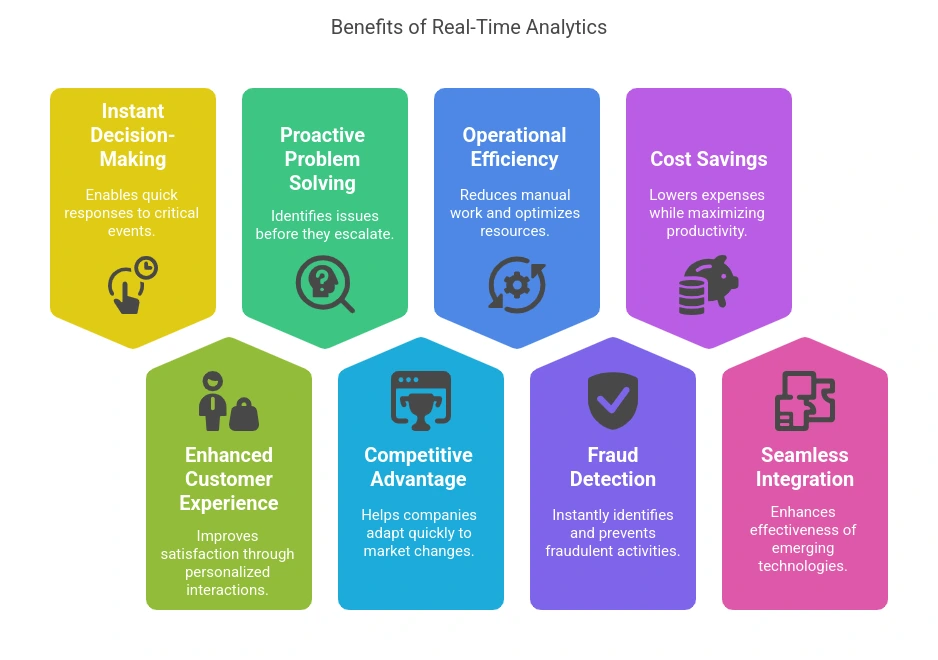

- Instant Decision-Making

Businesses can respond to critical events in real time, such as preventing fraud, mitigating cybersecurity threats, or optimizing supply chain operations, without delays.

- Enhanced Customer Experience

Personalized recommendations, dynamic pricing, and instant support improve customer satisfaction by delivering timely and relevant interactions based on real-time behaviour.

- Proactive Problem Solving

Organizations can identify and resolve issues before they escalate, such as detecting equipment failures in manufacturing or network anomalies in IT infrastructure.

- Competitive Advantage

Companies leveraging real-time analytics can stay ahead of competitors by quickly adapting to market trends, consumer demands, and operational challenges.

- Operational Efficiency

Continuous monitoring and automation reduce manual intervention, optimize resource allocation, and improve workflow efficiency across industries.

- Fraud Detection and Risk Mitigation

Financial institutions and e-commerce platforms use real-time analytics to detect fraudulent transactions instantly and prevent security breaches.

- Cost Savings

By optimizing stock levels, minimizing downtime, and eliminating redundant processes, companies can reduce operating expenses while improving productivity and revenue.

- Seamless Integration with Emerging Technologies

Real-time analytics makes AI, IoT, and blockchain applications more effective, supporting smarter automation and more precise predictive insights.

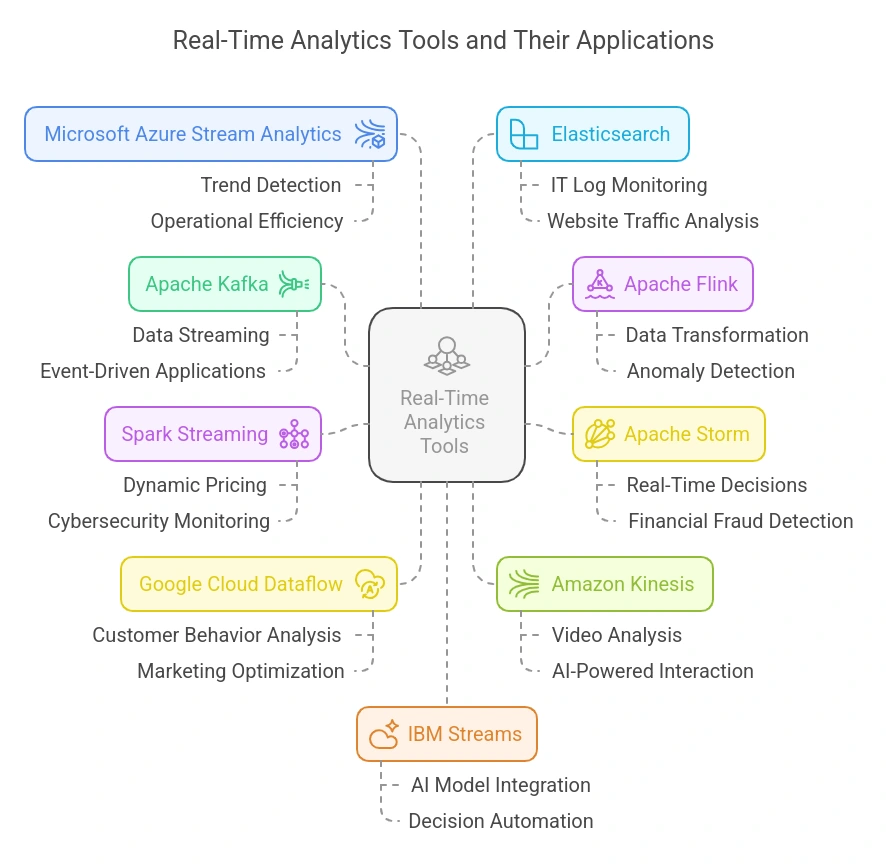

Tools Used for Real-Time Analytics and Their Uses

1. Apache Kafka

Use Case: Real-time data streaming and event-driven applications

How It Helps:

Apache Kafka is a distributed event-streaming platform that enables real-time data ingestion, processing, and distribution. It helps businesses handle high-throughput, low-latency messaging and ensures seamless data flow between applications. Kafka is widely used for real-time fraud detection, monitoring IoT sensor data, and log analytics.
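Conceptually, a Kafka topic partition behaves like an append-only log that each consumer group reads at its own position (offset). The in-memory model below illustrates that idea only. `TopicLog`, `append`, and `poll` are hypothetical names for this sketch and are not the Kafka client API.

```python
from collections import defaultdict


class TopicLog:
    """In-memory stand-in for a single topic partition (illustrative only)."""

    def __init__(self):
        self._records = []                   # append-only list of records
        self._offsets = defaultdict(int)     # consumer group -> next offset to read

    def append(self, record):
        """Add a record and return its offset in the log."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, group, max_records=10):
        """Return unread records for a group and advance that group's offset."""
        start = self._offsets[group]
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


log = TopicLog()
for amount in (120, 7500, 40):
    log.append({"amount": amount})

first = log.poll("fraud-detector", max_records=2)   # reads offsets 0 and 1
rest = log.poll("fraud-detector")                   # continues from offset 2
dashboard = log.poll("dashboard")                   # independent group starts at 0
print(len(first), len(rest), len(dashboard))        # 2 1 3
```

Because each group tracks its own offset, a fraud detector and a dashboard can consume the same events independently without interfering with each other.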

2. Apache Flink

Use Case: Stream processing and real-time analytics applications

How It Helps:

Apache Flink is a stream-processing framework for transforming and analyzing data in real time. It offers event-time processing, distributed computation, and fault tolerance. Companies use Flink to automate predictive maintenance, detect anomalies, and power real-time dashboards that monitor critical systems.
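To make event-time processing concrete, the sketch below groups events into fixed (tumbling) windows using the timestamp carried by each event rather than its arrival order. It is plain Python, not Flink's API. The function name, the 60-second window, and the sample events are assumptions, and the sketch leaves out the watermark and late-data handling that a real engine provides.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds=60):
    """Count events per window, keyed by the window's start time.

    Each event is a (timestamp_in_seconds, payload) pair; the window is
    chosen from the event's own timestamp, so out-of-order arrival does
    not change the result.
    """
    counts = defaultdict(int)
    for timestamp, _payload in events:
        window_start = (timestamp // window_seconds) * window_seconds
        counts[window_start] += 1
    return dict(sorted(counts.items()))


# Events arrive out of order: the one stamped 12 shows up after the one stamped 61
events = [(5, "a"), (61, "b"), (12, "c"), (130, "d"), (59, "e")]
print(tumbling_window_counts(events))  # {0: 3, 60: 1, 120: 1}
```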

3. Apache Storm

Use Case: Real-time computation and big data analytics

How It Helps:

Apache Storm is a system for real-time computation that can handle unbounded streams of data at scale. It is utilized in applications requiring real-time decisions, including detecting financial fraud, sentiment analysis for social media, and processing IoT sensor data.

4. Spark Streaming (Apache Spark)

Use Case: Micro-batch stream processing for real-time analytics

How It Helps:

Spark Streaming extends Apache Spark’s capabilities to process streaming data in near real-time. It integrates with Kafka, Flume, and other sources to perform transformations, aggregations, and machine learning tasks. It is commonly used in e-commerce for dynamic pricing, recommendation engines, and cybersecurity monitoring.
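Micro-batching, the model Spark Streaming uses, can be pictured as cutting the stream into short consecutive time slices and processing each slice as a small batch. The generator below is a plain-Python sketch of that idea, not Spark code. The function name, the 2-second interval, and the sample events are assumptions, and it expects events sorted by timestamp.

```python
def micro_batches(events, batch_interval=2.0):
    """Group (timestamp, value) events into consecutive fixed-length batches."""
    batch, batch_end = [], None
    for timestamp, value in events:
        if batch_end is None:
            batch_end = timestamp + batch_interval   # first batch starts at first event
        while timestamp >= batch_end:                # close every interval that has elapsed
            yield batch
            batch, batch_end = [], batch_end + batch_interval
        batch.append(value)
    if batch:
        yield batch                                  # flush the final partial batch


events = [(0.0, 1), (0.5, 2), (2.1, 3), (6.5, 4)]
totals = [sum(batch) for batch in micro_batches(events)]
print(totals)  # [3, 3, 0, 4]
```

The `0` in the result comes from an interval in which no events arrived. This also shows the trade-off behind "near real-time": a result is only available once its interval closes, so latency is bounded below by the batch interval.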

5. Google Cloud Dataflow

Use Case: Serverless data processing and real-time analytics in the cloud

How It Helps:

Google Cloud Dataflow is a fully managed service that processes large-scale real-time data using Apache Beam. It is highly scalable and used for analyzing customer behaviour, monitoring IT infrastructure, and optimizing marketing campaigns.

6. Amazon Kinesis

Use Case: Real-time data streaming and machine learning integration

How It Helps:

Amazon Kinesis lets companies collect, process, and analyze real-time data streams. It supports applications such as real-time video analysis, stock market tracking, and AI-powered customer interaction, and it integrates with AWS services like Lambda and Redshift for scalable cloud-based analytics.

7. Microsoft Azure Stream Analytics

Use Case: Real-time event processing and predictive analytics

How It Helps:

Azure Stream Analytics processes large volumes of real-time data from IoT devices, logs, and applications. It helps businesses detect trends, automate responses, and improve operational efficiency in industries like finance, healthcare, and manufacturing.

8. Elasticsearch (ELK Stack: Elasticsearch, Logstash, Kibana)

Use Case: Real-time search, log analytics, and visualization

How It Helps:

Elasticsearch is used for searching and analyzing large datasets in real time. When combined with Logstash (data ingestion) and Kibana (visualization), it enables businesses to monitor IT logs, detect cybersecurity threats, and analyze website traffic in real-time dashboards.

9. IBM Streams

Use Case: Advanced real-time analytics with AI and machine learning

How It Helps:

IBM Streams is a high-performance platform for processing massive real-time data streams. It integrates with AI models to automate decision-making in sectors like financial services, healthcare, and industrial automation.

10. Cloudera DataFlow

Use Case: IoT data processing and event-driven analytics

How It Helps:

Cloudera DataFlow provides real-time data gathering and processing from IoT devices, edge computing platforms, and enterprise applications. It supports predictive maintenance, smart city monitoring, and industrial automation.

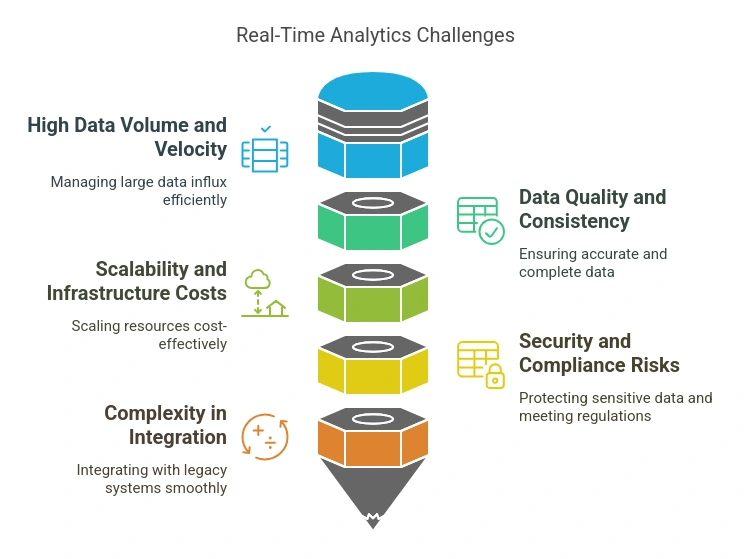

Challenges in Implementing Real-Time Analytics

1. High Data Volume and Velocity

Challenge:

Real-time analytics must handle enormous volumes of data in a short time, often from many sources such as IoT devices, social media, and transactional systems. Processing this data smoothly and without delay is a major challenge.

Solution:

- Use distributed processing frameworks like Apache Kafka, Apache Flink, or Spark Streaming to handle high-throughput data efficiently.

- Implement edge computing to process data closer to the source before sending it to central systems.

- Optimize data pipelines with batch and micro-batch processing to balance speed and accuracy.
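One way to picture the edge-computing suggestion above is a device that summarizes its raw readings locally and uploads only the summaries. The sketch below is a minimal example under that assumption; the function name, the chunk size, and the summary fields are hypothetical.

```python
from statistics import mean


def summarize_at_edge(readings, chunk_size=4):
    """Reduce raw readings to one summary per chunk before upload."""
    summaries = []
    for i in range(0, len(readings), chunk_size):
        chunk = readings[i:i + chunk_size]
        summaries.append({
            "min": min(chunk),
            "max": max(chunk),
            "mean": mean(chunk),
            "count": len(chunk),
        })
    return summaries


raw = [20, 22, 21, 23, 40, 42]
for summary in summarize_at_edge(raw):
    print(summary)
```

Six raw readings become two summary records here, which reduces the volume the central system has to ingest while still exposing the jump in the second chunk.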

2. Data Quality and Consistency

Challenge:

Because real-time analytics works on continuously streaming data, it is hard to guarantee accuracy, consistency, and completeness. Missing data, duplicates, or invalid entries can lead to erroneous insights.

Solution:

- Use data validation and cleansing tools such as Apache NiFi, Talend, or AWS Glue to preprocess incoming data.

- Implement schema enforcement to maintain structured data formats.

- Leverage machine learning models to detect and correct anomalies in real-time.
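Schema enforcement and de-duplication can be illustrated with a small filter placed in front of the analytics step. This is a sketch under assumed field names (`event_id`, `user_id`, `amount`); a real pipeline would typically rely on a schema registry or one of the tools listed above.

```python
# Assumed schema for the example: field name -> accepted type(s)
REQUIRED_FIELDS = {"event_id": str, "user_id": str, "amount": (int, float)}


def clean_stream(events):
    """Yield only events that match the schema and have not been seen before."""
    seen_ids = set()   # unbounded here; a real system would expire old IDs
    for event in events:
        valid = all(
            isinstance(event.get(field), expected)
            for field, expected in REQUIRED_FIELDS.items()
        )
        if not valid or event["amount"] < 0:
            continue                      # drop incomplete or invalid records
        if event["event_id"] in seen_ids:
            continue                      # drop duplicates
        seen_ids.add(event["event_id"])
        yield event


raw = [
    {"event_id": "e1", "user_id": "u1", "amount": 120.0},
    {"event_id": "e1", "user_id": "u1", "amount": 120.0},   # duplicate
    {"event_id": "e2", "user_id": "u2"},                     # missing amount
    {"event_id": "e3", "user_id": "u3", "amount": -5},       # invalid value
    {"event_id": "e4", "user_id": "u4", "amount": 30},
]
cleaned = list(clean_stream(raw))
print([e["event_id"] for e in cleaned])  # ['e1', 'e4']
```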

3. Scalability and Infrastructure Costs

Challenge:

Real-time analytics systems require robust infrastructure capable of scaling as data volume increases. However, maintaining on-premise solutions can be costly and resource-intensive.

Solution:

- Leverage cloud-based analytics solutions like Google Cloud Dataflow, AWS Kinesis, or Azure Stream Analytics to scale resources dynamically.

- Use serverless computing and containerized solutions (Kubernetes, Docker) to reduce operational overhead.

- Implement data partitioning and caching techniques to optimize performance and minimize costs.
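The partitioning and caching techniques in the last point can be sketched with the standard library alone. In this example, `partition_for` assigns each key to a partition with a stable hash so that work can be spread across workers, and `functools.lru_cache` avoids repeating a slow lookup. The function names, the partition count, and the lookup logic are assumptions made for illustration.

```python
import zlib
from functools import lru_cache


def partition_for(key: str, num_partitions: int = 4) -> int:
    """Map a key to a partition using a hash that is stable across runs."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


@lru_cache(maxsize=1024)
def customer_tier(customer_id: str) -> str:
    """Stand-in for a slow reference-data lookup (e.g. a database call)."""
    return "gold" if customer_id.endswith("7") else "standard"


# The same key always lands on the same partition, so one worker sees
# all events for a given user and can keep per-user state locally.
print(partition_for("user-42") == partition_for("user-42"))  # True
```

`zlib.crc32` is used instead of Python's built-in `hash()` because string hashes from `hash()` vary between interpreter runs by default, which would break a stable key-to-partition mapping.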

4. Security and Compliance Risks

Challenge:

Processing real-time data, particularly in sectors such as finance and healthcare, involves handling sensitive information. Security breaches, unauthorized access, and regulatory requirements (GDPR, HIPAA) add further complexity.

Solution:

- Implement end-to-end encryption for data in transit and at rest.

- Use role-based access control (RBAC) and multi-factor authentication to prevent unauthorized access.

- Adopt AI-driven security monitoring tools for threat detection in real-time.

- Ensure compliance with regulatory standards by using audit logs and secure data governance frameworks.
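Role-based access control and audit logging, two of the measures listed above, can be combined in a single check. The sketch below is deliberately minimal: the roles, permissions, and user names are hypothetical, and a real deployment would delegate this to an identity provider and tamper-resistant log storage.

```python
# Hypothetical role -> permitted actions mapping
ROLE_PERMISSIONS = {
    "analyst": {"read_dashboard"},
    "fraud_ops": {"read_dashboard", "block_transaction"},
    "admin": {"read_dashboard", "block_transaction", "export_raw_data"},
}

audit_log = []   # every decision is recorded, whether allowed or denied


def authorize(user: str, role: str, action: str) -> bool:
    """Check whether a role may perform an action and record the decision."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append(
        {"user": user, "role": role, "action": action, "allowed": allowed}
    )
    return allowed
```

For example, `authorize("dana", "analyst", "block_transaction")` returns `False` while `authorize("lee", "fraud_ops", "block_transaction")` returns `True`, and both attempts end up in `audit_log` for later review.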

5. Complexity in Integration with Existing Systems

Challenge:

Many organizations have legacy systems that were not built for real-time processing, making integration difficult. Ensuring seamless data flow across different platforms and applications is challenging.

Solution:

- Use APIs and middleware solutions to bridge legacy systems with modern real-time analytics platforms.

- Implement event-driven architectures to facilitate smoother data exchange between systems.

- Use ETL (Extract, Transform, Load) pipelines to move batch-based data into real-time systems gradually, rather than migrating everything at once.
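The event-driven approach can be sketched as a small publish/subscribe bus: rows from a legacy batch export are republished as individual events, and new real-time consumers subscribe without the legacy system knowing about them. `EventBus`, `legacy_export`, the topic name, and the order fields are all hypothetical names for this illustration.

```python
from collections import defaultdict


class EventBus:
    """Minimal in-process publish/subscribe bus (illustrative only)."""

    def __init__(self):
        self._handlers = defaultdict(list)   # topic -> list of callbacks

    def subscribe(self, topic, handler):
        self._handlers[topic].append(handler)

    def publish(self, topic, event):
        for handler in self._handlers[topic]:
            handler(event)


def legacy_export():
    """Stand-in for a batch export produced by a legacy system."""
    return [{"order_id": 1, "total": 40}, {"order_id": 2, "total": 900}]


bus = EventBus()
large_orders = []


def flag_large_order(event):
    """New real-time consumer: collect orders above an assumed threshold."""
    if event["total"] > 500:
        large_orders.append(event)


bus.subscribe("orders", flag_large_order)

# Bridge: republish each exported row as an individual event
for row in legacy_export():
    bus.publish("orders", row)

print(large_orders)  # [{'order_id': 2, 'total': 900}]
```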

Real-World Applications of Real-Time Analytics

1. Fraud Detection in Financial Transactions

Use Case:

Banks and payment gateways use real-time analytics to detect fraudulent transactions by analyzing user behaviour and transaction patterns.

Code Example:

Using Apache Kafka for real-time transaction streaming and fraud detection.

    import json

    from kafka import KafkaConsumer

    # Connect to Kafka topic for transactions
    consumer = KafkaConsumer(
        'transactions',
        bootstrap_servers='localhost:9092',
        value_deserializer=lambda x: json.loads(x.decode('utf-8'))
    )

    # Define fraud detection threshold
    FRAUD_THRESHOLD = 5000  # Any transaction above $5000 is flagged

    for message in consumer:
        transaction = message.value
        if transaction['amount'] > FRAUD_THRESHOLD:
            print(f"ALERT: Fraudulent transaction detected! User: {transaction['user_id']}, Amount: ${transaction['amount']}")

Expected Output (for a message such as {"user_id": 12345, "amount": 7500}):

    ALERT: Fraudulent transaction detected! User: 12345, Amount: $7500

2. Predictive Maintenance in Manufacturing (IoT Sensors)

Use Case:

Manufacturers use real-time sensor data to predict machinery failures before they happen, reducing downtime.

Code Example:

Using Pandas and Scikit-learn to process IoT sensor data.

    import random

    import pandas as pd
    from sklearn.ensemble import RandomForestClassifier

    # Simulate sensor data; the readings and labels are random placeholders
    data = pd.DataFrame({
        'temperature': [random.uniform(30, 100) for _ in range(10)],
        'vibration': [random.uniform(0, 10) for _ in range(10)],
        'machine_status': [random.choice([0, 1]) for _ in range(10)]  # 1 = Failure, 0 = Normal
    })

    # Train a simple predictive model
    model = RandomForestClassifier()
    model.fit(data[['temperature', 'vibration']], data['machine_status'])

    # New incoming sensor data
    new_data = pd.DataFrame({'temperature': [90], 'vibration': [7]})
    prediction = model.predict(new_data)

    if prediction[0] == 1:
        print("ALERT: Machine failure predicted! Immediate maintenance required.")
    else:
        print("Machine operating normally.")

Sample Output (the training labels here are random, so either message may appear):

    ALERT: Machine failure predicted! Immediate maintenance required.

3. Real-Time Traffic Monitoring and Optimization

Use Case:

Cities use real-time analytics to analyze traffic patterns and dynamically adjust signals to reduce congestion.

Code Example:

Using OpenCV and Python for real-time vehicle detection.

    import cv2

    # Load a pre-trained Haar cascade for vehicle detection.
    # The cascades bundled with OpenCV (cv2.data.haarcascades) cover faces,
    # eyes, bodies, and license plates but not cars, so a car cascade file
    # must be obtained separately and placed next to this script.
    car_cascade = cv2.CascadeClassifier('haarcascade_car.xml')
    if car_cascade.empty():
        raise FileNotFoundError("Could not load haarcascade_car.xml")

    # Open video feed (simulate real-time traffic monitoring)
    cap = cv2.VideoCapture('traffic.mp4')

    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            break

        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        cars = car_cascade.detectMultiScale(gray, 1.1, 3)

        print(f"Real-time vehicle count: {len(cars)}")
        if len(cars) > 10:
            print("Traffic congestion detected! Optimizing signal timing.")

        # Display the frame (Optional)
        # cv2.imshow('Traffic Feed', frame)
        # if cv2.waitKey(1) & 0xFF == ord('q'):
        #     break

    cap.release()
    cv2.destroyAllWindows()

Sample Output (counts depend on the video and the cascade used):

    Real-time vehicle count: 8
    Real-time vehicle count: 12
    Traffic congestion detected! Optimizing signal timing.

4. Stock Market Price Prediction & Alerts

Use Case:

Investors use real-time stock price data to predict trends and make informed trading decisions.

Code Example:

Using yfinance and Pandas to fetch stock prices and detect price spikes.

    import yfinance as yf

    # Fetch the latest intraday prices for Tesla (TSLA) at 1-minute intervals
    stock = yf.Ticker("TSLA")
    data = stock.history(period="1d", interval="1m")

    # At least two data points are needed for a comparison
    if len(data) < 2:
        raise SystemExit("Not enough price data returned to compare.")

    # Check for sudden price spikes
    current_price = data['Close'].iloc[-1]
    previous_price = data['Close'].iloc[-2]

    if current_price > previous_price * 1.02:  # If price increases by more than 2%
        print(f"ALERT: Tesla stock price surged to ${current_price:.2f}!")
    elif current_price < previous_price * 0.98:  # If price drops by more than 2%
        print(f"ALERT: Tesla stock price dropped to ${current_price:.2f}. Consider buying!")
    else:
        print(f"Stock price stable: ${current_price:.2f}")

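Sample Output (the price is illustrative; a move of more than 2% within one minute is uncommon, so the "stable" message is the usual result):

    ALERT: Tesla stock price surged to $745.50!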

5. Real-Time Sentiment Analysis on Social Media

Use Case:

Brands analyze Twitter data in real time to detect customer sentiment and respond to feedback instantly.

Code Example:

Using Tweepy and TextBlob to analyze recent tweets.

    import tweepy
    from textblob import TextBlob

    # Twitter API credentials
    API_KEY = "your_api_key"
    API_SECRET = "your_api_secret"
    ACCESS_TOKEN = "your_access_token"
    ACCESS_SECRET = "your_access_secret"

    # Authenticate with Twitter API
    auth = tweepy.OAuthHandler(API_KEY, API_SECRET)
    auth.set_access_token(ACCESS_TOKEN, ACCESS_SECRET)
    api = tweepy.API(auth)

    # Search recent tweets for a specific keyword
    # (a one-off query, not a continuous stream)
    keyword = "Tesla"
    tweets = api.search_tweets(q=keyword, lang="en", count=5)

    for tweet in tweets:
        # Polarity ranges from -1 (negative) to 1 (positive)
        sentiment = TextBlob(tweet.text).sentiment.polarity
        sentiment_label = "Positive" if sentiment > 0 else "Negative" if sentiment < 0 else "Neutral"
        print(f"Tweet: {tweet.text}\nSentiment: {sentiment_label}\n")

Sample Output (illustrative; the actual tweets and labels will differ):

    Tweet: Tesla stock is on fire today!
    Sentiment: Positive

    Tweet: Not happy with Tesla's latest update. Hope they fix it soon.
    Sentiment: Negative

Conclusion

Real-time analytics is transforming industries by enabling instant decision-making, optimizing operations, and enhancing user experiences.

From fraud detection in banking to predictive maintenance in manufacturing, businesses leveraging real-time data gain a significant competitive advantage.

As organizations increasingly adopt AI, machine learning, and cloud computing for real-time insights, professionals with expertise in data analytics and AI-powered decision-making are in high demand.

If you want to build a career in this field, Great Learning’s Data Science and Business Analytics Course can help you gain hands-on experience with real-time data processing, AI-driven analytics, and cloud-based solutions.

Explore our course today and take the next step toward mastering real-time analytics!