- What is Prompt Engineering?

- Key Elements of Prompt Engineering

- Technical Components of Prompt Engineering

- How Prompt Engineering Works?

- Prompt Engineering Examples

- Various Prompt Types for AI Applications

- Why Is Prompt Engineering Important?

- Prompt Engineering Challenges

- Prompt Engineering Best Practices

- Conclusion

Have you ever wondered how AI tools like ChatGPT or DeepAI Chat understand and respond to your questions? A large part of the answer lies in how the input is phrased, which is where prompt engineering comes in.

Prompt engineering is the skill of crafting effective inputs to get the best results from AI systems.

In this article, we’ll explore prompt engineering, why it’s important, and how you can use it with simple examples and code.

What is Prompt Engineering?

Prompt engineering is the practice of designing and refining the questions or instructions (prompts) given to AI models to elicit specific responses. A prompt is the query or instruction you give an AI system so that it produces accurate, insightful, and relevant results.

Unlike traditional programming, which specifies behavior through explicit algorithms, prompt engineering relies on language and context. The objective is to use precise, well-structured prompts to close the gap between human intent and machine output.


Key Elements of Prompt Engineering

- Clarity: Clearly state your query.

- Context: Give the model adequate background for the task.

- Constraints: Define parameters for the output, like word count or format.

- Examples: Show the preferred format or style to guide the AI.
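As a minimal sketch of how these four elements might be combined, the hypothetical helper below assembles a prompt string from a task, background context, constraints, and examples. The name `build_prompt`, its parameters, and the sample context are illustrative assumptions, not part of any library.

```python
def build_prompt(task, context="", constraints=None, examples=None):
    """Assemble a prompt from the four elements (hypothetical helper)."""
    parts = []
    if context:
        parts.append(f"Context: {context}")          # background knowledge
    parts.append(f"Task: {task}")                    # the clearly stated query
    if constraints:
        parts.append("Constraints:")
        parts.extend(f"- {c}" for c in constraints)  # e.g. word count, format
    if examples:
        parts.append("Examples of the desired output:")
        parts.extend(f"- {e}" for e in examples)     # show the preferred style
    return "\n".join(parts)


prompt = build_prompt(
    task="Write a motivational quote for someone facing challenges at work.",
    context="The reader is a junior developer preparing for a product launch.",
    constraints=["One sentence", "Under 25 words"],
    examples=["Every bug you fix today is a skill you keep tomorrow."],
)
print(prompt)
```

Keeping each element as a separate argument makes it easy to change one of them (for example, tightening a constraint) without rewriting the whole prompt.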

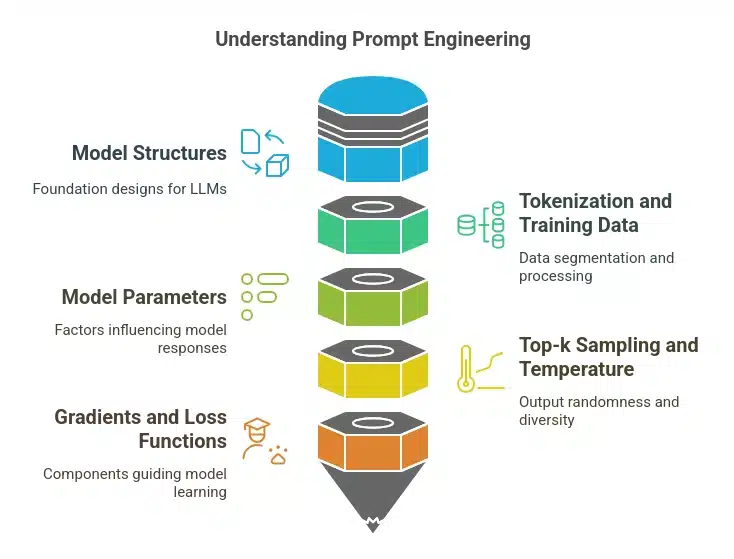

Technical Components of Prompt Engineering

- Model architectures: Transformer architectures are the foundation of large language models (LLMs) such as Meta’s LLaMA and GPT (Generative Pre-trained Transformer). A basic understanding of these underlying systems often helps in writing effective prompts.

- Tokenization and training data: LLMs are trained on enormous datasets, and they split incoming text into smaller pieces (tokens) for processing. The tokenization method (word-based, byte-pair encoding, etc.) can affect how a model interprets a prompt.

- Model parameters: A model’s response to a prompt is determined by its parameters (weights), which are adjusted during training. Knowing how these parameters shape model behavior can help in writing effective prompts.

- Top-k sampling and temperature: When generating a reply, models use settings such as temperature and top-k sampling to control the randomness and diversity of the output. For example, higher temperatures tend to produce more varied (but possibly less accurate) responses.

- Gradients and loss functions: At a deeper level, loss functions and gradients guide the model’s learning during training. They are not changed when you write a prompt, but they shape the behavior that the prompt later draws on.
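To make temperature and top-k sampling more concrete, here is a toy sketch in plain Python. It assumes the model has already produced a score (logit) for each candidate token; the function name `sample_next_token` and the scores themselves are made up for illustration and do not come from any real model or library.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Pick one token from a {token: score} dict (toy illustration).

    temperature must be greater than zero.
    """
    # Keep only the k highest-scoring tokens, if top_k is set.
    items = sorted(logits.items(), key=lambda kv: kv[1], reverse=True)
    if top_k is not None:
        items = items[:top_k]

    # Divide scores by the temperature, then apply a softmax.
    scaled = [score / temperature for _, score in items]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    probs = [w / total for w in weights]

    tokens = [tok for tok, _ in items]
    choice = rng.choices(tokens, weights=probs, k=1)[0]
    return choice, dict(zip(tokens, probs))


# Made-up scores for the word that follows "The sky is"
logits = {"blue": 4.0, "clear": 3.0, "falling": 1.0, "lasagna": -2.0}

_, cold = sample_next_token(logits, temperature=0.5)
_, hot = sample_next_token(logits, temperature=2.0)
print(f"P(blue) at T=0.5: {cold['blue']:.2f}")
print(f"P(blue) at T=2.0: {hot['blue']:.2f}")
```

A lower temperature concentrates probability on the top-scoring token, while a higher one spreads it across the alternatives. Setting `top_k` removes the lowest-scoring candidates before sampling.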

How Prompt Engineering Works?

Prompt engineering works by drawing on the patterns a model learned from its training data and on its ability to interpret context in human language.

1. Create a suitable prompt:

- Be clear. Always make sure the prompt is unambiguous.

- Use constraints to set boundaries on the output.

- Avoid leading questions. Leading questions can bias the model’s output.

2. Iterate and Evaluate:

- Write a first version of the prompt according to the requirement.

- Run the prompt through an AI model to generate a result.

- Evaluate the result.

- If the result is not satisfactory, refine the prompt and run it again.

- Continue refining and regenerating until you get the desired output.

3. Calibrate and fine-tune:

In addition to improving the prompt itself, the AI model may need to be calibrated or fine-tuned. This means adjusting the model’s parameters to better suit particular datasets or tasks. Although this is a more advanced technique, it can greatly improve the model’s performance for specific applications.
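The iterate-and-evaluate loop from step 2 can be sketched in a few lines. This is only an illustration: `refine_prompt` is a hypothetical helper, and `fake_generate`, `short_enough`, and `add_length_limit` are stand-ins for a real model call, a real quality check, and a real revision step.

```python
def refine_prompt(prompt, generate, is_acceptable, revise, max_rounds=5):
    """Repeat generate -> evaluate -> revise until the output passes.

    max_rounds is assumed to be at least 1.
    """
    for round_number in range(1, max_rounds + 1):
        output = generate(prompt)          # produce a result
        if is_acceptable(output):          # evaluate it
            return prompt, output, round_number
        prompt = revise(prompt, output)    # refine the prompt and try again
    return prompt, output, max_rounds


# Stand-ins for a real model call and a real quality check (assumptions).
def fake_generate(prompt):
    # Pretend the model only answers briefly when told to.
    if "one sentence" in prompt:
        return "short answer"
    return "a very long answer " * 20


def short_enough(output):
    return len(output.split()) <= 10


def add_length_limit(prompt, output):
    return prompt + " Answer in one sentence."


final_prompt, final_output, rounds = refine_prompt(
    "Explain what a token is.", fake_generate, short_enough, add_length_limit
)
print(rounds, final_prompt)
```

Capping the number of rounds keeps the loop from running forever when no revision satisfies the check.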

Prompt Engineering Examples

Let’s look at a few examples that show how different prompts affect AI outputs.

1. Example: Coming Up with an Inspirational Phrase

- Prompt: “Write a motivational quote for someone facing challenges at work.”

- Response: “Beautiful places are frequently reached via challenging routes. Accept the challenge because it will give you the strength to succeed.”

2. Example: Creative Writing with Variations

- Prompt 1: “Write a short story about a robot exploring a forest.”

- Response 1: “A lone robot named Luma strayed into the thick woods of a world where nature had taken back the land. It studied each leaf and bloom curiously, its metal frame reflecting sunshine. For the first time, it experienced an inexplicable feeling of astonishment.”

- Prompt 2 (With Constraints): “Write a story in exactly 50 words about a robot exploring a forest.”

- Response 2: “The robot’s sensors blinked as sunlight streamed through the tall trees. Every step unveiled secrets: rustling leaves, aromatic blossoms, and chattering birds. It realized for the first time that life existed outside of its circuits. The forest was an experience, vivid and untouchable, like a dream it didn’t even know it could have, rather than data.”
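A constraint such as an exact word count is easy to state, but a model may not always meet it, so it can be worth checking the output programmatically. The sketch below is one simple way to do that; the helper names and the sample text are illustrative assumptions, and words are counted naively by splitting on whitespace.

```python
def word_count(text):
    """Count whitespace-separated words."""
    return len(text.split())


def meets_exact_length(text, target_words):
    """Return True when the text has exactly target_words words."""
    return word_count(text) == target_words


draft = "The robot paused, listened to the birds, and walked on."
print(word_count(draft))
print(meets_exact_length(draft, 50))
```

A check like this can serve as the evaluation step when refining a prompt: if the response misses the target, the prompt is adjusted and run again.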

3. Example: Help with Programming

Prompt engineering is essential when asking AI for coding help.

- Prompt: “Write Python code to calculate the factorial of a number using recursion.”

- Response:

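```python
def factorial(n):
    """Return n! for a non-negative integer n, computed recursively."""
    if n == 0 or n == 1:  # base cases: 0! = 1! = 1
        return 1
    else:
        return n * factorial(n - 1)  # recursive step: n! = n * (n - 1)!


# Example usage
print(factorial(5))  # Output: 120
```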

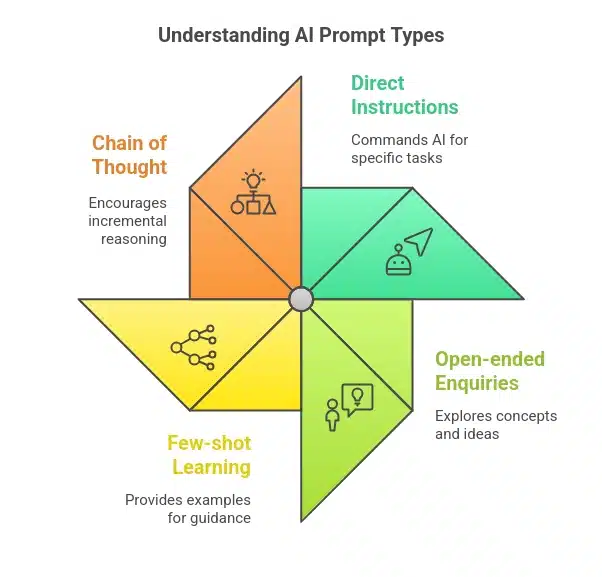

Various Prompt Types for AI Applications

There is no one-size-fits-all method for prompt engineering; it adapts to different tasks and fields. The main types of prompts and their uses are as follows:

1. Direct Instructions

These prompts instruct the AI to carry out a specific task.

- Example: “Translate this sentence to Spanish: ‘AI is transforming industries.'”

- Output: “La inteligencia artificial está transformando las industrias.”

2. Open-Ended Questions

Used for concept exploration or brainstorming.

- Example: “What are some creative uses of AI in education?”

- Output: “AI can create personalized learning experiences, design interactive quizzes, provide instant feedback, and even simulate real-world scenarios for experiential learning.”

3. Few-Shot Learning

Guiding the AI by including worked examples in the prompt.

Example: “Translate the following sentences to German:

1. The cat is on the roof. -> Die Katze ist auf dem Dach.

2. The sun is shining brightly. -> Die Sonne scheint hell.

3. The AI revolution is here. ->”

Output: “Die KI-Revolution ist hier.”
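A few-shot prompt like the one above can be generated from a list of example pairs. The helper below is a hypothetical sketch (the name `few_shot_prompt` and its parameters are assumptions); it numbers each solved example and leaves the final item open for the model to complete.

```python
def few_shot_prompt(instruction, examples, query):
    """Build a few-shot prompt from (input, output) pairs (hypothetical helper)."""
    lines = [instruction]
    for number, (source, target) in enumerate(examples, start=1):
        lines.append(f"{number}. {source} -> {target}")
    # Leave the final answer blank so the model completes the pattern.
    lines.append(f"{len(examples) + 1}. {query} ->")
    return "\n".join(lines)


prompt = few_shot_prompt(
    "Translate the following sentences to German:",
    [
        ("The cat is on the roof.", "Die Katze ist auf dem Dach."),
        ("The sun is shining brightly.", "Die Sonne scheint hell."),
    ],
    "The AI revolution is here.",
)
print(prompt)
```

Building the prompt from data makes it straightforward to add, remove, or swap examples while experimenting.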

4. Chain of Thought

The AI is prompted to reason step by step.

- Example: “How far does a train go if it runs at 60 miles per hour for three hours? Explain your thinking.”

- Output: “In an hour, the train covers 60 miles. It travels 60 x 3 = 180 miles in three hours. Consequently, the train travels 180 miles in three hours.”
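As a small sketch, a chain-of-thought prompt can be produced by appending a reasoning instruction to the question, and the arithmetic the model is expected to show (distance = speed x time) can be checked independently. The helper name `with_reasoning` and the exact wording of the instruction are assumptions for illustration.

```python
def with_reasoning(question):
    """Append a step-by-step instruction to a question (hypothetical helper)."""
    return f"{question} Explain your thinking step by step, then state the final answer."


speed_mph = 60
hours = 3
question = (
    f"How far does a train go if it runs at {speed_mph} miles per hour "
    f"for {hours} hours?"
)
print(with_reasoning(question))

# Independent check of the arithmetic: distance = speed * time.
expected_miles = speed_mph * hours
print(expected_miles)
```

Having the expected value on hand makes it easy to compare the final answer in the model's reasoning against a known result.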

Why Is Prompt Engineering Important?

Prompt engineering is important because it helps in:

- Enhancing model output relevance and accuracy: Effective prompt engineering helps the AI capture not only the task at hand but also its contextual nuances, leading to outputs that are both accurate and contextually appropriate.

- Bridging human-AI communication: By refining how we communicate with AI systems, we can better leverage their capabilities, transforming them from mere tools into collaborative partners in creativity and problem-solving.

- Solving complex problems: By explicitly defining tasks, prompts let AI models support coding, data analysis, or corporate decision-making. A well-defined prompt can considerably shorten the time it takes to reach a workable solution.

- Customization and flexibility: Through prompt engineering, users can tailor AI outputs to specific needs and preferences, whether that means generating content in a particular style or solving complex problems within defined parameters.

Prompt Engineering Challenges

Despite its strengths, prompt engineering has drawbacks.

- Ambiguity: Vague prompts produce irrelevant outputs.

- Bias: If the prompt is biased, the output may reinforce that bias.

- Trial and Error: Creating the right prompt might take several tries.

Prompt Engineering Best Practices

- Be Specific: State the task and the format and scope of the output.

- Provide Context: Include pertinent information to frame the assignment.

- Iterate and Experiment: Test and refine prompts.

- Set Constraints: Establish parameters such as tone or word count.

Conclusion

Prompt engineering combines art and science. Gaining proficiency in this area will enable you to make fuller use of AI systems and direct them to complete tasks with accuracy, originality, and relevance. Prompt engineering will continue to be a vital tool as AI develops, enabling people to interact with machines efficiently and produce excellent results.

If you begin using it today, you’ll quickly learn how prompt engineering can change your interactions with AI from simple inquiries to far more useful results. You can learn prompt engineering from our Free Prompt Engineering Course.

