- What is Natural Language Processing?

- How did Natural Language Processing come to exist?

- How does Natural Language Processing work?

- Why natural language processing is important?

- Why is Advancement in the Field of NLP Necessary?

- What can natural language processing do?

- What are some of the applications of NLP?

- How to learn Natural Language Processing (NLP)?

- Available Open-Source softwares in NLP Domain

- What are Regular Expressions?

- What is Text Wrangling?

- Text Cleansing

- What factors decide the quality and quantity of cleansing?

- How do we define text cleansing?

- What is Stemming?

- What are the various types of stemmers?

- What is lemmatization in NLP?

- What is Stop Word Removal?

- What is Rare Word Removal?

- What is Spell Correction?

- What is Dependency Parsing?

What is Natural Language Processing?

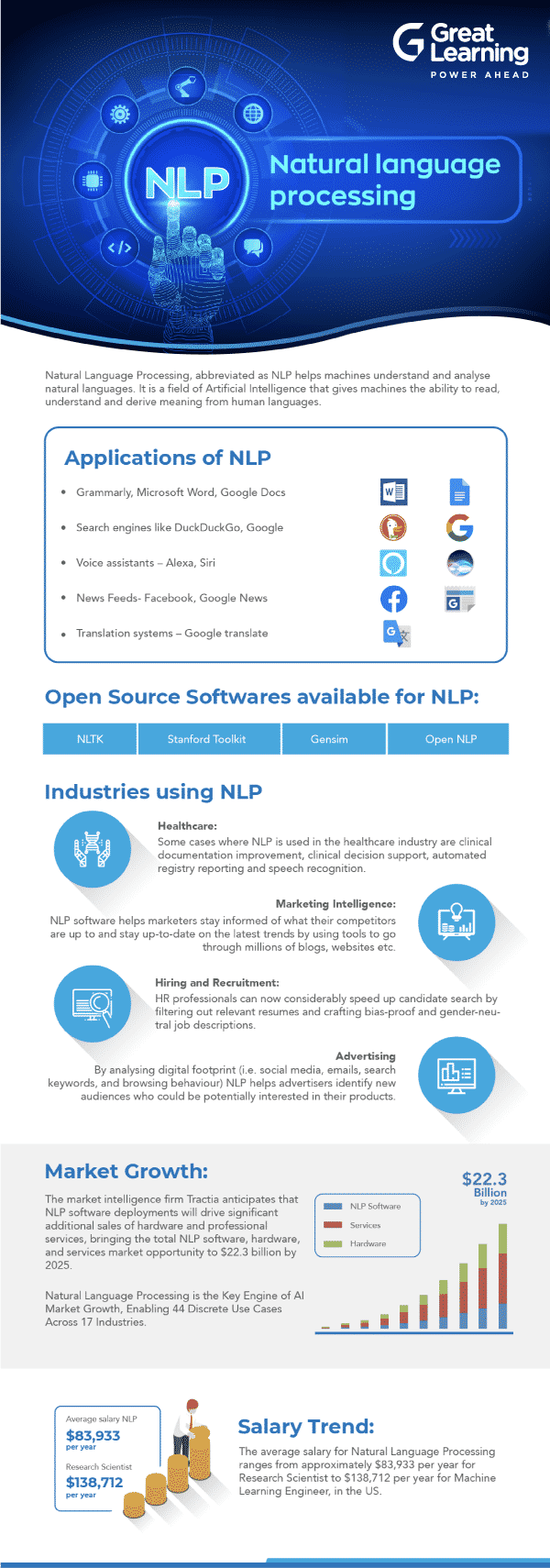

Natural language processing is the application of computational linguistics to build real-world applications which work with languages comprising of varying structures. We are trying to teach the computer to learn languages, and then also expect it to understand it, with suitable efficient algorithms.

All of us have come across Google’s keyboard which suggests auto-corrects, word predicts (words that would be used) and more. Grammarly is a great tool for content writers and professionals to make sure their articles look professional. It uses ML algorithms to suggest the right amounts of gigantic vocabulary, tonality, and much more, to make sure that the content written is professionally apt, and captures the total attention of the reader. Translation systems use language modelling to work efficiently with multiple languages.

Check out the free NLP course by Great Learning Academy to learn more.

How did Natural Language Processing come to exist?

People involved with language characterization and understanding of patterns in languages are called linguists. Computational linguistics kicked off as the amount of textual data started to explode tremendously. Wikipedia is the greatest textual source there is. The field of computational linguistics began with an early interest in understanding the patterns in data, Parts-of Speech(POS) tagging, easier processing of data for various applications in the banking and finance industries, educational institutions, etc.

How does Natural Language Processing work?

NLP aims at converting unstructured data into computer-readable language by following attributes of natural language. Machines employ complex algorithms to break down any text content to extract meaningful information from it. The collected data is then used to further teach machines the logics of natural language.

Natural language processing uses syntactic and semantic analysis to guide machines by identifying and recognising data patterns. It involves the following steps:

- Syntax: Natural language processing uses various algorithms to follow grammatical rules which are then used to derive meaning out of any kind of text content. Commonly used syntax techniques are lemmatization, morphological segmentation, word segmentation, part-of-speech tagging, parsing, sentence breaking, and stemming.

- Semantics: This is a comparatively difficult process where machines try to understand the meaning of each section of any content, both separately and in context. Even though semantical analysis has come a long way from its initial binary disposition, there’s still a lot of room for improvement. NER or Named Entity Recognition is one of the primary steps involved in the process which segregates text content into predefined groups. Word sense disambiguation is the next step in the process, and takes care of contextual meaning. Last in the process is Natural language generation which involves using historical databases to derive meaning and convert them into human languages.

Learn how NLP traces back to Artificial Intelligence.

Why natural language processing is important?

The amount of data generated by us keep increasing by the day, raising the need for analysing and documenting this data. NLP enables computers to read this data and convey the same in languages humans understand.

From medical records to recurrent government data, a lot of these data is unstructured. NLP helps computers to put them in proper formats. Once that is done, computers analyse texts and speech to extract meaning. Not only is the process automated, but also near-accurate all the time.

Why is Advancement in the Field of NLP Necessary?

NLP is the process of enhancing the capabilities of computers to understand human language. Databases are highly structured forms of data. Internet, on the other hand, is completely unstructured with minimal components of structure in it. In such a case, understanding human language and modelling it is the ultimate goal under NLP. For example, Google Duplex and Alibaba’s voice assistant are on the journey to mastering non-linear conversations. Non-linear conversations are somewhat close to the human’s manner of communication. We talk about cats in the first sentence, suddenly jump to talking tom, and then refer back to the initial topic. The person listening to this understands the jump that takes place. Computers currently lack this capability.

Prepare for the top Deep Learning interview questions.

What can natural language processing do?

Currently, NLP professionals are in a lot of demand, for the amount of unstructured data available is increasing at a very rapid pace. Underneath this unstructured data lies tons of information that can help companies grow and succeed. For example, monitoring tweet patterns can be used to understand the problems existing in the societies, and it can also be used in times of crisis. Thus, understanding and practicing NLP is surely a guaranteed path to get into the field of machine learning. For beginners, creating a NLP portfolio would highly increase the chances of getting into the field of NLP.

Check out the top NLP interview question and answers.

What are some of the applications of NLP?

- Grammarly, Microsoft Word, Google Docs

- Search engines like DuckDuckGo, Google

- Voice assistants – Alexa, Siri

- News feeds- Facebook,Google News

- Translation systems – Google translate

How to learn Natural Language Processing (NLP)?

To start with, you must have a sound knowledge of programming languages like Python, Keras, NumPy, and more. You should also learn the basics of cleaning text data, manual tokenization, and NLTK tokenization. The next step in the process is picking up the bag-of-words model (with Scikit learn, keras) and more. Understand how the word embedding distribution works and learn how to develop it from scratch using Python. Embedding is an important part of NLP, and embedding layers helps you encode your text properly. After you have picked up embedding, it’s time to lean text classification, followed by dataset review. And you are good to go!

Great Learning offers a Deep Learning certificate program which covers all the major areas of NLP, including Recurrent Neural Networks, Common NLP techniques – Bag of words, POS tagging, tokenization, stop words, Sentiment analysis, Machine translation, Long-short term memory (LSTM), and Word embedding – word2vec, GloVe.

Available Open-Source softwares in NLP Domain

- NLTK

- Stanford toolkit

- Gensim

- Open NLP

We will understand traditional NLP, a field which was run by the intelligent algorithms that were created to solve various problems. With the advance of deep neural networks, NLP has also taken the same approach to tackle most of the problems today. In this article we will cover traditional algorithms to ensure the fundamentals are understood.

We look at the basic concepts such as regular expressions, text-preprocessing, POS-tagging and parsing.

What are Regular Expressions?

Regular expressions are effective matching of patterns in strings. Patterns are used extensively to get meaningful information from large amounts of unstructured data. There are various regular expressions involved. Let us consider them one by one:

- (a period): All characters except for n are matched

- w: All [a-z A-Z 0-9] characters are matched with this expression

- $: Every expression ends with $

- : used to nullify the speciality of the special character.

- W (upper case W) matches any non-word character.

- s: This expression (lowercase s) matches a single white space character – space, newline,

return, tab, form [nrtf].

- r: This expression is used for a return character.

- S: This expression matches any non-white space character.

- t: This expression performs a tab operation.

- n: Used to express a newline character.

- d: Decimal digit [0-9].

- ^: Used at the start of the string.

What is Text Wrangling?

We will define it as the pre-processing done before obtaining a machine-readable and formatted text from raw data.

Some of the processes under text wrangling are:

- text cleansing

- specific pre-processing

- tokenization

- stemming or lemmatization

- stop word removal

Text Cleansing

Text collected from various sources has a lot of noise due to the unstructured nature of the text. Upon parsing of the text from the various data sources, we need to make sense of the unstructured property of the raw data. Therefore, text cleansing is used in the majority of the cleaning to be performed.

[optin-monster-shortcode id=”ehbz4ezofvc5zq0yt2qj”]

What factors decide the quality and quantity of cleansing?

- Parsing performance

- Source of data source

- External noise

Consider a second case, where we parse a PDF. There could be noisy characters, non ASCII characters, etc. Before proceeding onto the next set of actions, we should remove these to get a clean text to process further. If we are dealing with xml files, we are interested in specific elements of the tree. In the case of databases we manipulate splitters and are interested in specific columns. We will look at splitters in the coming section.

How do we define text cleansing?

In conclusion, processes done with an aim to clean the text and to remove the noise surrounding the text can be termed as text cleansing. Data munging and data wrangling are also used to talk about the same. They are used interchangeably in a similar context.

Sentence splitter

NLP applications require splitting large files of raw text into sentences to get meaningful data. Intuitively, a sentence is the smallest unit of conversation. How do we define something like a sentence for a computer? Before that, why do we need to define this smallest unit? We need it because it simplifies the processing involved. For example, the period can be used as splitting tool, where each period signifies one sentence. To extract dialogues from a paragraph, we search for all the sentences between inverted commas and double-inverted commas. . A typical sentence splitter can be something as simple as splitting the string on (.), to something as complex as a predictive classifier to identify sentence boundaries:

Tokenization

Token is defined as the minimal unit that a machine understands and processes at a time. All the text strings are processed only after they have undergone tokenization, which is the process of splitting the raw strings into meaningful tokens. The task of tokenization is complex due to various factors such as

- need of the NLP application

- the complexity of the language itself

For example, in English it can be as simple as choosing only words and numbers through a regular expression. For dravidian languages on the other hand, it is very hard due to vagueness present in the morphological boundaries between words.

What is Stemming?

Stemming is the process of obtaining the root word from the word given. Using efficient and well-generalized rules, all tokens can be cut down to obtain the root word, also known as the stem. Stemming is a purely rule-based process through which we club together variations of the token. For example, the word sit will have variations like sitting and sat. It does not make sense to differentiate between sit and sat in many applications, thus we use stemming to club both grammatical variances to the root of the word. Stemming is in use for its simplicity. But in the case of dravidian languages with many more alphabets, and thus many more permutations of words possible, the possibility of the stemmer identifying all the rules is very low. In such cases we use the lemmatization instead. Lemmatization is a robust, efficient and methodical way of combining grammatical variations to the root of a word.

What are the various types of stemmers?

- Porter Stemmer

- Lovins Stemmer

- Dawson Stemmer

- Krovetz Stemmer

- Xerox Stemmer

Porter Stemmer: Porter stemmer makes use of larger number of rules and achieves state-of-the-art accuracies for languages with lesser morphological variations. For complex languages, custom stemmers need to be designed, if necessary. On the contrary, a basic rule-based stemmer, like removing –s/es or -ing or -ed can give you a precision of more than 70 percent .

There exists a family of stemmers known as Snowball stemmers that is used for multiple languages like Dutch, English, French, German, Italian, Portuguese, Romanian, Russian, and so on.

In modern NLP applications usually stemming as a pre-processing step is excluded as it typically depends on the domain and application of interest. When NLP taggers, like Part of Speech tagger (POS), dependency parser, or NER are used, we should avoid stemming as it modifies the token and thus can result in an unexpected result.

What is lemmatization in NLP?

Lemmatization is a methodical way of converting all the grammatical/inflected forms of the root of the word. Lemmatization makes use of the context and POS tag to determine the inflected form(shortened version) of the word and various normalization rules are applied for each POS tag to get the root word (lemma).

A few questions to ponder about would be.

- What is the difference between Stemming and lemmatization?

- What would the rules be for a rule-based stemmer for your native language?

- Would it be simpler or difficult to do so?

What is Stop Word Removal?

Stop words are the most commonly occurring words, that seldom add weightage and meaning to the sentences. They act as bridges and their job is to ensure that sentences are grammatically correct. It is one of the most commonly used pre-processing steps across various NLP applications. Thus, removing the words that occur commonly in the corpus is the definition of stop-word removal. Majority of the articles and pronouns are classified as stop words. Many tasks like information retrieval and classification are not affected by stop words. Therefore, stop-word removal is not required in such a case. On the contrary, in some NLP applications stop word removal has a major impact. The stop word list for a language is a hand-curated list of words that occur commonly. Stop word lists for most languages are available online. Many ways exist to automatically generate the stop word list. A simple way to obtain the stop word list is to make use of the word’s document frequency. Words presence across the corpus is used as an indicator for classification of stop-words. Research has ascertained that we obtain the optimum set of stop words for a given corpus. NLTK comes with a loaded list for 22 languages.

One should consider answering the following questions.

- How does stop-word removal help?

- What are some of the alternatives for stop-word removal?

What is Rare Word Removal?

Some of the words that are very unique in nature like names, brands, product names, and some of the noise characters also need to be removed for different NLP tasks. Use of names in the case of text classification isn’t a feasible option to use. Even though we know Adolf Hitler is associated with bloodshed, his name is an exception. Usually, names, do not signify the emotion and thus nouns are treated as rare words and replaced by a single token. The rare words are application dependent, and must be chosen uniquely for different applications.

What is Spell Correction?

Finally, spellings should be checked for in the given corpus. The model should not be trained with wrong spellings, as the outputs generated will be wrong. Thus, spelling correction is not a necessity but can be skipped if the spellings don’t matter for the application.

In the next article, we will refer to POS tagging, various parsing techniques and applications of traditional NLP methods. We learned the various pre-processing steps involved and these steps may differ in terms of complexity with a change in the language under consideration. Therefore, understanding the basic structure of the language is the first step involved before starting any NLP project. We need to ensure, we understand the natural language before we can teach the computer.

What is Dependency Parsing?

Dependency parsing is the process of identifying the dependency parse of a sentence to understand the relationship between the “head” words. Dependency parsing helps to establish a syntactic structure for any sentence to understand it better. These types of syntactic structures can be used for analysing the semantic and the syntactic structure of a sentence. That is to say, not only can the parsing tree check the grammar of the sentence, but also its semantic format. The parse tree is the most used syntactic structure and can be generated through parsing algorithms like Earley algorithm, Cocke-Kasami-Younger (CKY) or the Chart parsing algorithm. Each of these algorithms have dynamic programming which is capable of overcoming the ambiguity problems.

Since any given sentence can have more than one dependency parse, assigning the syntactic structure can become quite complex. Multiple parse trees are known as ambiguities which need to be resolved in order for a sentence to gain a clean syntactic structure. The process of choosing a correct parse from a set of multiple parses (where each parse has some probabilities) is known as syntactic disambiguation.

Seize the opportunities that await you through our dynamic range of free courses. Whether you’re interested in Data Science, Cybersecurity, Management, Cloud Computing, IT, or Software, we offer a broad spectrum of industry-specific domains. Gain the essential skills and expertise to thrive in your chosen field and unleash your full potential.