What is Mean Squared Error?

In statistics, the Mean Squared Error (MSE) is defined as the mean, or average, of the squared differences between actual and estimated values.
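Written as a formula, the definition looks like the expression below. The symbols are not from the original text and are assumed here for illustration: $y_i$ is the i-th actual value, $\hat{y}_i$ is the corresponding estimated value, and $n$ is the number of observations.

$$
\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2
$$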

Contributed by: Swati Deval

To understand it better, let us take an example of the actual and forecasted demand for a brand of ice cream in a shop over a year.

| Month | Actual Demand | Forecasted Demand | Error | Squared Error |
|---|---|---|---|---|
| 1 | 42 | 44 | -2 | 4 |
| 2 | 45 | 46 | -1 | 1 |
| 3 | 49 | 48 | 1 | 1 |
| 4 | 55 | 50 | 5 | 25 |
| 5 | 57 | 55 | 2 | 4 |
| 6 | 60 | 60 | 0 | 0 |
| 7 | 62 | 64 | -2 | 4 |
| 8 | 58 | 60 | -2 | 4 |
| 9 | 54 | 53 | 1 | 1 |
| 10 | 50 | 48 | 2 | 4 |
| 11 | 44 | 42 | 2 | 4 |
| 12 | 40 | 38 | 2 | 4 |
| Sum | | | | 56 |

MSE = 56/12 = 4.6667
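The same calculation can be sketched in a few lines of Python. The two lists are copied from the table; the function name `mean_squared_error` is just a label chosen for this illustration, not a reference to any particular library.

```python
# Actual and forecasted monthly demand, copied from the table above.
actual = [42, 45, 49, 55, 57, 60, 62, 58, 54, 50, 44, 40]
forecast = [44, 46, 48, 50, 55, 60, 64, 60, 53, 48, 42, 38]


def mean_squared_error(actual_values, forecast_values):
    """Average of the squared differences between actual and forecast."""
    squared_errors = [(a - f) ** 2 for a, f in zip(actual_values, forecast_values)]
    return sum(squared_errors) / len(squared_errors)


mse = mean_squared_error(actual, forecast)
print(round(mse, 4))  # 4.6667
```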

From the above example, we can observe the following.

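- As forecasted values can be lower or higher than actual values, positive and negative differences can cancel each other, so a simple sum of the differences can be close to zero or even exactly zero. This can lead to the false interpretation that the forecast is accurate.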

- As we square each error, every term is non-negative, so the mean is positive whenever any estimate differs from the actual value. A lower mean indicates that the forecast is closer to the actual values.

- All errors in the above example have a magnitude between 0 and 2 except one, which is 5. Squaring widens the gap between this error and the others (25 versus at most 4), and this single high value pulls the mean up. So MSE is strongly influenced by large deviations, or outliers.
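The observations above can be checked numerically. The sketch below reuses the table's data; the idea of dropping month 4 is only an experiment added here to show how much that one large error contributes.

```python
# Actual and forecasted monthly demand, copied from the table above.
actual = [42, 45, 49, 55, 57, 60, 62, 58, 54, 50, 44, 40]
forecast = [44, 46, 48, 50, 55, 60, 64, 60, 53, 48, 42, 38]

errors = [a - f for a, f in zip(actual, forecast)]

# Signed errors partly cancel, so their mean understates the typical miss.
mean_error = sum(errors) / len(errors)

# Squared errors cannot cancel.
mse = sum(e ** 2 for e in errors) / len(errors)

# Illustrative experiment: leave out month 4, the only error of size 5.
errors_without_month4 = [e for month, e in enumerate(errors, start=1) if month != 4]
mse_without_month4 = sum(e ** 2 for e in errors_without_month4) / len(errors_without_month4)

print(round(mean_error, 4))          # 0.6667
print(round(mse, 4))                 # 4.6667
print(round(mse_without_month4, 4))  # 2.8182
```

Removing a single month out of twelve cuts the MSE by roughly 40%, which is the outlier sensitivity described in the last bullet.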

Because MSE indicates how close a forecast or estimate is to the actual value, it can be used as a measure to evaluate models in data science.

MSE as Model Evaluation Measure

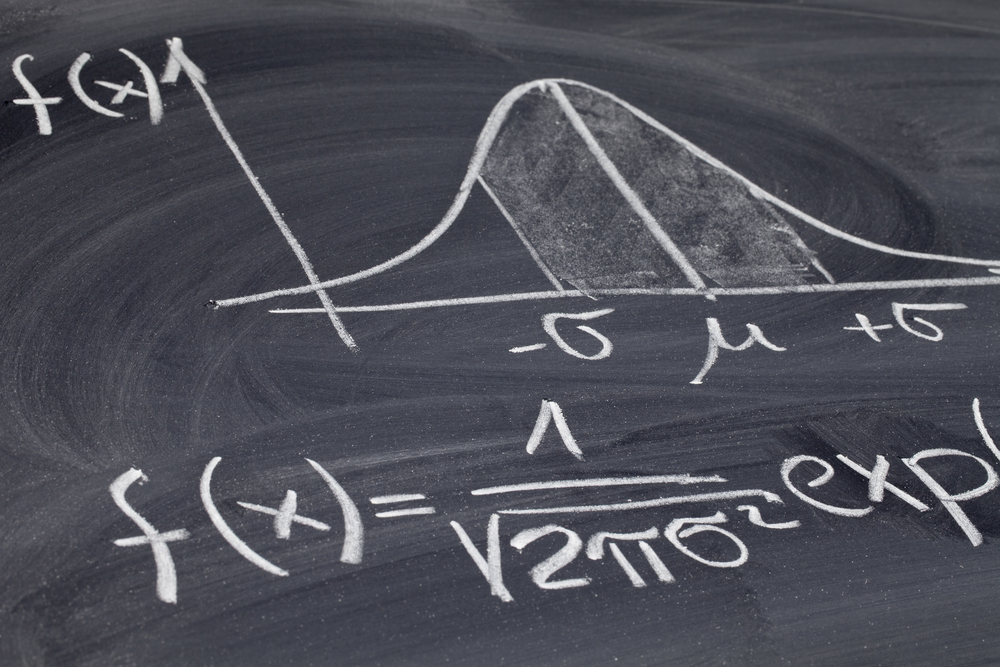

In the Supervised Learning method, the data set contains dependent or target variables along with independent variables. We build models using independent variables and predict dependent or target variables. If the dependent variable is numeric, regression models are used to predict it. In this case, MSE can be used to evaluate models.

In linear regression, we look for the line that best describes the given data points. Many lines can be drawn through the data, but MSE tells us which one describes it best.

For a given dataset, the number of data points is constant, say N. Let SSE1, SSE2, …, SSEn denote the sums of squared errors for n candidate lines. The MSE for each line is then SSE1/N, SSE2/N, …, SSEn/N.

Because N is the same for every line, the line with the smallest sum of squared errors is also the line with the minimum MSE. This is why many best-fit algorithms use the least-squares method to find the regression line.
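A minimal sketch of this argument follows. The data points and the three candidate lines are made up for illustration; real fitting routines search over all possible lines rather than a short list.

```python
# Hypothetical data points (not from the article), roughly following y = 2x.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

# Hypothetical candidate lines as (slope, intercept) pairs.
candidate_lines = [(2.0, 0.0), (1.5, 1.0), (2.5, -1.0)]


def sse(slope, intercept):
    """Sum of squared errors of the line y = slope * x + intercept."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


n = len(xs)  # the same N for every candidate line

best_by_sse = min(candidate_lines, key=lambda line: sse(*line))
best_by_mse = min(candidate_lines, key=lambda line: sse(*line) / n)

# Dividing every SSE by the same N cannot change which line is smallest.
print(best_by_sse, best_by_mse)  # (2.0, 0.0) (2.0, 0.0)
```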

MSE is expressed in squared units of the target variable, because each error is squared. To get back to the original units, the square root of MSE is often taken. This is called the Root Mean Squared Error (RMSE).

RMSE = SQRT(MSE)
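For the ice cream example, a short sketch using Python's standard `math.sqrt` gives an RMSE in the same units as demand:

```python
import math

# Actual and forecasted monthly demand, copied from the ice cream table.
actual = [42, 45, 49, 55, 57, 60, 62, 58, 54, 50, 44, 40]
forecast = [44, 46, 48, 50, 55, 60, 64, 60, 53, 48, 42, 38]

mse = sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)
rmse = math.sqrt(mse)

print(round(rmse, 4))  # 2.1602
```

An RMSE of about 2.16 can be read directly as "the forecast is typically off by about two units of demand", which is harder to see from the MSE of 4.6667.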

This is also used as a measure for model evaluation. Other measures, such as MAE and R2, are used for regression model evaluation as well. Let us see how these compare with MSE and RMSE.

Mean Absolute Error (MAE) is the mean of the absolute differences between actual and predicted values.

R2, or R squared, is the coefficient of determination. It is the ratio of the variance explained by the model to the total variance.
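Both measures can be computed by hand for the ice cream data. The sketch below assumes the common formulation of R2 as 1 minus the ratio of the sum of squared errors to the total sum of squares around the mean of the actual values; the variable names are chosen for this illustration.

```python
# Actual and forecasted monthly demand, copied from the ice cream table.
actual = [42, 45, 49, 55, 57, 60, 62, 58, 54, 50, 44, 40]
forecast = [44, 46, 48, 50, 55, 60, 64, 60, 53, 48, 42, 38]
n = len(actual)

# MAE: mean of the absolute errors.
mae = sum(abs(a - f) for a, f in zip(actual, forecast)) / n

# R2 (assumed form): 1 - SSE / SST.
mean_actual = sum(actual) / n
sse = sum((a - f) ** 2 for a, f in zip(actual, forecast))  # unexplained variation
sst = sum((a - mean_actual) ** 2 for a in actual)          # total variation
r2 = 1 - sse / sst

print(round(mae, 4))  # 1.8333
print(round(r2, 4))   # 0.9071
```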

| MSE / RMSE | MAE | R2 |
|---|---|---|
| Based on square of error | Based on absolute value of error | Based on proportion of variance explained by the model |
| Value lies between 0 and ∞ | Value lies between 0 and ∞ | Value usually lies between 0 and 1 (can be negative when the model fits worse than predicting the mean) |
| Sensitive to outliers, punishes larger errors more | Weights all errors in proportion to their size. Less sensitive to outliers | Also affected by outliers, as it is built from squared errors |
| Small value indicates better model | Small value indicates better model | Value near 1 indicates better model |

RMSE is always greater than or equal to MAE (RMSE >= MAE). A greater difference between them indicates greater variance in the individual errors in the sample.
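To illustrate this relationship, the sketch below compares two made-up error sets that share the same MAE. The numbers are hypothetical and chosen only to make the contrast obvious.

```python
import math


def mae(errors):
    """Mean of the absolute errors."""
    return sum(abs(e) for e in errors) / len(errors)


def rmse(errors):
    """Square root of the mean of the squared errors."""
    return math.sqrt(sum(e ** 2 for e in errors) / len(errors))


# Hypothetical errors: same total absolute error, spread differently.
even_errors = [2, -2, 2, -2]   # every miss has the same size
uneven_errors = [0, 0, 0, 8]   # one large miss, the rest exact

print(mae(even_errors), rmse(even_errors))      # 2.0 2.0
print(mae(uneven_errors), rmse(uneven_errors))  # 2.0 4.0
```

When every error has the same magnitude the two measures coincide; the more uneven the errors, the further the root of the mean squared error rises above MAE.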

Both R and Python have functions that give these values for a regression model. Which measure to choose depends on the data set and the problem being addressed. If we want to treat all errors equally, MAE is a better measure. If we want to give more weight to large errors, MSE/RMSE is better.

Conclusion

MSE is used to check how close estimates or forecasts are to actual values. The lower the MSE, the closer the forecast is to the actual values. It is used as a model evaluation measure for regression models, where a lower value indicates a better fit.
