- What Is Local Search In AI?

- The Step-by-Step Operation of Local Search Algorithm

- Applying Local Search Algorithm To Route Optimization Example

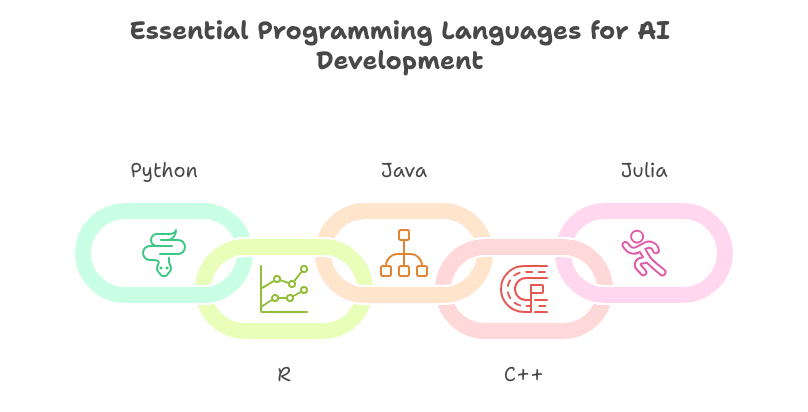

- Different Types of Local Search Algorithms

- Practical Application Examples for Local Search Algorithms

- Why Is Choosing The Right Optimization Type Crucial?

- Choose From Our Top Programs To Accelerate Your AI Learning

- Conclusion

- FAQs

Ever wondered how AI finds its way around complex problems?

It’s all thanks to the local search algorithm in artificial intelligence. This blog has everything you need to know about this algorithm.

We’ll explore how local search algorithms work, their applications across various domains, and how they contribute to solving some of the toughest challenges in AI.

What Is Local Search In AI?

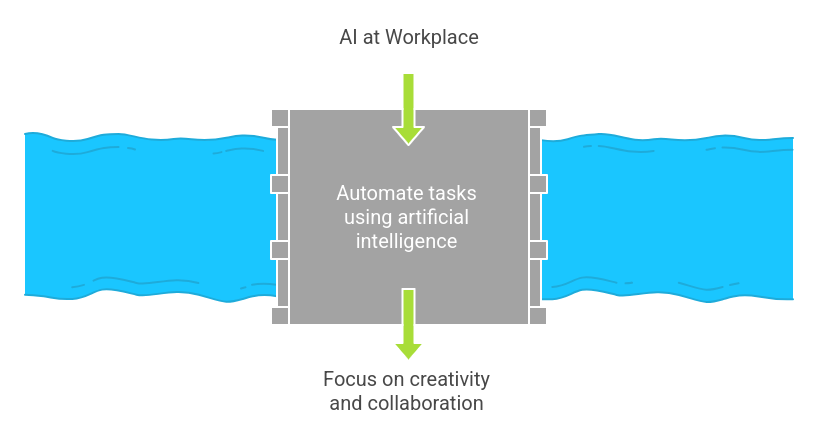

Local search in artificial intelligence (AI) is a family of optimization algorithms that start from a candidate solution and repeatedly move to a nearby, better one.

Hill climbing and simulated annealing are two well-known examples. Many local search methods are greedy: they look for the best solution within the immediate neighborhood of the current one rather than across the whole search space.

This approach isn’t limited to a single application; it is used in areas such as route optimization, job scheduling, and network design.

Here’s a breakdown of what local search entails:

1. Exploration and Evaluation

The primary goal of local search is to find the optimal outcome by systematically exploring potential solutions and evaluating them against predefined criteria.

2. User-defined Criteria

Users can define specific criteria or objectives the algorithm must meet, such as finding the most efficient route between two points or the lowest-cost option for a particular item.

3. Efficiency and Versatility

Local search’s popularity stems from its ability to quickly find good, often near-optimal, solutions in large search spaces with minimal user input. Its versatility allows it to handle complex problem-solving scenarios efficiently.

In essence, local search in AI offers a robust solution for optimizing systems and solving complex problems, making it an indispensable tool for developers and engineers.

The Step-by-Step Operation of Local Search Algorithm

1. Initialization

The algorithm starts by creating an initial solution or state. This could be randomly generated or chosen based on some heuristic knowledge. The initial solution serves as the starting point for the search process.

2. Evaluation

The current solution is evaluated using an objective function or fitness measure. This function quantifies how good or bad the solution is with respect to the problem’s optimization goals, providing a numerical value representing the quality of the solution.

3. Neighborhood Generation

The algorithm generates neighboring solutions from the current solution by applying minor modifications.

These modifications are typically local and aim to explore the nearby regions of the search space.

Various neighborhood generation strategies, such as swapping elements, perturbing components, or applying local transformations, can be employed.

4. Neighbor Evaluation

Each generated neighboring solution is evaluated using the same objective function used for the current solution. This evaluation calculates the fitness or quality of the neighboring solutions.

5. Selection

The algorithm selects one or more neighboring solutions based on their evaluation scores. The selection process aims to identify the most promising solutions among the generated neighbors.

Depending on the optimization problem, the selection criteria may involve maximizing or minimizing the objective function.

6. Acceptance Criteria

The selected neighboring solution(s) are compared to the current solution based on acceptance criteria.

These criteria determine whether a neighboring solution is accepted as the new current solution. Standard acceptance criteria include comparing fitness values or probabilities.

7. Update

If a neighboring solution meets the acceptance criteria, it replaces the current solution as the new incumbent solution. Otherwise, the current solution remains unchanged, and the algorithm explores additional neighboring solutions.

8. Termination

The algorithm iteratively repeats steps 3 to 7 until a termination condition is met. Termination conditions may include:

- Reaching a maximum number of iterations

- Achieving a target solution quality

- Exceeding a predefined time limit

9. Output

Once the termination condition is satisfied, the algorithm outputs the final solution. According to the objective function, this solution represents the best solution found during the search process.

10. Optional Local Optimum Escapes

Local search algorithms may incorporate mechanisms to escape local optima. These mechanisms may involve introducing randomness into the search process, diversifying search strategies, or accepting worse solutions with a certain probability.

Such techniques encourage the exploration of the search space and prevent premature convergence to suboptimal solutions.
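The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a definitive implementation: the function name `local_search`, the toy objective, and the neighbor rule are all assumptions made for the example, and the acceptance rule shown is the simplest one (accept only strict improvements, with no local optimum escape).

```python
def local_search(initial, objective, neighbors, max_iters=1000):
    """Minimize `objective` by repeatedly moving to the best improving neighbor."""
    current = initial                              # step 1: initialization
    current_score = objective(current)             # step 2: evaluation
    for _ in range(max_iters):                     # step 8: stop at an iteration cap
        candidates = neighbors(current)            # step 3: neighborhood generation
        if not candidates:
            break
        best = min(candidates, key=objective)      # steps 4-5: evaluate and select
        best_score = objective(best)
        if best_score >= current_score:            # step 6: acceptance criterion
            break                                  # no neighbor improves: local optimum
        current, current_score = best, best_score  # step 7: update
    return current, current_score                  # step 9: output


# Toy problem (hypothetical): minimize (x - 7)^2 over the integers.
def objective(x):
    return (x - 7) ** 2


def neighbors(x):
    return [x - 1, x + 1]


solution, score = local_search(initial=0, objective=objective, neighbors=neighbors)
print(solution, score)  # prints: 7 0
```

Because this toy objective has a single valley, the greedy rule is enough to reach the minimum. On objectives with several valleys the same loop can stop at a local optimum, which is where the escape mechanisms from step 10 come in.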


Applying Local Search Algorithm To Route Optimization Example

Let’s understand the steps of a local search algorithm in artificial intelligence using the real-world scenario of route optimization for a delivery truck:

1. Initial Route Setup

The algorithm starts with the delivery truck’s initial route, which could be generated randomly or based on factors like geographical proximity to delivery locations.

2. Evaluation of Initial Route

The current route is evaluated based on total distance traveled, time taken, and fuel consumption. This evaluation provides a numerical measure of the route’s efficiency and effectiveness.

3. Neighborhood Exploration

The algorithm generates neighboring routes from the current route by making minor adjustments, such as swapping the order of two adjacent stops, rearranging clusters of stops, or moving a stop to a different position in the sequence. Every neighboring route must still visit all required delivery stops.

4. Evaluation of Neighboring Routes

Each generated neighboring route is evaluated using the same criteria as the current route. This evaluation calculates metrics like total distance, travel time, or fuel usage for the neighboring routes.

5. Selection of Promising Routes

The algorithm selects one or more neighboring routes based on their evaluation scores. For instance, it might prioritize routes with shorter distances or faster travel times.

6. Acceptance Criteria Check

The selected neighboring route(s) are compared to the current route based on acceptance criteria. If a neighboring route offers improvements in efficiency (e.g., shorter distance), it may be accepted as the new current route.

7. Route Update

If a neighboring route meets the acceptance criteria, it replaces the current route as the new plan for the delivery truck. Otherwise, the current route remains unchanged, and the algorithm continues exploring other neighboring routes.

8. Termination Condition

The algorithm repeats steps 3 to 7 iteratively until a termination condition is met. This condition could be reaching a maximum number of iterations, achieving a satisfactory route quality, or running out of computational resources.

9. Final Route Output

Once the termination condition is satisfied, the algorithm outputs the final optimized route for the delivery truck. This route is the best one found for travel distance, time, or fuel consumption while satisfying all delivery requirements, although it is not guaranteed to be the global optimum.

10. Optional Local Optimum Escapes

To prevent getting stuck in local optima (e.g., suboptimal routes), the algorithm may incorporate mechanisms like perturbing the current route or introducing randomness in the neighborhood generation process.

This encourages the exploration of alternative routes and improves the likelihood of finding a globally optimal solution.

In this example, a local search algorithm in artificial intelligence iteratively refines the delivery truck’s route by exploring neighboring routes and selecting efficiency improvements.

The algorithm converges towards an optimal or near-optimal solution for the delivery problem by continuously evaluating and updating the route based on predefined criteria.
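To make the delivery example concrete, here is a small sketch that treats the route as an ordering of stops and uses "swap two stops" as the neighborhood. The coordinates in `STOPS`, the function names, and the choice of straight-line distance as the only evaluation criterion are assumptions for illustration; a real system would likely use road distances, time windows, and other constraints.

```python
import math
from itertools import combinations

# Hypothetical coordinates; index 0 is the depot, the rest are delivery stops.
STOPS = [(0, 0), (2, 6), (5, 1), (6, 5), (1, 3), (4, 4)]


def route_length(route):
    """Total straight-line distance: depot -> stops in order -> depot."""
    path = [0] + route + [0]
    return sum(math.dist(STOPS[a], STOPS[b]) for a, b in zip(path, path[1:]))


def swap_neighbors(route):
    """Every route obtained by swapping the positions of two stops."""
    result = []
    for i, j in combinations(range(len(route)), 2):
        neighbor = route[:]
        neighbor[i], neighbor[j] = neighbor[j], neighbor[i]
        result.append(neighbor)
    return result


def optimize_route(route, max_iters=1000):
    """Keep moving to the shortest neighboring route until none is shorter."""
    best, best_len = route, route_length(route)
    for _ in range(max_iters):
        candidate = min(swap_neighbors(best), key=route_length)
        candidate_len = route_length(candidate)
        if candidate_len >= best_len:   # no swap helps: stop at a local optimum
            break
        best, best_len = candidate, candidate_len
    return best, best_len


initial = [1, 2, 3, 4, 5]
final, final_len = optimize_route(initial)
print(f"initial length: {route_length(initial):.2f}")
print(f"final route: {final}, length: {final_len:.2f}")
```

The returned route is only guaranteed to be at least as short as the starting one and to have no shorter swap neighbor. It may or may not be the shortest possible route.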


Different Types of Local Search Algorithms

1. Hill Climbing

Definition

Hill climbing is an iterative algorithm that begins with an arbitrary solution and makes minor changes to it. At each iteration, it selects the neighboring state with the highest value (or lowest cost), gradually climbing toward a peak.

Process

- Start with an initial solution

- Evaluate the neighbor solutions

- Move to the neighbor solution with the highest improvement

- Repeat until no further improvement is found

Variants

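- Simple Hill Climbing: Examines neighbors one at a time and moves to the first one that improves on the current state.

- Steepest-Ascent Hill Climbing: Evaluates all neighbors and moves to the one with the greatest improvement.

- Stochastic Hill Climbing: Chooses randomly among the improving neighbors, often with a probability that depends on how much each one improves.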
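The three variants differ only in how they pick the next move, which the sketch below tries to show. The scoring function, the grid neighborhood, and all function names are made up for this example, and the stochastic variant here picks uniformly among uphill neighbors, which is one of several possible rules.

```python
import random


def score(p):
    """Toy objective to maximize; its single peak is at (3, -1)."""
    x, y = p
    return -(x - 3) ** 2 - (y + 1) ** 2


def neighbors(p):
    x, y = p
    return [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]


def simple_step(p):
    """Simple hill climbing: take the first neighbor that improves."""
    for n in neighbors(p):
        if score(n) > score(p):
            return n
    return None


def steepest_step(p):
    """Steepest ascent: evaluate all neighbors and take the best one."""
    best = max(neighbors(p), key=score)
    return best if score(best) > score(p) else None


def stochastic_step(p, rng):
    """Stochastic hill climbing: pick at random among the uphill neighbors."""
    uphill = [n for n in neighbors(p) if score(n) > score(p)]
    return rng.choice(uphill) if uphill else None


def climb(start, step):
    """Apply `step` until it reports that no improving move exists."""
    current = start
    while (nxt := step(current)) is not None:
        current = nxt
    return current


rng = random.Random(0)
results = {
    "simple": climb((0, 0), simple_step),
    "steepest": climb((0, 0), steepest_step),
    "stochastic": climb((0, 0), lambda p: stochastic_step(p, rng)),
}
print(results)
```

On this single-peak landscape all three variants end at `(3, -1)`; they differ in the path taken and in how many neighbors they evaluate per step.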

2. Simulated Annealing

Definition

Simulated annealing is inspired by the annealing process in metallurgy. It allows the algorithm to occasionally accept worse solutions so that it can escape local maxima and aim for a global maximum.

Process

- Start with an initial solution and initial temperature

- Repeat until the system has cooled:

  - Select a random neighbor.

  - If the neighbor is better, move to the neighbor.

  - If the neighbor is worse, move to the neighbor with a probability that depends on the temperature and the value difference.

  - Reduce the temperature according to a cooling schedule.

Key Concept

The probability of accepting worse solutions decreases as the temperature decreases.
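A common way to implement this acceptance rule for a minimization problem is the Metropolis criterion: a move that worsens the cost by delta is accepted with probability exp(-delta / T). The sketch below assumes that rule together with a geometric cooling schedule. The test function `bumpy`, the parameter values, and the function names are illustrative assumptions, not tuned recommendations.

```python
import math
import random


def acceptance_probability(delta, temperature):
    """Probability of accepting a move that changes the cost by `delta`."""
    if delta <= 0:
        return 1.0                            # improvements are always accepted
    return math.exp(-delta / temperature)     # worse moves get less likely as T drops


def simulated_annealing(cost, neighbor, start,
                        t_start=10.0, t_end=1e-3, alpha=0.95, seed=0):
    rng = random.Random(seed)
    current, current_cost = start, cost(start)
    best, best_cost = current, current_cost   # remember the best state seen so far
    t = t_start
    while t > t_end:                          # repeat until the system has cooled
        candidate = neighbor(current, rng)    # select a random neighbor
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        if rng.random() < acceptance_probability(delta, t):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = current, current_cost
        t *= alpha                            # geometric cooling schedule
    return best, best_cost


def bumpy(x):
    """Hypothetical cost function with several local minima."""
    return x ** 2 + 10 * math.sin(3 * x)


def random_step(x, rng):
    return x + rng.uniform(-1.0, 1.0)


best_x, best_cost = simulated_annealing(bumpy, random_step, start=8.0)
print(f"best x: {best_x:.3f}, cost: {best_cost:.3f}")
print(acceptance_probability(1.0, 10.0), acceptance_probability(1.0, 0.1))
```

The last line shows the key concept directly: the same worsening move is accepted far more often at temperature 10 than at temperature 0.1.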

3. Genetic Algorithm

Definition

A genetic algorithm is inspired by natural selection. It works with a population of solutions, applying crossover and mutation operators to evolve them over generations.

Process

- Initialize a population of solutions

- Evaluate the fitness of each solution

- Select pairs of solutions based on fitness

- Apply crossover (recombination) to create new offspring

- Apply mutation to introduce random variations

- Replace the old population with the new one

- Repeat until a stopping criterion is met

Key Concepts

- Selection: Mechanism for choosing which solutions get to reproduce.

- Crossover: Combining parts of two solutions to create new solutions.

- Mutation: Randomly altering parts of a solution to introduce variability.
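Here is a compact sketch of that loop on the classic OneMax toy problem, where fitness is simply the number of 1 bits in a bit string. The problem choice, tournament selection, single-point crossover, and every parameter value are assumptions picked to keep the example short. Keeping the best individual seen so far is also an addition for convenience, not part of the process listed above.

```python
import random


def genetic_algorithm(n_bits=20, pop_size=30, generations=60,
                      crossover_rate=0.9, mutation_rate=0.02, seed=1):
    rng = random.Random(seed)
    fitness = sum  # OneMax: fitness is the number of 1 bits

    # Initialize a population of random bit strings.
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    best = max(population, key=fitness)

    def tournament():
        """Selection: the fitter of two randomly drawn individuals."""
        a, b = rng.sample(population, 2)
        return a if fitness(a) >= fitness(b) else b

    for _ in range(generations):
        offspring = []
        while len(offspring) < pop_size:
            p1, p2 = tournament(), tournament()
            if rng.random() < crossover_rate:
                cut = rng.randint(1, n_bits - 1)      # single-point crossover
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Mutation: flip each bit with a small probability.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            offspring.append(child)
        population = offspring                        # replace the old population
        best = max(population + [best], key=fitness)  # track the best seen so far
    return best


best = genetic_algorithm()
print(sum(best), best)
```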

4. Local Beam Search

Definition

Local beam search keeps track of multiple states rather than one. At each iteration, it generates all successors of the current states and selects the best ones to continue.

Process

- Start with 𝑘 initial states.

- Generate all successors of the current 𝑘 states.

- Evaluate the successors.

- Select the 𝑘 best successors.

- Repeat until a goal state is found or no improvement is possible.

Key Concept

Unlike random restart hill climbing, local beam search focuses on a set of best states, which provides a balance between exploration and exploitation.
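The process can be sketched as follows on a tiny one-dimensional landscape. The `HEIGHTS` list, the start states, and the function names are invented for the example, and the stopping rule (stop when the best successor does not beat the best current state) is one simple reading of "no improvement is possible".

```python
# Hypothetical landscape: a local peak at index 2 and the global peak at index 8.
HEIGHTS = [1, 3, 5, 4, 2, 1, 3, 6, 9, 7, 4]


def score(i):
    return HEIGHTS[i]


def successors(i):
    """Indices one step to the left or right, kept inside the landscape."""
    return [j for j in (i - 1, i + 1) if 0 <= j < len(HEIGHTS)]


def local_beam_search(starts, k, max_iters=100):
    # Start with k states, best first.
    beam = sorted(set(starts), key=score, reverse=True)[:k]
    for _ in range(max_iters):
        # Generate all successors of the current k states in one shared pool.
        pool = {s for state in beam for s in successors(state)}
        if not pool:
            break
        # Evaluate the pool and keep the k best successors.
        candidates = sorted(pool, key=score, reverse=True)[:k]
        if score(candidates[0]) <= score(beam[0]):
            break  # no successor beats the best current state
        beam = candidates
    return beam[0]


best = local_beam_search(starts=[1, 6], k=2)
print(best, score(best))  # prints: 8 9
```

Because the successors of all states compete in one pool, the beam's slots end up around the stronger right-hand peak rather than staying split evenly between the two starting regions.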


Practical Application Examples for Local Search Algorithms

1. Hill Climbing: Job Shop Scheduling

Description

Job Shop Scheduling involves allocating resources (machines) to jobs over time. The goal is to minimize the time required to complete all jobs, known as the makespan.

Local Search Type Implementation

Hill climbing can be used to iteratively improve a schedule by swapping job orders on machines. The algorithm evaluates each swap and keeps the one that most reduces the makespan.

Impact

Efficient job shop scheduling improves production efficiency in manufacturing, reduces downtime, and optimizes resource utilization, leading to cost savings and increased productivity.

2. Simulated Annealing: Network Design

Description

Network design involves planning the layout of a telecommunications or data network to ensure minimal latency, high reliability, and cost efficiency.

Local Search Type Implementation

Simulated annealing starts with an initial network configuration and makes random modifications, such as changing link connections or node placements.

It occasionally accepts suboptimal designs to avoid getting stuck in local minima, and it lowers the temperature over time so that the search settles on a good configuration.

Impact

Applying simulated annealing to network design results in more efficient and cost-effective network topologies, improving data transmission speeds, reliability, and overall performance of communication networks.

3. Genetic Algorithm: Supply Chain Optimization

Description

Supply chain optimization focuses on improving the flow of goods and services from suppliers to customers, minimizing costs, and enhancing service levels.

Local Search Type Implementation

A genetic algorithm represents different supply chain configurations as chromosomes. It evolves these configurations using selection, crossover, and mutation to find solutions that balance cost, efficiency, and reliability.

Impact

Using genetic algorithms for supply chain optimization can lead to lower operational costs, reduced delivery times, and improved customer satisfaction, making supply chains more resilient and efficient.

4. Local Beam Search: Robot Path Planning

Description

Robot path planning involves finding an optimal path for a robot to navigate from a starting point to a target location while avoiding obstacles.

Local Search Type Implementation

Local beam search keeps track of multiple potential paths, expanding the most promising ones. It selects the best 𝑘 paths at each step to explore, balancing exploration and exploitation.

Impact

Optimizing robot paths improves navigation efficiency in autonomous vehicles and robots, reducing travel time and energy consumption and enhancing the performance of robotic systems in industries like logistics, manufacturing, and healthcare.


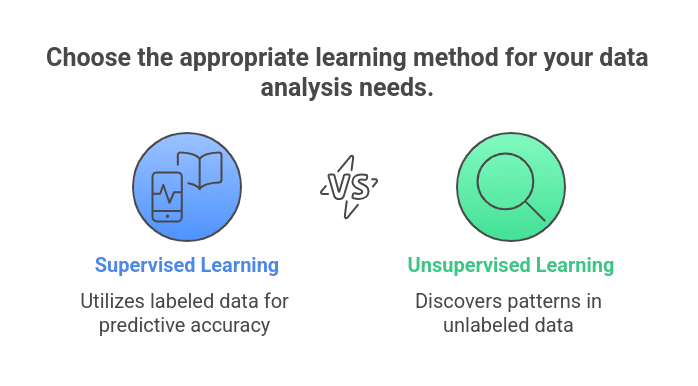

Why Is Choosing The Right Optimization Type Crucial?

Choosing the right optimization method is crucial for several reasons:

1. Efficiency and Speed

- Time Complexity

Different optimization methods have varying time complexities. For example, hill climbing is often faster for simple problems but may get stuck in local optima.

In contrast, simulated annealing might take longer but has a better chance of finding a global optimum.

- Computational Resources

Some methods require more computational power and memory. Genetic algorithms, which maintain and evolve a population of solutions, typically need more resources than simpler methods like hill climbing.

2. Solution Quality

- Local vs. Global Optima

Methods like hill climbing are prone to getting trapped in local optima, making them unsuitable for problems with many local peaks.

However, simulated annealing and genetic algorithms are designed to escape local optima and are better for finding global solutions.

- Problem Complexity

For highly complex problems with large search spaces, methods like local beam search or genetic algorithms are often more effective because they explore multiple paths simultaneously, increasing the chances of finding a high-quality solution.
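The local versus global optima point is easy to see on a small example. In the sketch below, plain hill climbing ends on a different peak depending on where it starts, and trying several start points (random-restart hill climbing) recovers the global peak. The `HEIGHTS` landscape and the function names are assumptions made up for this illustration.

```python
# Hypothetical landscape: a local peak at index 2 (height 5)
# and the global peak at index 8 (height 9).
HEIGHTS = [1, 3, 5, 4, 2, 1, 3, 6, 9, 7, 4]


def hill_climb(start):
    """Steepest-ascent hill climbing over neighboring indices."""
    current = start
    while True:
        options = [j for j in (current - 1, current + 1) if 0 <= j < len(HEIGHTS)]
        best = max(options, key=lambda j: HEIGHTS[j])
        if HEIGHTS[best] <= HEIGHTS[current]:
            return current          # no higher neighbor: a peak, possibly only local
        current = best


def restart_hill_climb(starts):
    """Run hill climbing from several start points and keep the best peak."""
    return max((hill_climb(s) for s in starts), key=lambda i: HEIGHTS[i])


print(hill_climb(1))                   # prints: 2  (stuck on the local peak)
print(hill_climb(6))                   # prints: 8  (reaches the global peak)
print(restart_hill_climb([1, 4, 6]))   # prints: 8
```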

3. Applicability to Problem Type

- Discrete vs. Continuous Problems

Some optimization methods are better suited to discrete problems (e.g., genetic algorithms for combinatorial problems), while others excel in continuous domains (e.g., gradient descent for differentiable functions).

- Dynamic vs. Static Problems

For dynamic problems where the solution space changes over time, methods that adapt quickly (like genetic algorithms with real-time updates) are preferable.

4. Robustness and Flexibility

- Handling Constraints

Certain methods are better at handling constraints within optimization problems. For example, genetic algorithms can incorporate various constraints through their fitness functions, for instance by penalizing solutions that violate them.

- Robustness to Noise

In real-world scenarios where the data or objective function may be noisy, methods like simulated annealing, which can temporarily accept worse solutions, can provide more robust performance.

5. Ease of Implementation and Tuning

- Algorithm Complexity

Simpler algorithms like hill climbing are easier to implement and require fewer parameters to tune.

In contrast, genetic algorithms and simulated annealing involve more complex mechanisms and parameters (e.g., crossover rate, mutation rate, cooling schedule).

- Parameter Sensitivity

The performance of some optimization methods is highly sensitive to parameter settings. Choosing a method with fewer or less sensitive parameters can reduce the effort needed for fine-tuning.

Selecting the proper optimization method is essential for efficiently achieving optimal solutions, effectively navigating problem constraints, ensuring robust performance across different scenarios, and maximizing the utility of available resources.

Choose From Our Top Programs To Accelerate Your AI Learning

Master local search algorithms for AI with Great Learning’s Top Artificial Intelligence (AI) Courses.

Whether you’re delving into hill climbing or exploring genetic algorithms, our structured approach makes learning intuitive and enjoyable.

You’ll build a solid foundation in AI optimization techniques through practical exercises and industry-relevant examples.

Enroll now to be a part of this high-demand field.

| Programs | PGP – Artificial Intelligence & Machine Learning | PGP – Artificial Intelligence for Leaders | PGP – Machine Learning |
| --- | --- | --- | --- |
| University | The University Of Texas At Austin & Great Lakes | The University Of Texas At Austin & Great Lakes | Great Lakes |
| Duration | 12 Months | 5 Months | 7 Months |
| Curriculum | 10+ Languages & Tools, 11+ Hands-on Projects, 40+ Case Studies, 22+ Domains | 50+ Projects Completed, 15+ Domains | 7+ Languages and Tools, 20+ Hands-on Projects, 10+ Domains |
| Certifications | Get a Dual Certificate from UT Austin & Great Lakes | Get a Dual Certificate from UT Austin & Great Lakes | Certificate from Great Lakes Executive Learning |
| Cost | Starting at ₹7,319/month | Starting at ₹4,719/month | Starting at ₹5,222/month |


Conclusion

Here, we have covered how local search algorithms work in AI, the main types, and where they are applied.

To delve deeper into this fascinating field and acquire the most demanded skills, consider enrolling in Great Learning’s Post Graduate Program in Artificial Intelligence & Machine Learning.

With this program, you’ll gain comprehensive knowledge and hands-on experience, paving the way for lucrative job opportunities with the highest salaries in AI.

Don’t miss out on the chance to elevate your career in AI and machine learning with Great Learning’s renowned program.

FAQs

1. How do local search algorithms differ from global optimization methods?

Local search algorithms focus on finding good solutions within a local region of the search space, whereas global optimization methods aim to find the best solution across the entire search space.

A local search algorithm is often faster but may get stuck in local optima, whereas global optimization methods provide a broader exploration but can be computationally intensive.

2. How can local search algorithms be adapted for real-time decision-making?

Techniques such as online learning and adaptive neighborhood selection can help adapt local search algorithms for real-time decision-making.

By continuously updating the search process based on incoming data, these algorithms can quickly respond to changes in the environment and make good decisions in dynamic scenarios.

3. Are there open-source libraries for local search and optimization?

Yes, several open-source libraries and frameworks, such as Scikit-optimize, Optuna, and DEAP, implement a range of search and optimization techniques. DEAP, for example, focuses on evolutionary algorithms such as genetic algorithms.

These libraries offer a convenient way to experiment with different algorithms, customize their parameters, and integrate them into larger AI systems or applications.