Edited & Reviewed By-

Anuj Saini

(Director of Data Science, RPX)

Large Language Models (LLMs) such as GPT and BERT are reshaping the field of artificial intelligence.

These models can comprehend and generate human-like text, making them valuable in many real-world applications, from chatbots to content creation.

However, deploying and managing these models isn't easy.

It involves a series of steps to ensure they work smoothly and ethically, from the moment they're created to when they're actively used.

This guide will walk you through the end-to-end process of managing LLMs, covering everything from deployment and updates to monitoring performance and ensuring fairness.

Understanding Large Language Models (LLMs)

What are LLMs?

Large Language Models (LLMs) are powerful artificial intelligence (AI) systems that are designed to understand, generate, and respond to human language.

These models are built using vast amounts of text data and are trained to perform a variety of tasks, such as answering questions, translating languages, writing content, and even holding conversations.

Examples of popular LLMs include:

- GPT (Generative Pre-trained Transformer): A model created by OpenAI, known for its ability to generate human-like text.

- BERT (Bidirectional Encoder Representations from Transformers): A model developed by Google, focused on understanding the context of words in a sentence.

Purpose of LLMs

LLMs have several important purposes, especially in business and technology. Here’s how they help:

1. Enhancing Business KPIs (Key Performance Indicators):

LLMs can be used to improve business outcomes, such as raising customer satisfaction or increasing sales. For example, they can automate customer support, analyze customer feedback, and create marketing content.

2. Driving Innovation in AI Applications:

LLMs push the boundaries of what AI can do. They are at the heart of many exciting technologies, such as chatbots, virtual assistants, automated content creation, and even tools that assist in creative fields like writing, music, or art.

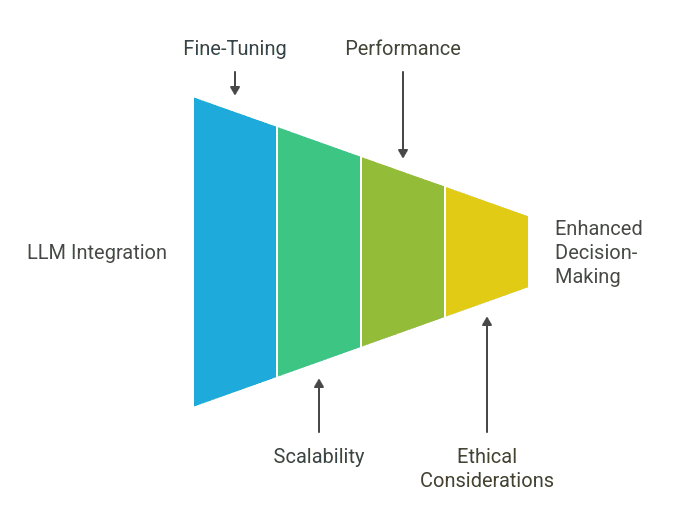

Operationalizing LLMs

Deploying LLMs in Real-World Applications

Once a Large Language Model (LLM) is developed and trained, the next step is making the model available to users or other systems, so it can begin performing tasks like answering questions or generating content.

From Development to Deployment:

- During the development phase, LLMs are often tested in environments like Jupyter notebooks, where data scientists experiment with the model.

- One common way to deploy models is by using APIs (Application Programming Interfaces), which allow other software to communicate with the model. For example, the model can be accessed by a website or app to generate responses in real time.

- Flask is a popular Python framework often used to create web applications that can serve models like LLMs through APIs.
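As a rough illustration of serving a model through an API with Flask, the sketch below exposes a single endpoint that accepts a prompt and returns generated text. The `/generate` route, the `prompt` and `completion` field names, and the `generate_text` placeholder are assumptions made for this example; a real service would call the loaded model where the placeholder echoes its input.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


def generate_text(prompt: str) -> str:
    """Hypothetical stand-in for the real model call."""
    # Assumption: a real service would invoke the loaded LLM here.
    return f"Echo: {prompt}"


@app.route("/generate", methods=["POST"])
def generate():
    # silent=True returns None instead of raising when the body is not valid JSON.
    payload = request.get_json(silent=True)
    prompt = payload.get("prompt") if isinstance(payload, dict) else None

    # Reject missing, non-string, or blank prompts with a client error.
    if not isinstance(prompt, str) or not prompt.strip():
        return jsonify({"error": "Field 'prompt' must be a non-empty string."}), 400

    return jsonify({"prompt": prompt, "completion": generate_text(prompt)})
```

Saved as `app.py` (a hypothetical filename), this could be started locally with `flask --app app run` on recent Flask versions. The built-in server is meant for development; a production deployment would normally sit behind a dedicated WSGI server.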

Key Considerations for Deployment:

- Scalability: The model should be able to handle a growing number of users without slowing down or crashing.

- Latency: It’s essential that the model responds quickly. High latency, meaning delays in responses, can cause users to lose interest.

- User Accessibility: The model should be easy for users to interact with, whether it’s through a chatbot, a virtual assistant, or a content generation tool.
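To make the latency consideration concrete, one option is to time a batch of requests and look at percentiles rather than the average, since a few slow responses are what users tend to notice. The sketch below is a minimal example using only the standard library; the function names and the choice of the 50th and 95th percentiles are assumptions for illustration.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latencies(call: Callable[[str], str], prompts: List[str]) -> List[float]:
    """Return the wall-clock latency, in seconds, of each call."""
    latencies = []
    for prompt in prompts:
        start = time.perf_counter()
        call(prompt)
        latencies.append(time.perf_counter() - start)
    return latencies


def latency_summary(latencies: List[float]) -> Dict[str, float]:
    """Summarize latencies by median (p50), 95th percentile (p95), and maximum.

    Expects at least two samples.
    """
    # quantiles(n=100) returns 99 cut points; index 49 is p50 and index 94 is p95.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "max": max(latencies)}
```

In practice, `call` would wrap a request to the deployed model, and the summary could be compared against a latency target agreed for the application.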

Refreshing and Updating Models

LLMs need to be updated regularly to stay accurate. As new data comes in, the patterns the model learned from older data may no longer hold, and its performance can degrade. This is why model refreshing is essential.

Why Refreshing Is Essential:

Over time, data patterns can change, which means the model may no longer handle the latest data as well as it handled the data it was trained on.

Steps for Refreshing:

- Pipeline Automation: Automating the process of updating models is crucial to make the refresh process faster and more efficient. This can be done by setting up automated pipelines.

- Versioning: It’s essential to keep track of different versions of models. When a model is refreshed, a new version is created so that older versions can be compared against it and restored if needed.

- Graceful Model Decommissioning: When updating models, the older versions should be retired smoothly to avoid issues with users who might still be interacting with them.
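The versioning and decommissioning steps above can be sketched as a small in-memory registry. This is only an illustration under stated assumptions: the `ModelRegistry` class, its method names, and the artifact paths are hypothetical, and a real setup would typically rely on a persistent model registry rather than a Python object.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class ModelRegistry:
    """Tracks registered model versions and which one is serving traffic."""

    versions: Dict[str, str] = field(default_factory=dict)  # version -> artifact location
    history: List[str] = field(default_factory=list)        # promoted versions, oldest first
    retired: Set[str] = field(default_factory=set)

    @property
    def active(self) -> Optional[str]:
        """The version currently serving traffic, if any."""
        return self.history[-1] if self.history else None

    def register(self, version: str, artifact: str) -> None:
        if version in self.versions:
            raise ValueError(f"Version {version!r} is already registered.")
        self.versions[version] = artifact

    def promote(self, version: str) -> None:
        """Make a registered, non-retired version the active one."""
        if version not in self.versions or version in self.retired:
            raise ValueError(f"Version {version!r} cannot be promoted.")
        self.history.append(version)

    def rollback(self) -> str:
        """Drop the active version and reactivate the latest non-retired one."""
        remaining = self.history[:-1]
        while remaining and remaining[-1] in self.retired:
            remaining.pop()
        if not remaining:
            raise RuntimeError("No earlier, non-retired version to roll back to.")
        self.history = remaining
        return remaining[-1]

    def retire(self, version: str) -> None:
        """Decommission a version, but never the one serving traffic."""
        if version == self.active:
            raise RuntimeError("Cannot retire the version that is serving traffic.")
        self.retired.add(version)
```

Refusing to retire the active version is one simple way to keep decommissioning graceful: traffic is moved to another version first, and only then is the old one retired.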

Monitoring LLM Performance

After deploying and refreshing LLMs, it’s essential to continuously monitor how well the model performs in real-world applications.

Monitoring Tools like MLflow:

Tools such as MLflow help track the model's performance by providing dashboards and analytics. These tools show how well the model is working and whether it needs adjustment.

- Tracking Key Metrics: It's essential to track metrics like accuracy, response time, and user engagement to make sure the model consistently meets expectations.

- Model Drift: Over time, the model might start to perform worse because the data it was trained on no longer reflects the data it sees in production. Detecting this drift early and retraining the model is important to maintain performance.
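One common way to quantify drift is the Population Stability Index (PSI), which compares the distribution of a numeric signal at training time with its distribution in production. The sketch below is an illustrative choice, not a method prescribed by this guide; the monitored signal (for example, prompt length), the bin count, and the epsilon floor are all assumptions.

```python
import math
from typing import List, Sequence


def _bin_fractions(values: Sequence[float], edges: List[float]) -> List[float]:
    """Fraction of values in each bin defined by the interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        # The number of edges strictly below v is the index of v's bin.
        counts[sum(v > e for e in edges)] += 1
    # Floor at a small epsilon so empty bins do not cause log(0) or division by zero.
    return [max(c / len(values), 1e-6) for c in counts]


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], bins: int = 10
) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    if not baseline or not current:
        raise ValueError("Both samples must be non-empty.")
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("Baseline needs at least two distinct values.")

    # Equal-width bins over the baseline range; outliers fall into the end bins.
    width = (hi - lo) / bins
    edges = [lo + i * width for i in range(1, bins)]

    expected = _bin_fractions(baseline, edges)
    actual = _bin_fractions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, but the threshold is a convention and should be tuned to the application.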

MLOps for LLMs

What is MLOps?

MLOps combines DevOps and machine learning practices to manage the lifecycle of models. It streamlines the process from development to deployment and monitoring, ensuring models are reliable, scalable, and continuously improved.

Applying DevOps Principles to Machine Learning:

MLOps applies DevOps practices to machine learning, automating tasks such as data collection, model training, testing, deployment, and monitoring. This helps teams manage machine learning projects efficiently at scale.

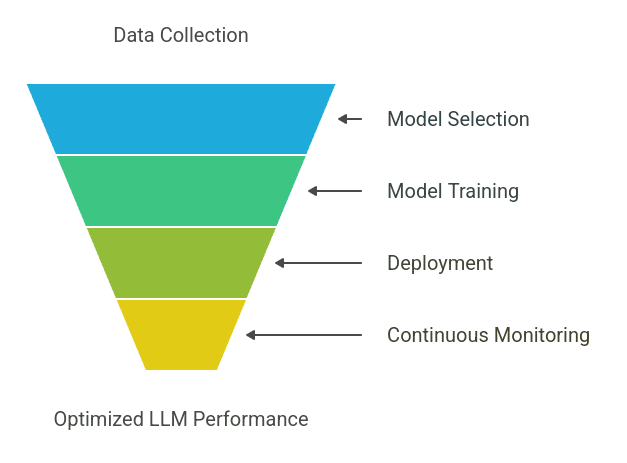

Components of the MLOps Lifecycle

MLOps covers the full lifecycle of a machine learning model, ensuring it is constantly evolving & performing optimally:

- Data Collection and Preparation: Gathering and cleaning data is the first step in the lifecycle. This includes ensuring the data is relevant and high-quality for training the model.

- Model Selection, Fine-Tuning, and Evaluation: Choosing the right machine learning model is critical. Once selected, it is fine-tuned using training data to improve performance.

- Deployment and Continuous Monitoring: After the model is deployed, continuous monitoring ensures it’s performing as expected. This involves tracking metrics like accuracy, response time, and user feedback, and making adjustments where necessary.

- CI/CD Pipeline for LLMs: A Continuous Integration (CI) & Continuous Delivery (CD) pipeline is essential in MLOps, automating the integration & deployment of machine learning models.

Importance of CI/CD

- Continuous Integration: Automatically tests & integrates code changes, ensuring the system remains stable.

- Continuous Delivery: Automatically builds, tests, and prepares code changes for release to production, improving speed and reliability.

- Building Effective Pipelines: CI/CD pipelines should support testing, staging, and production environments, ensuring the model functions properly at each stage before real-world deployment.

- Containerization: Containerization is a vital practice in MLOps, especially for deploying large models like LLMs, using tools like Docker to package the entire model ecosystem.

- Packaging the Entire Model Ecosystem: A container includes the model, dependencies, configurations, and the necessary environment, ensuring consistent behavior regardless of the deployment location.

- Ensuring Consistency Across Environments: Containers help the model behave consistently across all environments, such as development, testing, staging, and production, making deployments more reliable and predictable.
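As an example of the kind of automated test a CI/CD pipeline might run before promoting a model, the sketch below evaluates a candidate against a small set of prompts with known answers and blocks the release if quality is too low or has regressed. The function names, the exact-match metric, and the threshold values are assumptions chosen for illustration.

```python
from typing import Callable, List, Tuple

Model = Callable[[str], str]
GoldenSet = List[Tuple[str, str]]  # (prompt, expected answer) pairs


def exact_match_accuracy(model: Model, golden_set: GoldenSet) -> float:
    """Share of prompts whose answer matches, ignoring case and outer whitespace."""
    if not golden_set:
        raise ValueError("Golden set must not be empty.")
    hits = sum(
        model(prompt).strip().lower() == expected.strip().lower()
        for prompt, expected in golden_set
    )
    return hits / len(golden_set)


def quality_gate(
    candidate: Model,
    baseline: Model,
    golden_set: GoldenSet,
    min_accuracy: float = 0.8,     # hypothetical absolute floor
    max_regression: float = 0.02,  # hypothetical allowed drop versus the baseline
) -> Tuple[bool, str]:
    """Decide whether the candidate may move to the next pipeline stage."""
    cand = exact_match_accuracy(candidate, golden_set)
    base = exact_match_accuracy(baseline, golden_set)

    if cand < min_accuracy:
        return False, f"accuracy {cand:.2f} is below the floor {min_accuracy:.2f}"
    if base - cand > max_regression:
        return False, f"accuracy fell from {base:.2f} to {cand:.2f}"
    return True, f"accuracy {cand:.2f} (baseline {base:.2f})"
```

In a pipeline, the calling script would exit with a non-zero status when the gate returns `False`, which stops the deployment stage from running.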

Ethical and Responsible AI Practices

As the use of large language models (LLMs) becomes more widespread, ensuring responsible AI practices is essential. Key elements include:

1. Addressing Biases: AI models may inherit biases from training data, leading to unfair outcomes. It’s crucial to identify and mitigate these biases in both training and output.

2. Ensuring Fairness and Accountability: AI systems must be fair, transparent, and accountable. Businesses should ensure their models are explainable and trustworthy for users.

3. Guardrails for Generative AI: Generative AI, like LLMs, has the potential for misuse, especially when it comes to generating harmful or deceptive content. To reduce these risks, it's essential to implement safeguards:

4. Preventing Misuse: AI systems should have safeguards like moderation tools to block harmful or offensive content, ensuring ethical outputs.

5. Balancing Accuracy and Ethics: AI models must balance high accuracy with ethical considerations, adjusting outputs to align with societal values even if it slightly impacts performance.

6. Regulatory Compliance: As AI evolves, regulations and global standards are emerging to promote responsible use. Complying with them is essential for building trust and supporting ethical development.

7. Data Privacy and AI Ethics: AI must adhere to data privacy laws (e.g. GDPR) and ethical guidelines, ensuring responsible handling of personal data and alignment with local regulations.
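As a minimal sketch of the safeguards described in this list, the example below checks model output against a blocklist and redacts email addresses before the text reaches the user. The blocked terms, the refusal message, and the email pattern are placeholders assumed for illustration; production systems usually combine dedicated moderation models with far more thorough detection of personal data.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical blocklist; a real deployment would use a maintained policy.
BLOCKED_TERMS = {"steal credentials", "make malware"}

# Simplified email pattern, for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

REFUSAL = "Sorry, I can't help with that request."


@dataclass
class GuardrailResult:
    allowed: bool
    text: str
    reasons: List[str]


def apply_guardrails(text: str) -> GuardrailResult:
    """Block text containing listed terms; otherwise redact email addresses."""
    lowered = text.lower()
    reasons = [f"blocked term: {term}" for term in sorted(BLOCKED_TERMS) if term in lowered]
    if reasons:
        # Replace the whole output rather than trying to edit around the match.
        return GuardrailResult(False, REFUSAL, reasons)

    redacted, count = EMAIL_RE.subn("[REDACTED EMAIL]", text)
    if count:
        reasons.append(f"redacted {count} email address(es)")
    return GuardrailResult(True, redacted, reasons)
```

Returning the reasons alongside the text keeps each decision auditable, which supports the accountability goals listed above.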


Future Trends in LLM Management

1. Technological Advancements

The evolution of LLMs is driven by continuous technological innovations. As LLMs become more complex, new developments are improving their capabilities:

2. Innovations Driving LLM Evolution:

- More Efficient Architectures: New architectures make LLMs more efficient in terms of training time and resource usage.

- Better Training Methods: Innovations in training techniques, such as transfer learning & unsupervised learning, are improving model performance and scalability.

3. Increasing Emphasis on Ethical AI by Businesses and Regulators:

Companies are facing growing pressure from both regulators & consumers to ensure that their AI systems are ethical, transparent, and aligned with societal values.

This trend pushes businesses to integrate ethical considerations into every stage of AI development, from design to deployment.

Conclusion

Managing large language models (LLMs) requires a comprehensive approach that blends cutting-edge technology with ethical considerations.

By adhering to responsible AI practices, businesses can deploy LLMs that are not only effective but also fair and transparent.

As AI develops, adopting automation and ethical development trends will be crucial for staying competitive.

For those interested in mastering these advancements and learning how to manage cutting-edge LLMs, consider enrolling in Great Learning's AI and ML course, which covers both the technical and ethical sides of AI, equipping you for a successful career in this area.