Problem Statement

Organisations with multiple cloud platforms and on-premise environments face challenges in managing DevOps activities from a centralised application with an intuitive user interface. They have to rely on the admins of the respective environments to run any manual build or deployment, or to get status reports on DevOps activities.


Proposed Solution

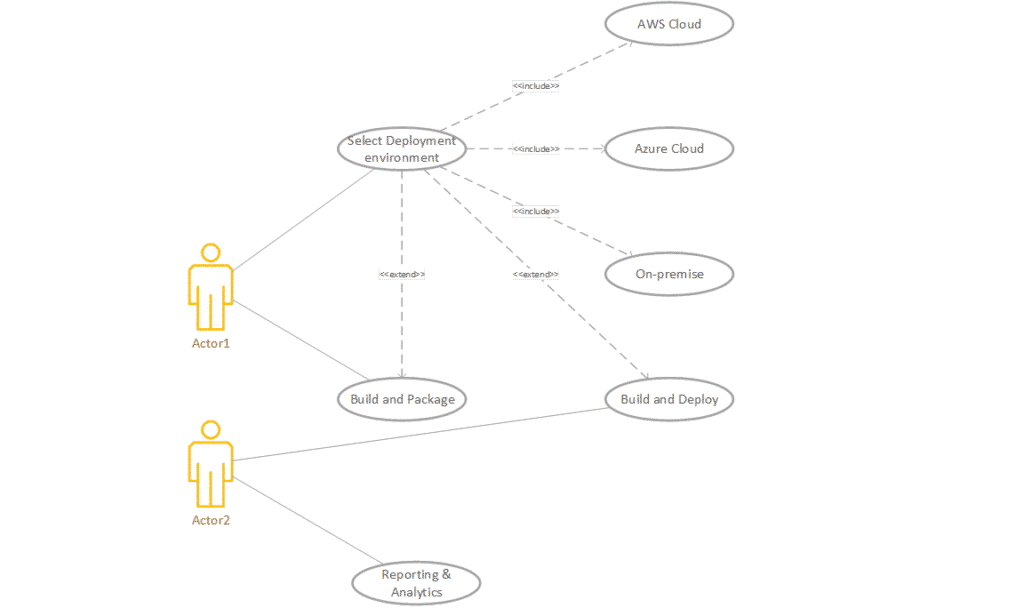

The proposal is to build an inter-cloud application that interacts with different cloud and on-premise environments and enables the development team to manage DevOps activities. The solution will offer a cognitive Azure Bot application to:

1. Select the Build and Deployment Environment

Choose the environment for the manual build and deployment. The following environments are in scope:

- Azure

- AWS

- On-premise

2. Build and Package the Application

List the applications that are available to build. Select an application, build its latest codebase, and package it using the build specification. Return the status to the user.

3. Build and Deploy the Application

Build and deploy the latest codebase for the given application. Return the deployment details and status to the user.

4. Analytics and Reporting

Report the build and deployment statistics to the end user as a downloadable file.

This solution, an Azure chatbot powered by the language understanding capability of LUIS, would enable users to take real-time and pre-emptive decisions when performing manual build and deployment activities in these environments.

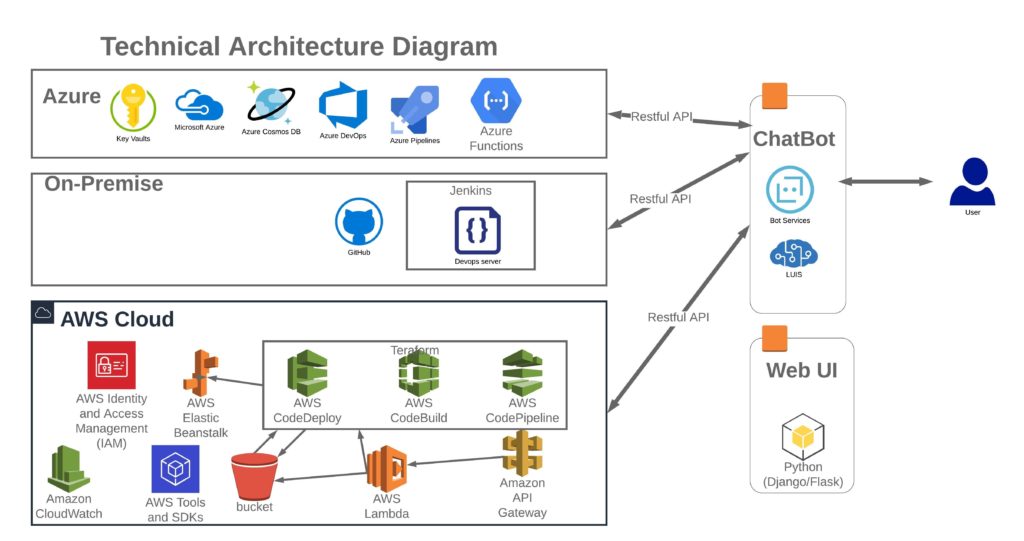

Architecture:

The proposed solution template and the application architecture for the Chatbot application are shown in the diagram.

The components of the architecture are explained briefly below:

Azure Platform

Azure DevOps:

An Azure build pipeline is set up in Azure DevOps. .NET and PHP applications are configured in the build and deployment pipeline.

Azure Functions:

Microservices are built using .NET Core Azure Function templates to access the build, deployment, and status reports.

Key Vaults:

This service stores secure data, such as Azure credentials and the personal access tokens used to call the pipeline REST API.
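
As a rough illustration of how a personal access token might be used against the pipeline REST API, the sketch below prepares a request that queues a build through the Azure DevOps Builds endpoint. It is written in Python for brevity, whereas the project's services use .NET Core. The function name `build_queue_request` and all argument values are hypothetical, and the token is passed in as a parameter here rather than read from Key Vault.

```python
import base64
import json
import urllib.request


def build_queue_request(organization, project, definition_id, pat):
    """Prepare (but do not send) a request that queues an Azure DevOps build."""
    url = (
        f"https://dev.azure.com/{organization}/{project}"
        "/_apis/build/builds?api-version=7.0"
    )
    # A personal access token is sent as the password of HTTP Basic
    # authentication, with an empty user name.
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    # The request body names the build definition (pipeline) to run.
    body = json.dumps({"definition": {"id": definition_id}}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would send the call. Keeping the token out of the function body mirrors the idea of holding secrets in Key Vault rather than in source code.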

AWS Platform

Amazon API Gateway:

Secure REST APIs created for AWS DevOps tasks are deployed in API Gateway, which acts as a single point of entry for the defined group of microservices.

AWS Elastic Beanstalk:

Elastic Beanstalk is used to deploy and manage web applications built on multiple technologies such as Java and PHP. It is an orchestration service offered by Amazon Web Services that deploys applications by orchestrating various AWS services, including EC2, S3, Simple Notification Service, CloudWatch, Auto Scaling, and Elastic Load Balancing.

S3 Bucket:

An S3 bucket is the source provider for the pipeline's build packages. Once a build package is generated, it is saved to the destination S3 bucket location.

AWS Lambda:

Microservices are built using AWS Lambda functions. .NET Core serverless applications are built using the AWS SDK. This service interfaces with the AWS CodePipeline, CodeBuild, and CodeDeploy API services.
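
To make the Lambda-to-CodePipeline interaction concrete, here is a minimal sketch of a handler that starts a pipeline execution when called through an API Gateway proxy integration. It is written in Python with boto3 for illustration only, since the project's functions are .NET Core. The request field `pipeline` and the helper `make_handler` are assumptions, not part of the original design.

```python
import json


def make_handler(codepipeline_client):
    """Return a Lambda-style handler bound to the given CodePipeline client."""

    def handler(event, context):
        # API Gateway proxy integration delivers the request body as a JSON string.
        payload = json.loads(event.get("body") or "{}")
        pipeline_name = payload.get("pipeline")
        if not pipeline_name:
            return {
                "statusCode": 400,
                "body": json.dumps({"error": "pipeline is required"}),
            }
        # start_pipeline_execution returns the ID of the new execution.
        result = codepipeline_client.start_pipeline_execution(name=pipeline_name)
        return {
            "statusCode": 200,
            "body": json.dumps({"executionId": result["pipelineExecutionId"]}),
        }

    return handler


def lambda_handler(event, context):
    # boto3 ships with the AWS Lambda Python runtime; it is imported lazily
    # so that this sketch can be loaded on machines without it.
    import boto3

    return make_handler(boto3.client("codepipeline"))(event, context)
```

Injecting the client keeps the handler logic testable without AWS credentials; only `lambda_handler` touches the real service.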

IAM:

- Policy to allow the Lambda function to access the CodePipeline API

- Policy to allow the Lambda function to access the CodeBuild API

- Policy to allow the Lambda function to access the S3 bucket
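
The three permissions listed above could be expressed in a single IAM policy document along the following lines. This is a sketch under assumptions: the specific actions, the statement IDs, and the bucket name are illustrative choices rather than the project's actual policies, and the wildcard resources would normally be narrowed to specific pipeline and project ARNs.

```python
import json


def lambda_devops_policy(bucket_name):
    """Build an IAM policy document covering CodePipeline, CodeBuild, and S3."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "CodePipelineAccess",
                "Effect": "Allow",
                "Action": [
                    "codepipeline:StartPipelineExecution",
                    "codepipeline:GetPipelineState",
                ],
                "Resource": "*",  # assumption: narrow to pipeline ARNs in practice
            },
            {
                "Sid": "CodeBuildAccess",
                "Effect": "Allow",
                "Action": ["codebuild:StartBuild", "codebuild:BatchGetBuilds"],
                "Resource": "*",  # assumption: narrow to project ARNs in practice
            },
            {
                "Sid": "BuildPackageBucketAccess",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


# Hypothetical bucket name, used only to render the document as JSON.
print(json.dumps(lambda_devops_policy("example-build-packages"), indent=2))
```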

CloudWatch:

CloudWatch provides the logs for monitoring the serverless Lambda function executions that run on AWS.

On-Premise Platform

The Jenkins build automation server is used for the on-premise DevOps configuration. .NET Core web applications are set up in Jenkins using build jobs and pipelines. Jenkins exposes an API for continuous build and pipeline deployment, which the Bot service consumes.

In this environment, the application source code is downloaded from GitHub repositories.
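
A client of the Jenkins API might look like the sketch below, which prepares requests for two standard Jenkins remote-access endpoints: triggering a job and reading the last build's status. The server URL, job name, and credentials are placeholders, and the helper `jenkins_request` is hypothetical; the original Bot service is not shown in the source.

```python
import base64
import urllib.parse
import urllib.request


def jenkins_request(base_url, job_name, user, api_token, action="build"):
    """Prepare (but do not send) a Jenkins remote-access API request."""
    # "build" triggers the job; "status" fetches JSON about the last build.
    routes = {
        "build": ("POST", "build"),
        "status": ("GET", "lastBuild/api/json"),
    }
    method, suffix = routes[action]
    job_path = urllib.parse.quote(job_name)
    url = f"{base_url.rstrip('/')}/job/{job_path}/{suffix}"
    # Jenkins accepts a user name plus API token via HTTP Basic authentication.
    token = base64.b64encode(f"{user}:{api_token}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url, method=method, headers={"Authorization": f"Basic {token}"}
    )
```

The JSON returned by the status endpoint includes fields such as `result` and `building`, which a caller could map to the status shown to the user.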

User Interface

Azure Chatbot:

The chatbot is the user interface. It is capable of human-like conversations, powered by language interpretation using LUIS. Each environment (AWS, Azure, and on-premise) has a separate dialogue, so the Bot can easily be extended with new cloud platforms. Prompts for selection options and confirmations give a real-time user interaction experience. The sequence of questions is implemented using the waterfall dialogue class and customised adaptive cards. The Bot application consumes all the microservices from the different environments through their REST APIs. No environment-specific credentials are configured in the Bot application source code, which keeps it easy to configure against the REST APIs.
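
The idea of one waterfall dialogue per environment can be sketched in plain Python as follows. This is not the Bot Framework waterfall dialogue class; it is a simplified stand-in that only shows the pattern of ordered steps and a per-environment registry. All class, function, and application names are hypothetical.

```python
class WaterfallSketch:
    """Runs a fixed list of steps in order, consuming one user reply per step."""

    def __init__(self, steps):
        self.steps = steps

    def run(self, replies):
        state = {}
        prompts = []
        for step, reply in zip(self.steps, replies):
            # Each step records the reply and returns the next message to show.
            prompts.append(step(state, reply))
        return state, prompts


def choose_action(state, reply):
    state["action"] = reply
    return "Which application?"


def choose_application(state, reply):
    state["application"] = reply
    return f"Confirm {state['action']} of {state['application']}? (yes/no)"


def confirm(state, reply):
    state["confirmed"] = reply.strip().lower() == "yes"
    return "Request submitted." if state["confirmed"] else "Request cancelled."


# One dialogue per environment; supporting a new platform means adding an entry.
DIALOGUES = {
    "azure": WaterfallSketch([choose_action, choose_application, confirm]),
    "aws": WaterfallSketch([choose_action, choose_application, confirm]),
    "on-premise": WaterfallSketch([choose_action, choose_application, confirm]),
}

state, prompts = DIALOGUES["aws"].run(["build", "inventory-api", "yes"])
print(prompts[-1])
```

Because each environment owns its own list of steps, an environment with extra questions can diverge without affecting the others.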

Reports are generated and downloaded in CSV format. The Bot offers build and deployment status reports, which capture the status and details of each pipeline execution.
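
Converting pipeline run data from JSON into a CSV report can be done with the standard library alone, as in this minimal sketch. The column names and the shape of the input records are assumptions, since the source does not describe the report schema.

```python
import csv
import io
import json


def runs_to_csv(runs_json, columns=("environment", "application", "status", "finished")):
    """Convert a JSON array of pipeline runs into CSV text with a header row."""
    runs = json.loads(runs_json)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(columns), lineterminator="\n")
    writer.writeheader()
    for run in runs:
        # Keep only the report columns; missing values become empty cells.
        writer.writerow({column: run.get(column, "") for column in columns})
    return buffer.getvalue()


# Hypothetical sample record, including an extra field that the report drops.
sample = json.dumps([
    {"environment": "aws", "application": "shop", "status": "Succeeded",
     "finished": "2021-01-05", "runId": 42},
])
print(runs_to_csv(sample))
```

The returned text can be written to a file or sent as a download response.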

LUIS interprets the chat messages and maps them to pre-defined intents, which drive the user's conversation with the Bot.

Note: This project uses unauthenticated users in the Bot. In the production environment, the Bot is deployed in Teams or a custom web application.

Python Flask:

An additional web UI is provided using the Flask micro web framework. The REST APIs developed for the Azure Bot are reused in this web UI, so users can perform the same operations that the Bot provides.

Business and Technical Challenges:

Business challenges and Solutions:

- Add reporting capability using data generated dynamically from builds and releases in the different environments: Power BI reports were replaced with downloadable CSV reports, produced by converting the JSON data to CSV. The reporting feature incurs no additional managed service cost.

- Cost of cloud resources should be minimal: Used serverless computing such as AWS Lambda functions and Azure Functions instead of EC2, which reduces computing costs.

- Cost of setting up DevOps for on-premise applications: Used a license-free Jenkins server for on-premise applications. The DevOps setup was completed without incurring any additional license fees.

Technical Challenges and Solutions:

- Multiple environments are involved: The DevOps services for each cloud are developed with a microservice approach and are built and deployed separately. AWS Lambda and Azure Functions were used to realise the microservice architecture.

- Maintainability of the Bot for new platforms and clouds: Used the waterfall dialogue and implemented each environment's actions in a separate dialogue. With this design, it is easy to add or remove an environment without many code changes.

- Bot and service code maintainability: The service implementation is separated from the Bot core code structure, so changes in a service can be handled without impacting the Bot code.

- Lightweight service: Used REST APIs on the .NET Core platform.

Learning:

The key learnings from this Capstone project are:

- Cost optimisation using serverless computing such as AWS Lambda and Azure Functions.

- Exposure to setting up DevOps pipelines on different cloud and on-premise platforms.

- Role of the microservice pattern in REST API service development.

- Building secure cloud solutions using IAM, Key Vault, Amazon API Gateway, etc.

About the Author:

Shijith Shylan – Shijith works as a technical architect in Microsoft Dotnet development at Accenture. He is interested in Cloud architecture, DevOps and UI development with Service-oriented architecture. He has 14 years of work experience in design and development of .Net applications.
