Ever wondered how Google Photos can automatically organize your pictures by faces or locations?

That’s the power of image recognition.

This technology helps computers identify objects, people, and even emotions in images, opening up countless possibilities.

In this guide, we’ll explain what image recognition is, how it works, and the many ways it’s transforming industries today.

Defining Image Recognition

Image recognition is a specialized field of computer vision, itself a branch of AI, in which computers interpret visual information. It involves identifying and classifying objects, people, places, or actions in images or videos.

Key functions of image recognition include:

- Identifying objects: Recognizing various items such as animals, vehicles, and everyday objects.

- Identifying places: Detecting landmarks, locations, or specific environments like cities, parks, or buildings.

- Recognizing people: Identifying individuals through facial recognition or other features.

- Understanding actions: Recognizing movements or activities, such as gestures, running, or dancing.

- Scene analysis: Understanding the context of a scene, including background elements and relationships between objects.

- Emotion detection: Analyzing facial expressions to determine the emotional state of a person.

- Text recognition: Extracting and reading text within images (also known as optical character recognition or OCR).

- Pattern recognition: Identifying patterns or anomalies in visual data, such as detecting defects in products or recognizing specific shapes and structures.

Image recognition enables computers not just to “see” but to understand and interpret the world through visual data, which supports a wide range of applications across industries.

The Science Behind Image Recognition

Understanding how image recognition works requires breaking down how these systems process and interpret visual data.

Overview of How Image Recognition Works:

- Data Collection

The first step involves collecting a large set of images with various objects, people, and scenes. These images are often labeled with accurate descriptions to help the model learn.

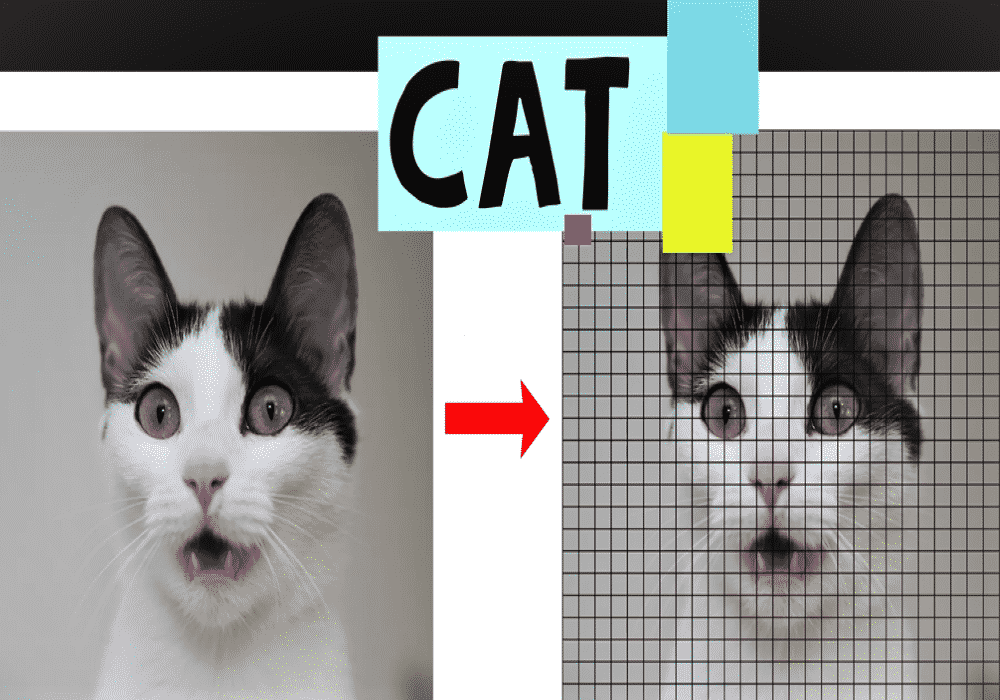

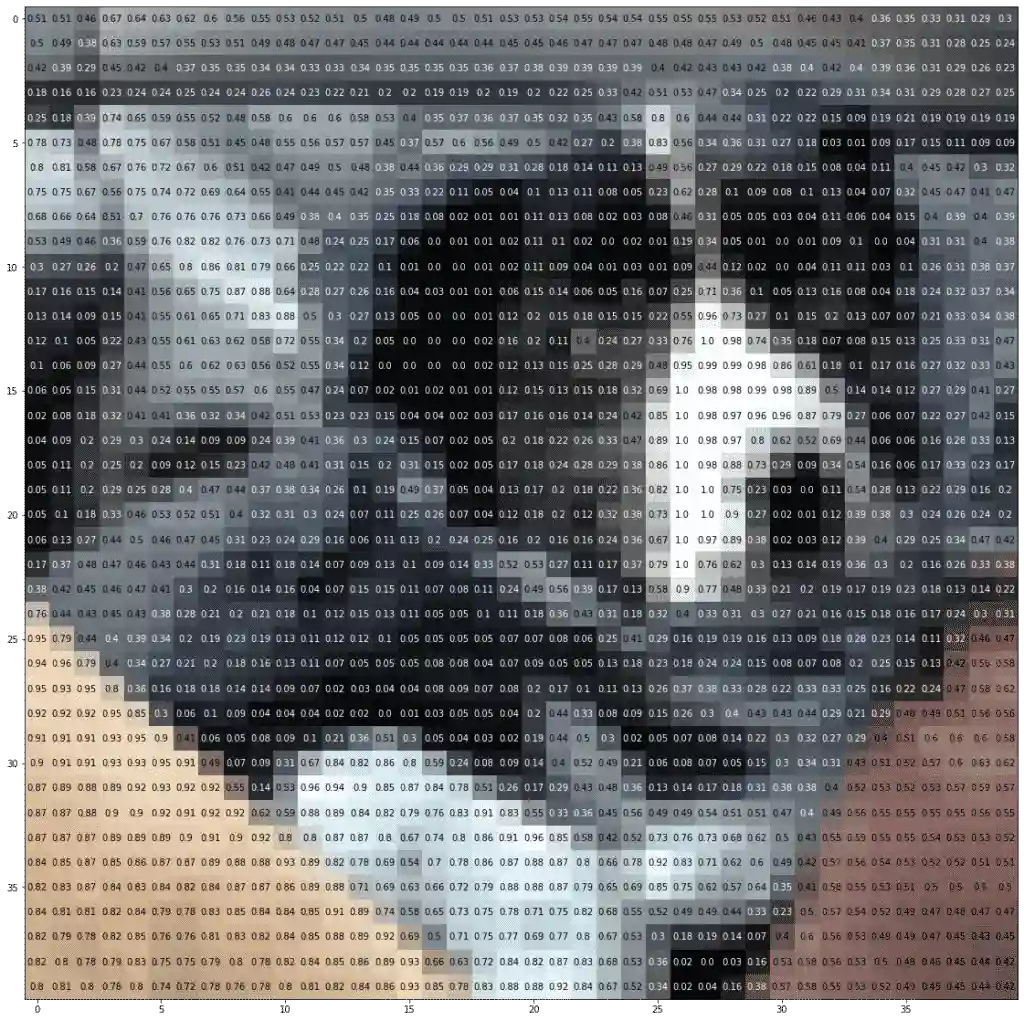

- Pixel Analysis

Every image consists of thousands (or millions) of tiny pixels, each containing color and brightness values. The recognition system begins by analyzing these individual pixels to identify patterns and features.
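
To make the idea of pixel values concrete, here is a minimal sketch in plain Python. The 2x2 image is made up for illustration, and the brightness formula uses the common ITU-R BT.601 luma weights, which is one of several possible conventions.

```python
# A tiny 2x2 RGB image: each pixel is a (red, green, blue) tuple, values 0-255.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]

def to_grayscale(rgb_image):
    """Convert each RGB pixel to a single brightness value (BT.601 weights)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]

gray = to_grayscale(image)
print(gray)  # [[76, 150], [29, 255]]
```

Green contributes most to perceived brightness, which is why the pure green pixel ends up brighter than the pure red or blue one.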

- Feature Extraction

Machine learning algorithms extract specific features from these images, such as edges, corners, and textures. The system looks for patterns that can help distinguish one object from another, much like how humans identify key features like the shape of a face or the outline of a car.

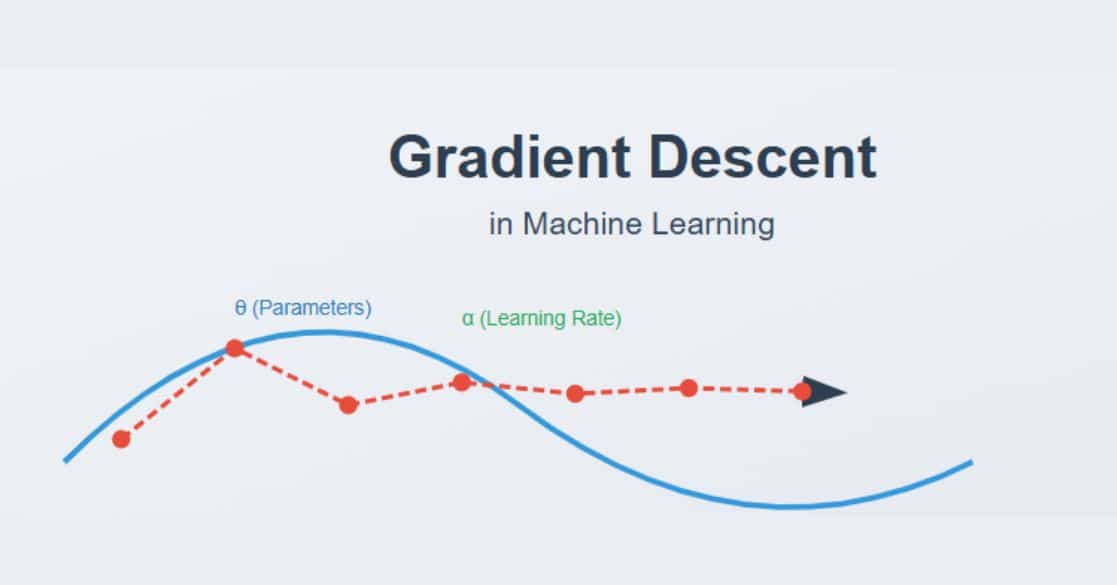
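
As a rough illustration of edge extraction, the sketch below slides a Sobel kernel over a small grayscale grid. This is a classic hand-crafted filter, shown here only as an example of a feature extractor; the sample grid is invented.

```python
def sobel_x(gray):
    """Horizontal gradient at each interior pixel, using the 3x3 Sobel kernel."""
    kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    height, width = len(gray), len(gray[0])
    result = []
    for y in range(1, height - 1):
        row = []
        for x in range(1, width - 1):
            total = 0
            for ky in range(3):
                for kx in range(3):
                    total += kernel[ky][kx] * gray[y + ky - 1][x + kx - 1]
            row.append(total)
        result.append(row)
    return result

# Dark on the left, bright on the right: a vertical edge.
gray = [
    [0, 0, 0, 255, 255],
    [0, 0, 0, 255, 255],
    [0, 0, 0, 255, 255],
]
edges = sobel_x(gray)
print(edges)  # [[0, 1020, 1020]]
```

The flat dark area produces 0, while positions whose 3x3 window straddles the edge produce a large response.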

- Training Models

With the help of labeled data, machine learning models, particularly deep learning algorithms such as convolutional neural networks, are trained to recognize these features and associate them with specific categories (e.g., “dog,” “car,” or “cat”).
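
The sketch below shows the general idea of learning from labeled examples. It uses a perceptron, which is far simpler than a convolutional neural network, and the two-number feature vectors and labels are hypothetical.

```python
def train_perceptron(samples, labels, epochs=20, learning_rate=0.1):
    """Adjust weights whenever a labeled example is misclassified."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            activation = sum(w * x for w, x in zip(weights, features)) + bias
            predicted = 1 if activation > 0 else 0
            error = label - predicted  # 0 when the prediction is right
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias

def predict(weights, bias, features):
    return 1 if sum(w * x for w, x in zip(weights, features)) + bias > 0 else 0

# Hypothetical features per image; label 1 = "dog", label 0 = "car".
samples = [[0.9, 0.1], [0.8, 0.2], [0.2, 0.9], [0.1, 0.8]]
labels = [1, 1, 0, 0]

weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, s) for s in samples])  # [1, 1, 0, 0]
```

Real systems learn millions of weights rather than two, but the loop has the same shape: predict, compare against the label, and nudge the weights.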

- Prediction and Recognition

After the model has been trained, it can take new images, analyze the pixels, extract relevant features, and predict what the image contains. The system may output a label like “dog” or “building” along with a confidence score indicating the likelihood that the prediction is correct.
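
One common way to turn a model's raw scores into a confidence score is the softmax function. The sketch below assumes three made-up raw scores; the labels are only examples.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

labels = ["dog", "car", "building"]
raw_scores = [2.0, 1.0, 0.1]  # invented model outputs

probabilities = softmax(raw_scores)
best = max(range(len(labels)), key=lambda i: probabilities[i])
print(labels[best], round(probabilities[best], 3))  # dog 0.659
```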

Popular Image Recognition Algorithms and How They Are Used

In image recognition, several powerful algorithms are employed to help machines “see” and understand visual data.

These algorithms use different approaches to identify patterns, classify objects, and make sense of images.

Below are some of the most popular image recognition algorithms and their practical applications.

1. Convolutional Neural Networks (CNNs)

CNNs are one of the most widely used algorithms for image recognition. They are designed to automatically and adaptively learn spatial hierarchies of features from images. CNNs work by passing an image through multiple layers (convolutional layers, pooling layers, and fully connected layers) to detect various features like edges, textures, and complex shapes.
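
A minimal sketch of what one convolutional layer followed by pooling computes is shown below. The kernel values are hand-picked for illustration, whereas a real CNN learns them during training, and the fully connected layers are omitted.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[y + i][x + j]
                for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]

def relu(feature_map):
    """Keep positive responses, zero out negative ones."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Downsample by keeping the largest value in each size x size block."""
    return [
        [
            max(feature_map[y + i][x + j]
                for i in range(size) for j in range(size))
            for x in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for y in range(0, len(feature_map) - size + 1, size)
    ]

image = [[1, 1, 0, 0, 0] for _ in range(5)]  # bright left, dark right
kernel = [[1, -1], [1, -1]]  # responds where left is brighter than right

features = max_pool(relu(conv2d(image, kernel)))
print(features)  # [[2, 0], [2, 0]]
```

The pooled output is smaller than the input but still records where the bright-to-dark transition occurs.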

Use Cases:

- Facial Recognition: Identifying and verifying faces in images for security or social media applications.

- Object Detection: Used in autonomous vehicles to detect pedestrians, traffic signs, and other vehicles.

- Medical Imaging: Assisting doctors by identifying anomalies in medical scans like X-rays or MRIs.

2. Region-based CNN (R-CNN)

R-CNN builds on CNNs to locate objects as well as classify them. Rather than classifying the image as a whole, it first proposes candidate regions of interest (ROIs) and then applies a CNN to each region to classify the object it contains.
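
The propose-then-classify flow can be sketched as follows. Both helpers are stand-ins: `propose_regions` uses fixed sliding windows rather than a real proposal method, and `classify_region` is a hypothetical brightness threshold in place of a CNN.

```python
def propose_regions(width, height, box=2, stride=2):
    """Stand-in proposal step: fixed-size windows as (x1, y1, x2, y2)."""
    return [
        (x, y, x + box, y + box)
        for y in range(0, height - box + 1, stride)
        for x in range(0, width - box + 1, stride)
    ]

def classify_region(image, region):
    """Hypothetical classifier: 'object' when mean brightness exceeds 0.5."""
    x1, y1, x2, y2 = region
    pixels = [image[y][x] for y in range(y1, y2) for x in range(x1, x2)]
    score = sum(pixels) / len(pixels)
    return ("object" if score > 0.5 else "background"), score

image = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

detections = []
for region in propose_regions(4, 4):
    label, score = classify_region(image, region)
    if label == "object":
        detections.append((region, score))

print(detections)  # [((2, 2, 4, 4), 1.0)]
```

Because each region is classified separately, the result includes a location as well as a label.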

Use Cases:

- Object Localization: Used in applications like robotic vision or drone navigation, where the system needs to not only recognize but also locate objects in the environment.

- Video Surveillance: Detecting and tracking objects or people in security footage.

3. You Only Look Once (YOLO)

YOLO is an advanced real-time object detection algorithm that divides an image into a grid and predicts bounding boxes and class probabilities for each grid cell. YOLO can detect multiple objects in one image at high speed, making it ideal for real-time applications.
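
To illustrate the grid idea, the sketch below finds which grid cell contains the center of an object's bounding box. The 7x7 grid and 448x448 image size are illustrative values, and `responsible_cell` is a name invented for this example.

```python
def responsible_cell(box, image_width, image_height, grid=7):
    """Return (row, col) of the grid cell that contains the box center."""
    x1, y1, x2, y2 = box
    center_x = (x1 + x2) / 2
    center_y = (y1 + y2) / 2
    # min() keeps a center lying exactly on the far border inside the grid.
    col = min(int(center_x / image_width * grid), grid - 1)
    row = min(int(center_y / image_height * grid), grid - 1)
    return row, col

# A box with corners (100, 200) and (300, 400) in a 448x448 image.
print(responsible_cell((100, 200, 300, 400), 448, 448))  # (4, 3)
```

Each cell makes its predictions in the same forward pass, which is a large part of why this style of detector is fast.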

Use Cases:

- Real-Time Video Analysis: Detecting moving objects in live video feeds, such as vehicles or people, for applications in security and surveillance.

- Self-Driving Cars: Identifying pedestrians, other vehicles, traffic lights, and obstacles in real-time to navigate safely.

4. Faster R-CNN

Faster R-CNN is an improvement over R-CNN that integrates a Region Proposal Network (RPN) with the CNN, so that region proposal and classification share a single network and its convolutional features. This removes the separate proposal step that R-CNN relies on, significantly improving speed and accuracy.
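
A Region Proposal Network scores a fixed set of reference boxes, usually called anchors, at each position of the feature map. The sketch below generates such anchors for one position; the function name is hypothetical, and the scales and ratios are example values.

```python
import math

def make_anchors(center_x, center_y,
                 scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Build boxes of several sizes and shapes around one center point.

    Each anchor has area scale**2 and height/width equal to ratio.
    """
    anchors = []
    for scale in scales:
        for ratio in ratios:
            width = scale / math.sqrt(ratio)
            height = scale * math.sqrt(ratio)
            anchors.append((
                center_x - width / 2, center_y - height / 2,
                center_x + width / 2, center_y + height / 2,
            ))
    return anchors

anchors = make_anchors(300, 300)
print(len(anchors))  # 9
print(anchors[1])    # (236.0, 236.0, 364.0, 364.0)
```

The network then predicts, for each anchor, whether it contains an object and how the box should be adjusted to fit it.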

Use Cases:

- Image Captioning: Creating descriptions for images by recognizing objects and their relationships within the scene.

- Automated Inventory Management: Detecting and tracking items in retail settings, enabling smart inventory systems.

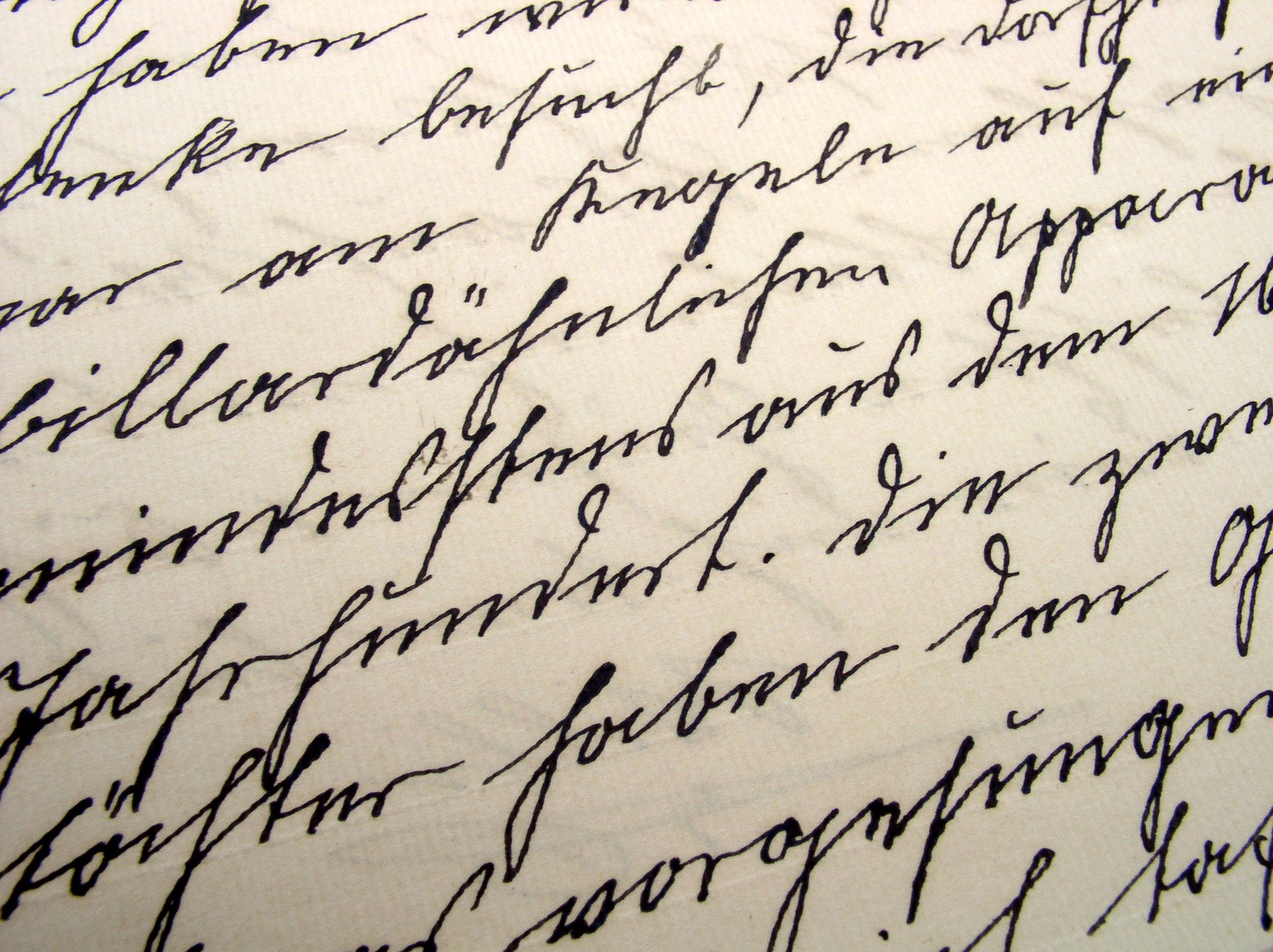

5. Support Vector Machines (SVM)

SVM is a machine learning algorithm that can be used for image classification. It works by finding the hyperplane that best separates the classes in feature space, that is, the one with the widest margin between them. Though less popular for large-scale tasks, SVM is still effective for simpler image recognition tasks with smaller datasets.
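
Once a linear SVM is trained, classifying a new example comes down to checking which side of the hyperplane it falls on. The sketch below skips training entirely and assumes hypothetical weights, a bias, and class names.

```python
import math

def decision_value(weights, bias, features):
    """Signed score: positive on one side of the hyperplane, negative on the other."""
    return sum(w * x for w, x in zip(weights, features)) + bias

def classify(weights, bias, features):
    return "class_a" if decision_value(weights, bias, features) >= 0 else "class_b"

# Hypothetical values, standing in for the result of training.
weights = [1.0, -1.0]
bias = 0.0

print(classify(weights, bias, [0.9, 0.2]))  # class_a
print(classify(weights, bias, [0.3, 0.8]))  # class_b

# For a linear SVM, the margin between the two classes has width 2 / ||w||.
margin_width = 2 / math.sqrt(sum(w * w for w in weights))
```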

Use Cases:

- Text Recognition: Identifying and classifying text in images, such as reading scanned documents.

- Handwritten Digit Recognition: Used in applications like postal code reading and bank check processing.

6. K-Nearest Neighbors (KNN)

KNN is a simple machine learning algorithm that classifies an image based on the closest labeled data points in a feature space. It requires no training phase, but prediction becomes slow with large datasets because each new image must be compared against the stored examples. KNN measures the distance between feature vectors (for example, raw pixel values) and assigns the most frequent label among the closest neighbors.
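
A minimal KNN classifier fits in a few lines of plain Python. The two-number feature vectors and labels below are invented for illustration.

```python
import math
from collections import Counter

def knn_classify(training_data, query, k=3):
    """Label the query by majority vote among its k nearest neighbors."""
    nearest = sorted(
        training_data,
        key=lambda item: math.dist(item[0], query),  # Euclidean distance
    )[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical (feature_vector, label) pairs.
training_data = [
    ([0.10, 0.20], "cat"),
    ([0.20, 0.10], "cat"),
    ([0.15, 0.15], "cat"),
    ([0.90, 0.80], "dog"),
    ([0.80, 0.90], "dog"),
]

print(knn_classify(training_data, [0.2, 0.2]))    # cat
print(knn_classify(training_data, [0.85, 0.85]))  # dog
```

Note that every prediction sorts the full training set, which is the source of the slowdown on large datasets.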

Use Cases:

- Image Search: Matching similar images by comparing their features.

- Face Recognition: Classifying faces by comparing known data with new, unlabeled images.

7. Principal Component Analysis (PCA)

PCA is a dimensionality reduction technique often used in conjunction with image recognition. It projects image data onto a small number of components that capture most of the variance, reducing the number of variables while preserving the features that matter most for classification.
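
The sketch below finds the first principal component of a tiny made-up dataset using power iteration, then projects each two-number sample onto it to get a single number. Production code would normally use a linear algebra library; this version uses plain Python to show the steps.

```python
import math

def first_principal_component(data, iterations=100):
    """Return (means, unit vector along the direction of greatest variance)."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in data]
    covariance = [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]
    # Power iteration: repeatedly multiplying by the covariance matrix
    # pulls the vector toward the dominant eigenvector.
    vector = [1.0 / (j + 1) for j in range(d)]
    for _ in range(iterations):
        product = [sum(covariance[i][j] * vector[j] for j in range(d))
                   for i in range(d)]
        norm = math.sqrt(sum(v * v for v in product))
        if norm == 0:  # degenerate start or constant data; stop early
            break
        vector = [v / norm for v in product]
    return means, vector

def project(row, means, component):
    """Reduce one sample to a single coordinate along the component."""
    return sum((x - m) * c for x, m, c in zip(row, means, component))

data = [[2.0, 1.9], [4.0, 4.1], [6.0, 5.9], [8.0, 8.1]]
means, component = first_principal_component(data)
reduced = [project(row, means, component) for row in data]
print([round(c, 2) for c in component])
print([round(v, 2) for v in reduced])
```

Because the two columns rise together, the component points roughly along the diagonal, and one number per sample preserves nearly all of the variation.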

Use Cases:

- Feature Extraction: Reducing image complexity before applying more advanced classification models.

- Face Recognition: Used in facial recognition systems where the number of features is reduced to speed up processing.

How These Algorithms Are Used In Different Industries

- Retail and E-commerce: Companies like Amazon use image recognition for visual search, which lets customers find products by uploading images.

- Healthcare: In radiology, deep learning-based algorithms like CNNs are used to analyze medical scans, identifying issues such as tumors or fractures.

- Autonomous Vehicles: Object detectors from the YOLO and R-CNN families are used in self-driving cars to detect pedestrians, road signs, and other vehicles, with the faster models running in real time.

- Security and Surveillance: Facial recognition and object detection algorithms are widely used in public safety to track individuals, monitor suspicious activity, and improve security systems.

- Agriculture: Drones and image recognition algorithms help monitor crop health, detect pests, and estimate yields.

Comparison Table: Image Recognition vs Object Detection

| Feature | Image Recognition | Object Detection |
| --- | --- | --- |
| Definition | Identifying and classifying objects, people, or scenes in an image. | Identifying and localizing multiple objects within an image by drawing bounding boxes. |
| Primary Goal | To classify what the image contains (e.g., dog, car, tree). | To detect and locate objects within the image (e.g., a car in the top-right corner, a dog in the center). |
| Output | Single label or class for the entire image (e.g., “dog”). | Multiple bounding boxes with object labels for each identified object (e.g., “car”, “dog”, with coordinates). |
| Scope | Works with entire images or scenes, classifying them as a whole. | Focuses on identifying and marking specific objects within an image. |
| Use Cases | Image classification, scene analysis, facial recognition | Object tracking in videos, self-driving cars, retail and inventory management, security surveillance |
| Technology Used | CNN (Convolutional Neural Networks), SVM (Support Vector Machines) | CNN (Convolutional Neural Networks), YOLO (You Only Look Once), Faster R-CNN, SSD (Single Shot MultiBox Detector) |
| Complexity | Simpler, as it typically involves identifying one or a few categories in an image. | More complex, as it involves detecting multiple objects and their positions. |
| Example | Recognizing a single object like a “cat” or a “tree” in an image. | Detecting multiple objects like “person”, “dog”, and “bicycle” in the same image with their bounding boxes. |
| Output Type | A single class label (e.g., “cat”, “car”, “tree”). | Multiple object labels and bounding box coordinates for each detected object. |
| Accuracy Requirements | Typically needs high accuracy for individual object classification. | Needs high accuracy for both object recognition and correct placement (bounding boxes). |
| Real-World Applications | Content-based image retrieval, automated image tagging, social media tagging | Autonomous driving, facial recognition, object tracking in security systems |
| Examples of Tools | Google Vision API, ImageNet classifier, TensorFlow’s Image Classification | YOLO (You Only Look Once), R-CNN, OpenCV for real-time object detection |
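
The "correct placement" requirement for object detection is commonly measured with intersection over union (IoU), which compares a predicted bounding box with the true one. The sketch below assumes boxes given as (x1, y1, x2, y2) corner coordinates.

```python
def iou(box_a, box_b):
    """Overlap area divided by combined area; 1.0 means a perfect match."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    overlap_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    overlap_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = overlap_w * overlap_h
    union = ((ax2 - ax1) * (ay2 - ay1)
             + (bx2 - bx1) * (by2 - by1)
             - intersection)
    return intersection / union if union > 0 else 0.0

print(iou((0, 0, 10, 10), (0, 0, 10, 10)))            # 1.0
print(round(iou((0, 0, 10, 10), (5, 5, 15, 15)), 3))  # 0.143
print(iou((0, 0, 10, 10), (20, 20, 30, 30)))          # 0.0
```

A classifier only has to get the label right, while a detector is also judged on how closely its box overlaps the true one.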

Challenges and Limitations of Image Recognition

1. Data Privacy Concerns

- Challenge: Image recognition often deals with sensitive data, such as facial images, medical scans, or personal photos, raising privacy concerns.

- Impact: Mismanagement of this data can lead to breaches of privacy, identity theft, and unauthorized surveillance.

- Solution: Complying with strong data protection laws (e.g., GDPR) and processing data locally (edge computing) can help ensure better security and privacy control.

2. Accuracy and Bias in Algorithms

- Challenge: Image recognition models may be inaccurate, especially if they are trained on biased or unrepresentative data.

- Impact: Bias in algorithms can lead to unfair outcomes, such as misidentifying individuals based on race, gender, or age, particularly in sensitive areas like law enforcement or hiring.

- Solution: Regular audits, diversified datasets, and ongoing improvements in AI fairness can help reduce bias and improve the accuracy of models.
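
One simple form of audit is to compare accuracy across groups. The sketch below uses invented records and hypothetical group names; a real audit would use held-out data and more than one metric.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, predicted_label, actual_label)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}

# Made-up predictions for two hypothetical groups.
records = [
    ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_b", "match", "match"),
    ("group_b", "match", "no_match"),
    ("group_b", "no_match", "match"),
    ("group_b", "no_match", "no_match"),
]

print(accuracy_by_group(records))  # {'group_a': 1.0, 'group_b': 0.5}
```

A large gap between groups, as in this toy data, suggests the training set may underrepresent one of them.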

3. Dependence on Large Datasets

- Challenge: Training effective image recognition systems requires massive amounts of labeled data, which can be time-consuming and costly to gather.

- Impact: Insufficient or low-quality data leads to poor model performance, limiting the technology’s effectiveness.

- Solution: Using techniques like transfer learning (adapting pre-trained models) and data augmentation (creating synthetic variations of data) can reduce the need for large datasets and improve model training.
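
Data augmentation can be sketched with two simple transformations on a grayscale image stored as a list of rows. The pixel values and the brightness shift of 40 are arbitrary choices for illustration.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]

def adjust_brightness(image, delta):
    """Shift every pixel by delta, clamped to the valid 0-255 range."""
    return [[min(255, max(0, pixel + delta)) for pixel in row] for row in image]

def augment(image):
    """Turn one labeled image into four training examples."""
    return [
        image,
        flip_horizontal(image),
        adjust_brightness(image, 40),
        adjust_brightness(image, -40),
    ]

image = [
    [0, 50, 100],
    [150, 200, 250],
]

for variant in augment(image):
    print(variant)
# [[0, 50, 100], [150, 200, 250]]
# [[100, 50, 0], [250, 200, 150]]
# [[40, 90, 140], [190, 240, 255]]
# [[0, 10, 60], [110, 160, 210]]
```

All four variants keep the original label, so a small labeled set goes further without any new annotation work.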

The Future of Image Recognition

Image recognition is set for rapid advancements, driven by AI and machine learning. Key trends shaping its future include:

- Improved Accuracy: AI models, particularly deep learning networks, will enhance precision in object, face, and pattern recognition.

- Multimodal Systems: Integration with other sensory inputs will allow for smarter, more context-aware recognition.

- Real-Time Processing: Faster, more efficient systems will enable instant analysis, critical for applications like autonomous driving.

- Edge Computing: Processing on devices will boost privacy and speed while reducing reliance on centralized cloud computing.

- Wider Applications: From healthcare to retail, image recognition will revolutionize industries with personalized and intelligent solutions.

- Ethical Focus: Addressing privacy, bias, and surveillance issues will be essential for responsible development.

In summary, image recognition will continue to evolve, offering new opportunities while requiring careful ethical consideration.

Conclusion

Image recognition is changing the face of industry, offering innovative solutions while raising challenges around privacy and bias. As AI and machine learning evolve, ongoing attention to these concerns is shaping more efficient and ethical systems.

To stay ahead in this exciting field, consider Great Learning’s AI and ML course, which offers a platform for developing the skills required to build and apply technologies such as image recognition.

Whether you are new to AI or looking to upgrade your expertise, Great Learning gives you a one-stop place to learn and helps you shape the future of technology.