In a DevOps interview, the interviewer tests how well you understand DevOps tools, concepts, and culture, and how you apply them to real-world problems.

This guide covers the DevOps questions most commonly asked in interviews, along with answers that demonstrate practical, hands-on experience.

General and Cultural DevOps Questions

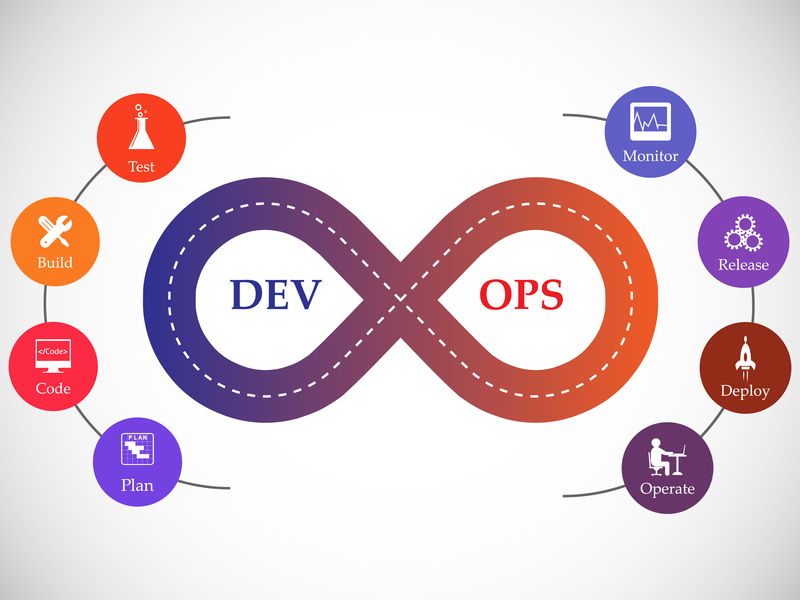

1. What is DevOps?

DevOps is a cultural approach in which development (those who write the code) and operations (those who manage servers and deployment) work together to automate and accelerate the software delivery lifecycle.

Traditionally, developers wrote the code and handed it over to the ops team to deploy. In DevOps, this wall is removed: both teams handle the entire software lifecycle together, from planning to deployment.

The advantage of this is that developers now also focus on stability and scalability, and operations people are involved earlier in the design process, resulting in fast and reliable software releases.


2. What are the core principles of DevOps?

DevOps has three core principles: shared ownership, automation, and fast feedback.

- Shared ownership means the entire team takes responsibility for the product, not just the developers or ops people separately. Everyone has the same goal: to improve the product.

- Automation means repetitive tasks (like build, test, and deploy) don't have to be done manually. This saves time and reduces errors.

- Fast feedback means getting quick signals from both the system and users, through monitoring and user data.

This feedback helps the team understand what's working well and what needs improvement, both in the product and the process.

3. How is DevOps different from Agile?

Agile and DevOps solve different parts of the same problem.

Agile focuses on making the software development process fast and flexible. This means working in small parts, testing, and providing updates quickly.

DevOps takes this idea further. It doesn't just stop at development, but covers the entire process, including operations and delivery.

We can say that Agile makes the developer team faster, and DevOps makes the entire organization faster and more reliable in delivering and running software.


4. What are the most important benefits of DevOps?

The biggest advantage of DevOps is that speed and stability come together. Previously, it was believed that increasing speed would compromise system stability, but DevOps practices make it possible to balance the two.

In DevOps, the release pipeline is automated, so new features can be launched quickly. Testing and infrastructure are also automated and owned by the whole team, so fewer mistakes reach production.

And even if an issue arises, the Mean Time To Recovery (MTTR) is lower, because both the team and the tools are prepared to resolve problems quickly.
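As a rough illustration of how MTTR is calculated, the sketch below averages the recovery time over a few made-up incidents. The timestamps and the `mean_time_to_recovery` helper are hypothetical and not taken from any particular monitoring tool.

```python
from datetime import datetime, timedelta

def mean_time_to_recovery(incidents):
    """Average time between detection and recovery across incidents."""
    if not incidents:
        raise ValueError("need at least one incident")
    total = sum((recovered - detected for detected, recovered in incidents), timedelta())
    return total / len(incidents)

# Hypothetical incidents: (detected_at, recovered_at)
incidents = [
    (datetime(2024, 1, 5, 10, 0), datetime(2024, 1, 5, 10, 30)),    # 30 min
    (datetime(2024, 2, 11, 14, 0), datetime(2024, 2, 11, 14, 10)),  # 10 min
    (datetime(2024, 3, 2, 9, 0), datetime(2024, 3, 2, 9, 20)),      # 20 min
]

mttr = mean_time_to_recovery(incidents)
print(mttr)  # 0:20:00
```

In an interview, being able to say how the number is derived is usually more convincing than just naming the metric.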

5. Which DevOps tools are you most familiar with?

I have extensive practical experience with DevOps tools across the entire software lifecycle. I typically use these tools:

- Version Control: I mostly use Git and GitHub to manage code and collaborate with the team.

- CI/CD: GitLab CI is my favorite because it integrates directly with the repository, though I've also built complex pipelines using Jenkins.

- Infrastructure as Code (IaC): I use Terraform for cloud infrastructure setup and Ansible for server configuration.

- Containerization: I build images with Docker and manage them at large scale with Kubernetes.

- Monitoring: I usually use a combination of Prometheus and Grafana for monitoring. One collects metrics, the other visualizes them. I've also occasionally used Datadog when I need an all-in-one setup.


Version Control Questions

6. What is Git?

Git is a distributed version control system that tracks changes to code.

"Distributed" means that each developer has the entire history of the project on their own computer. This allows them to work without internet access, create new branches, and safely merge their code.

This system is perfect for teamwork. Each person can work on a different feature without disrupting the code of others. When everything is ready, everyone's work is merged into a final version.

7. Explain the difference between git merge and git rebase.

Both git merge and git rebase integrate changes from one branch into another, but they do it differently.

Git merge is simple and safe. It keeps the history of both branches as is and, unless Git can fast-forward, creates a new "merge commit" that records which branches were joined.

Example:

git merge feature-branch

This combines the histories of both branches without deleting or rewriting anything.

Git rebase gives a cleaner result but is riskier. It takes your feature branch's commits and replays them on top of the latest commit of the target branch, as if everything happened in a straight line.

Example:

git rebase main

The result is a clean, linear history, as if no side branches ever existed.

But rebase should only be performed on your local or private branches. Rebasing a shared branch (like main or develop) rewrites its commits with new IDs, so teammates' copies of the branch no longer match and their work can end up duplicated or overwritten.
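To make the difference concrete, here is a toy Python model of a commit graph. It is only a sketch: the `Commit` class and the `merge` and `rebase` helpers are hypothetical and greatly simplified compared to Git's real object model. The one idea it borrows from Git is that a commit's ID depends on its parents.

```python
import hashlib
from dataclasses import dataclass

@dataclass(frozen=True)
class Commit:
    message: str
    parents: tuple = ()

    @property
    def sha(self):
        # As in Git, the ID depends on the content and on the parent IDs.
        data = self.message + "|" + ",".join(p.sha for p in self.parents)
        return hashlib.sha1(data.encode()).hexdigest()

def merge(ours, theirs):
    """Create a merge commit with two parents; existing commits are untouched."""
    return Commit(f"Merge {theirs.message!r}", (ours, theirs))

def rebase(branch_commits, new_base):
    """Replay commits on top of new_base; every replayed commit gets a new ID."""
    tip = new_base
    for commit in branch_commits:
        tip = Commit(commit.message, (tip,))
    return tip

base = Commit("initial")
main_tip = Commit("main: fix typo", (base,))
feature = Commit("feature: add login", (base,))

merged = merge(main_tip, feature)
rebased = rebase([feature], main_tip)

print(len(merged.parents))                 # 2: both histories are kept
print(feature in merged.parents)           # True: the original commit is preserved
print(rebased.message == feature.message)  # True: same change...
print(rebased.sha != feature.sha)          # True: ...but a different commit ID
```

The last line is the reason rebasing shared branches causes trouble: anyone who already has the original commit now holds a different ID than the rewritten one.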

8. What is a Git branching strategy you have used?

I use GitHub Flow. It has only one main branch that is always deployable.

To work on something new, create a feature branch, like feature-login-page, off the main branch. When the work is done, open a pull request, merge the branch back into main after review, and then deploy.

I've also used GitFlow before. It's fine for larger projects with strict, scheduled release cycles, but it feels like overkill for smaller web projects. GitHub Flow is simple and well suited to continuous delivery.

9. What is a pull request?

A pull request, or PR, is a way to propose changes to a codebase. First, you create a branch (say, feature-login), do your work on it, then open a pull request so that the team can decide whether these changes should go into the main branch or not.

In this process, the team reviews the code, looks for errors, suggests improvements, and also runs automated tests.

So, a pull request is a system to ensure communication and quality.

CI/CD Pipeline Questions

10. What is a CI/CD pipeline?

A CI/CD pipeline is essentially an automated process that takes a developer's code from commit to production.

It involves some automated steps, such as:

- Compiling the code

- Running tests

- Checking security vulnerabilities

- Deploying the application

The goal is to make every release predictable and secure. Whenever you make a code change, this pipeline runs automatically and provides immediate feedback on the code's quality and readiness for release.

11. What is the difference between Continuous Integration, Continuous Delivery, and Continuous Deployment?

They represent a journey toward full automation:

- Continuous Integration (CI): This is the baseline. Developers merge their code into a shared repository frequently, and each merge triggers an automated build and test run. The goal is to catch integration bugs early.

- Continuous Delivery (CD): This builds on CI. After passing all automated tests, the code is automatically deployed to a staging or pre-production environment. A release to production is still a manual button-push, but it's a business decision, not a technical one.

- Continuous Deployment: This is the final step. Every change that passes the entire pipeline is automatically released to production without any human intervention. This requires a high degree of confidence in your automated testing and monitoring.

12. How would you set up a CI/CD pipeline from scratch?

For a basic pipeline, I would perform these steps:

- Source: First, connect a CI server (like Jenkins or GitLab CI) to the Git repository. The pipeline should automatically trigger as soon as a new commit is made to the main branch.

- Build: The next step is to compile the code or build a Docker image if using Docker.

- Test: Then run automated tests like unit tests and integration tests. If any test fails, the pipeline should fail.

- Package: If all tests pass, push the Docker image to the container registry (Docker Hub or AWS ECR).

- Deploy: In the final stage, pull the new image from the registry and deploy it to the staging environment, where it can be manually promoted to production after review.
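The stage ordering above can be sketched in a few lines of Python. This is a minimal illustration, not how Jenkins or GitLab CI is actually configured: the `run_pipeline` function and the stage callables are hypothetical stand-ins for real build, test, and deploy steps.

```python
def run_pipeline(stages):
    """Run stages in order and stop at the first failure."""
    completed = []
    for name, step in stages:
        if not step():
            return {"status": "failed", "failed_stage": name, "completed": completed}
        completed.append(name)
    return {"status": "passed", "failed_stage": None, "completed": completed}

# Hypothetical stages; each callable returns True on success.
stages = [
    ("build", lambda: True),
    ("test", lambda: False),        # simulate a failing test suite
    ("package", lambda: True),
    ("deploy-staging", lambda: True),
]

result = run_pipeline(stages)
print(result["status"], result["failed_stage"], result["completed"])
# failed test ['build']
```

The key property is fail-fast behavior: nothing is packaged or deployed once a test fails.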

13. What is blue-green deployment?

Blue-green deployment is a strategy that minimizes downtime (the time a site or app is down).

It consists of two identical production environments:

- Blue → currently live

- Green → currently idle

You deploy the new version in the green environment. After thorough testing and ensuring everything is working properly, you switch the router so that traffic goes to the green environment.

The blue environment now becomes idle, ready for the next release or for immediate rollback if something goes wrong.

One drawback is that keeping database changes in sync across both environments can be challenging.
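A toy model may help show why rollback is so cheap with this strategy. The `BlueGreenRouter` class below is a hypothetical sketch; in practice the switch happens in a load balancer, DNS record, or service mesh rather than in application code.

```python
class BlueGreenRouter:
    """Toy router that sends all traffic to one of two environments."""

    def __init__(self):
        self.versions = {"blue": "v1", "green": None}
        self.live = "blue"

    @property
    def idle(self):
        return "green" if self.live == "blue" else "blue"

    def deploy_to_idle(self, version):
        self.versions[self.idle] = version

    def switch(self):
        """Point traffic at the idle environment; the old one becomes idle."""
        if self.versions[self.idle] is None:
            raise RuntimeError("nothing deployed to the idle environment")
        self.live = self.idle

    def serve(self):
        return self.versions[self.live]

router = BlueGreenRouter()
router.deploy_to_idle("v2")   # green gets v2 while blue keeps serving v1
print(router.serve())         # v1
router.switch()               # cut over to green
print(router.serve())         # v2
router.switch()               # rollback is the same switch in reverse
print(router.serve())         # v1
```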

14. What is a canary release?

A canary release is a cautious deployment strategy. The new version is first enabled for only a small share of users, such as 1% of traffic. This small group is called the "canary."

This group is then closely monitored. If there are no errors or performance issues, traffic is gradually increased until 100% of users are using the new version.

The advantage of this is that if the new release goes wrong, only a small audience is affected, not everyone.
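One common way to pick the canary group is to hash a stable user identifier into a bucket, so the same user always gets the same version. The sketch below assumes that approach; the `bucket` and `route` helpers and the user IDs are hypothetical.

```python
import hashlib

def bucket(user_id):
    """Map a user ID to a stable bucket from 0 to 99."""
    digest = hashlib.sha256(user_id.encode()).hexdigest()
    return int(digest, 16) % 100

def route(user_id, canary_percent):
    """Send users whose bucket falls below canary_percent to the canary."""
    return "canary" if bucket(user_id) < canary_percent else "stable"

users = [f"user-{i}" for i in range(10_000)]
for percent in (1, 10, 50, 100):
    share = sum(route(u, percent) == "canary" for u in users) / len(users)
    print(f"{percent:>3}% target -> {share:.1%} of users on canary")
```

Because the bucket is fixed per user, raising the percentage only adds users to the canary group; nobody flips back and forth between versions during the rollout.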

Infrastructure as Code (IaC) Questions

15. What is Infrastructure as Code (IaC)?

Infrastructure as Code (IaC) means managing your infrastructure, such as servers, networks, and load balancers, through configuration files instead of manually setting them up.

You write code that describes how your environment should look, and tools like Terraform or Ansible read it and set the environment up.

The advantages of this are:

- You can keep your environment under version control.

- You can test changes before applying them.

- You can easily replicate the same environment for testing or disaster recovery.
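The "test changes before applying them" point is easiest to see as a diff between the current and the desired state. The following sketch imitates that idea in plain Python; it is not Terraform's actual algorithm, and the `plan` function and resource names are hypothetical.

```python
def plan(current, desired):
    """Compare current and desired resources and list the changes needed."""
    actions = []
    for name, spec in desired.items():
        if name not in current:
            actions.append(("create", name))
        elif current[name] != spec:
            actions.append(("update", name))
    for name in current:
        if name not in desired:
            actions.append(("delete", name))
    return actions

# Hypothetical resource names and attributes.
current = {
    "web-server": {"size": "small"},
    "old-cache": {"size": "small"},
}
desired = {
    "web-server": {"size": "medium"},
    "load-balancer": {"port": 443},
}

for action, name in plan(current, desired):
    print(action, name)
# update web-server
# create load-balancer
# delete old-cache
```

Since the desired state is just data in a file, it can be reviewed in a pull request and the resulting plan inspected before anything is changed.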

16. What is the difference between Terraform and Ansible?

Both are IaC (Infrastructure as Code) tools, but they perform different functions:

- Terraform: It is used to create, change, or delete infrastructure. It decides "what will be built," such as servers, networks, storage, etc. in the cloud.

- Ansible: It is used to configure existing servers and install software. It manages "how it will work."

Normally, people first create servers with Terraform, then configure them with Ansible playbooks.

17. Explain the concept of "immutable infrastructure."

“Immutable infrastructure” means never making any direct changes to a server after it has been deployed.

If you want to update an application or apply a patch, don't log in to the existing server and make the changes.

Instead:

- Create a new server image that already includes all the changes.

- Deploy new servers from that image.

- Remove old servers.

This prevents “configuration drift” and makes the environment predictable.

18. What is configuration management?

Configuration management means maintaining your servers and systems in a consistent and desired state.

DevOps uses tools like Ansible, Puppet, or Chef to automate this.

You simply write in code:

- Which packages should be installed

- Which services should be running

And the tool automatically ensures that this state is maintained across all servers.

The advantage of this is that you can easily manage hundreds or thousands of servers, and changes are applied systematically and reliably.
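The core idea behind these tools is idempotent convergence: applying the same desired state twice changes nothing the second time. Here is a minimal sketch of that behavior; the `converge` function and the server dictionary are hypothetical and do not reflect how Ansible, Puppet, or Chef are implemented.

```python
def converge(server, desired):
    """Bring a server to the desired state; return the changes that were made."""
    changes = []
    for pkg in sorted(desired["packages"] - server["packages"]):
        server["packages"].add(pkg)
        changes.append(f"install {pkg}")
    for svc in sorted(desired["services"] - server["running"]):
        server["running"].add(svc)
        changes.append(f"start {svc}")
    return changes

desired = {"packages": {"nginx", "curl"}, "services": {"nginx"}}
server = {"packages": {"curl"}, "running": set()}

print(converge(server, desired))  # ['install nginx', 'start nginx']
print(converge(server, desired))  # [] because the server already matches
```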

Containerization and Orchestration Questions

19. What is a container?

A container is a lightweight package that bundles an application's code and all its dependencies (libraries, runtime, etc.).

It isolates the app from its environment. So, whether you run it on a laptop, a staging server, or in production, the app will always behave the same.

This solves the classic "It works on my machine!" problem.

20. How is a container different from a virtual machine?

Containers and virtual machines (VMs) both provide isolation, but at different levels.

- A virtual machine (VM) virtualizes hardware. Each VM requires its own full operating system. It is heavy, takes time to start, and consumes more resources.

- A container virtualizes the operating system. It shares the kernel of the host OS, and does not require a separate full OS.

The advantage of containers is that they are lightweight, start quickly, and use fewer resources. You can run many containers on a single host, while only a few VMs fit on the same resources.

21. What is Docker?

Docker is the platform that popularized containers. It provides simple tools to build, share, and run applications within containers.

- A Dockerfile is a simple way to define an application image.

- The Docker Engine runs these images as containers.

It standardized the way we package and ship software.

22. What is Kubernetes?

Kubernetes is a tool that manages containers. We call it a container orchestrator.

When you run multiple containers on different machines, you need a tool to manage them, and that's what Kubernetes does.

It automatically handles:

- Deployment - setting up new containers.

- Scaling - increasing or decreasing the number of containers as traffic changes.

- Networking - managing communication between containers.

For example:

- If a container crashes, Kubernetes will automatically restart it.

- If user traffic suddenly increases, it will start more containers.
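Both examples follow from the same pattern: a control loop that compares the desired state with the actual state and acts on the difference. The sketch below is a simplified, hypothetical model of one pass of such a loop; the `reconcile` function and the pod dictionaries are illustrative and are not the Kubernetes API.

```python
def reconcile(desired, pods):
    """One pass of a control loop: compare desired vs. actual and act."""
    actions = []
    alive = []
    for pod in pods:
        if pod["status"] == "crashed":
            actions.append(f"restart {pod['name']}")
            pod = {**pod, "status": "running"}
        alive.append(pod)
    next_id = len(alive)
    while len(alive) < desired:
        name = f"app-{next_id}"
        alive.append({"name": name, "status": "running"})
        actions.append(f"start {name}")
        next_id += 1
    while len(alive) > desired:
        actions.append(f"stop {alive.pop()['name']}")
    return alive, actions

pods = [{"name": "app-0", "status": "running"},
        {"name": "app-1", "status": "crashed"}]

pods, actions = reconcile(desired=2, pods=pods)
print(actions)  # ['restart app-1']

pods, actions = reconcile(desired=4, pods=pods)  # traffic spike: scale up
print(actions)  # ['start app-2', 'start app-3']

pods, actions = reconcile(desired=2, pods=pods)  # traffic drops: scale down
print(actions)  # ['stop app-3', 'stop app-2']
```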

23. What is a Kubernetes Pod?

A Pod is the smallest unit you can deploy in Kubernetes.

Basically, it's a wrapper around one or more containers. Containers within a Pod share the same network space and can also share storage volumes, so they easily communicate with each other.

You can run multiple containers within a Pod, but the usual pattern is that a Pod contains one main application container.

24. Explain the difference between a Deployment and a StatefulSet in Kubernetes.

Deployment and StatefulSet both manage pods in Kubernetes, but their use-cases are different.

- Deployment: This is for stateless apps, such as a web server front-end. Its pods are all identical; if one dies, Kubernetes creates a new pod.

- StatefulSet: This is for stateful apps, such as a database. Each pod has a unique identity, and its network ID and storage remain stable even if the pod is rescheduled to another node. Pods are also created and scaled in a predictable order.
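The difference in identity shows up even in pod names. The sketch below mimics the naming patterns only: StatefulSet pods get a stable ordinal suffix, while Deployment pods get generated suffixes. The helper functions are hypothetical, and real Deployment pod names also include a ReplicaSet hash, which is omitted here.

```python
import random
import string

def deployment_pod_names(name, replicas, rng):
    """Interchangeable pods: names carry a random suffix with no meaning."""
    alphabet = string.ascii_lowercase + string.digits
    return [f"{name}-{''.join(rng.choices(alphabet, k=5))}" for _ in range(replicas)]

def statefulset_pod_names(name, replicas):
    """Stable identities: pods are numbered and created in order, 0 first."""
    return [f"{name}-{i}" for i in range(replicas)]

rng = random.Random(0)
print(deployment_pod_names("web", 3, rng))  # random suffixes; a replacement pod gets a new name
print(statefulset_pod_names("db", 3))       # ['db-0', 'db-1', 'db-2']
```

A replacement for `db-1` is again called `db-1` and reattaches to the same storage, which is what lets a database replica keep its data and its place in the cluster.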

Monitoring and Logging Questions

25. Why is monitoring important in DevOps?

In DevOps, monitoring means continuously observing the system and gathering feedback. You can't improve what you don't measure.

Monitoring lets you see in real time how the system is performing and catch problems before they reach users.

More than just alerting you to errors or failures, good monitoring also provides data that helps:

- Understand system performance

- Identify bottlenecks

- Decide where to focus development efforts

26. What are the three pillars of observability?

Observability has three pillars: logs, metrics, and traces. These three help understand different aspects of a system:

- Logs: These are detailed records of specific events, time-stamped. If a problem or crash occurs, logs reveal exactly what happened.

- Metrics: These are numbers that are measured over time, such as CPU usage, request latency, or memory usage. They are useful for dashboards, alerts, and trend analysis.

- Traces: These follow the entire journey of a single request, such as how the request moved through different services. These are very important for debugging performance or slow operations in microservices.
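To see how the three signals differ for a single request, consider this small standard-library sketch. It is only an illustration: the in-memory `logs`, `metrics`, and `spans` stores, the service names, and the `traced` helper are hypothetical, and a real system would send this data to dedicated logging, metrics, and tracing backends.

```python
import time
import uuid
from collections import Counter

logs = []            # timestamped event records
metrics = Counter()  # numbers aggregated over time
spans = []           # per-request timing, tied together by a trace ID

def traced(trace_id, name, func):
    """Run func and record how long it took as a span of the given trace."""
    start = time.perf_counter()
    try:
        return func()
    finally:
        spans.append({"trace_id": trace_id, "name": name,
                      "seconds": time.perf_counter() - start})

def handle_request(path):
    trace_id = uuid.uuid4().hex
    metrics["requests_total"] += 1
    logs.append({"ts": time.time(), "trace_id": trace_id, "msg": f"GET {path}"})
    user = traced(trace_id, "auth-service", lambda: "alice")
    return traced(trace_id, "orders-service", lambda: f"orders for {user}")

print(handle_request("/orders"))   # orders for alice
print(metrics["requests_total"])   # 1
print([s["name"] for s in spans])  # ['auth-service', 'orders-service']
```

The shared trace ID is what lets you jump from a slow span to the log lines of the same request.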

27. What is the difference between monitoring and observability?

Monitoring means you watch for things you already know might go wrong, like high CPU usage or a server going down. You set up dashboards and alerts that let you know when one of those things happens.

Observability is a property of a system that lets you understand unknown problems (ones you couldn't have predicted in advance). Monitoring tells you something is wrong, while observability helps you find out why it's wrong, through logs, metrics, and traces.

28. Name some popular monitoring tools.

- Prometheus – primarily for collecting metrics, such as server or app performance.

- Grafana – for taking data from Prometheus and creating cool dashboards that are easy to understand.

- ELK Stack – a combo of Elasticsearch, Logstash, and Kibana; primarily used for collecting and analyzing logs.

- Datadog and New Relic – all-in-one solutions that offer metrics, logs, and alerts in a single product, but they are paid.

Prometheus + Grafana is the most common combination for metrics, and ELK is the top choice for logs. Datadog and New Relic are chosen for convenience.

Cloud and Security Questions

29. How do you integrate security into a CI/CD pipeline (DevSecOps)?

DevSecOps is about shifting security left, making it part of the development process instead of an afterthought. In the CI/CD pipeline, this means automating security checks at different stages:

- Static Application Security Testing (SAST): Add a step to check common vulnerabilities in the source code as soon as the code is committed.

- Software Composition Analysis (SCA): Scan third-party libraries and dependencies for known vulnerabilities.

- Dynamic Application Security Testing (DAST): Find security flaws by running automated tests against the running application in the staging environment.

The goal is to provide developers with fast feedback on security issues, just like feedback on build or test failures.
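As an example of what such a stage does, here is a minimal sketch of an SCA-style check that compares pinned dependencies against a list of known-bad versions. Everything in it is hypothetical: the package names, the advisory data, and the helper functions are made up for illustration, and real scanners query maintained vulnerability databases instead.

```python
def parse_requirements(text):
    """Parse 'name==version' lines into a dict, skipping blanks and comments."""
    deps = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "==" in line:
            name, version = line.split("==", 1)
            deps[name.strip().lower()] = version.strip()
    return deps

def find_vulnerable(deps, advisories):
    """Return the names of dependencies pinned to a known-bad version."""
    return sorted(name for name, version in deps.items()
                  if version in advisories.get(name, set()))

# Hypothetical packages and advisory data, for illustration only.
requirements = """
# pinned dependencies
examplelib==1.2.0
otherlib==3.4.1
"""
advisories = {"examplelib": {"1.1.0", "1.2.0"}}

vulnerable = find_vulnerable(parse_requirements(requirements), advisories)
print(vulnerable)                   # ['examplelib']
exit_code = 1 if vulnerable else 0  # a non-zero exit code would fail the pipeline stage
```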

30. What is your experience with cloud platforms like AWS, Azure, or GCP?

I've worked extensively with AWS. In my last role, I used Terraform to manage infrastructure, including EC2 instances in Auto Scaling Groups, storing application assets in S3, and running a PostgreSQL database in RDS.

The CI/CD process was also built with AWS tools. Docker containers were deployed to an ECS cluster using CodePipeline and CodeBuild.

I also have good experience with networking, and I'm comfortable designing and working with VPCs, subnets, and security groups.

31. What is a VPC (Virtual Private Cloud)?

A VPC, or Virtual Private Cloud, is a private, separate network within a public cloud.

You can create your own virtual network where you can launch your own resources, such as servers and databases.

You have complete control over it:

- You can choose your IP address range

- You can create public and private subnets

- You can configure route tables and network gateways
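Choosing an IP range and carving it into subnets is plain CIDR arithmetic, which Python's standard `ipaddress` module can demonstrate. The `10.0.0.0/16` range and the public/private split below are assumptions chosen for illustration, not a recommendation for any specific cloud provider.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")
subnets = list(vpc.subnets(new_prefix=24))  # 256 possible /24 subnets

public_subnet = subnets[0]   # e.g. for load balancers
private_subnet = subnets[1]  # e.g. for databases

print(vpc.num_addresses)              # 65536
print(public_subnet, private_subnet)  # 10.0.0.0/24 10.0.1.0/24
print(ipaddress.ip_address("10.0.1.25") in private_subnet)  # True
print(public_subnet.overlaps(private_subnet))               # False
```

Whether a subnet is "public" or "private" is not a property of the address range itself; it depends on whether its route table sends traffic to an internet gateway.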