Artificial intelligence keeps evolving at an incredible pace, and DeepSeek-R1 is the latest model that’s making headlines. But how does it stack up against OpenAI’s offerings?

In this article, we’ll explore what DeepSeek-R1 brings to the table—its features, performance in key benchmarks, and real-world use cases—so you can decide if it’s right for your needs.

What Is DeepSeek-R1?

DeepSeek-R1 is a next-generation “reasoning-first” AI model that aims to advance beyond traditional language models by focusing on how it arrives at its conclusions.

Built using large-scale reinforcement learning (RL) techniques, DeepSeek-R1 and its predecessor, DeepSeek-R1-Zero, emphasize transparency, mathematical proficiency, and logical coherence.

Key points to know:

- Open-Source Release: DeepSeek offers the main model (DeepSeek-R1) plus six distilled variants (ranging from 1.5B to 70B parameters) under the MIT license. This open approach has garnered significant interest among developers and researchers.

- Reinforcement Learning Focus: DeepSeek-R1’s reliance on RL (rather than purely supervised training) allows it to “discover” reasoning patterns more organically.

- Hybrid Training: After initial RL exploration, supervised fine-tuning data was added to address readability and language-mixing issues, improving overall clarity.

From R1-Zero to R1: How DeepSeek Evolved

DeepSeek-R1-Zero was the initial version, trained via large-scale reinforcement learning (RL) without supervised fine-tuning. This pure-RL approach helped the model discover powerful reasoning patterns like self-verification and reflection. However, it also introduced problems such as:

- Poor Readability: Outputs were often hard to parse.

- Language Mixing: Responses could blend multiple languages, reducing clarity.

- Endless Loops: Without supervised fine-tuning (SFT) safeguards, the model occasionally fell into repetitive answers.

DeepSeek-R1 addresses these issues by adding a supervised fine-tuning step on curated examples before the main RL stage. The result: more coherent outputs and robust reasoning, comparable to OpenAI’s o1 on math, coding, and logic benchmarks.

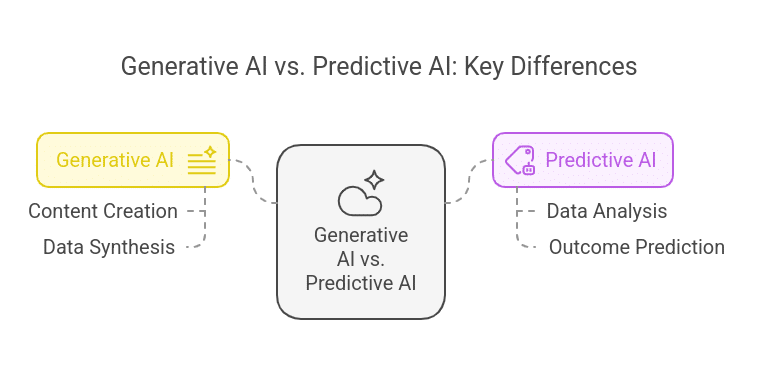

Core Features & Architecture

- Mixture of Experts (MoE) Architecture: DeepSeek-R1 uses a large MoE setup—671B total parameters with 37B activated during inference. This design ensures only the relevant parts of the model are used for a given query, lowering costs and speeding up processing.

- Built-In Explainability: Unlike many “black box” AIs, DeepSeek-R1 includes step-by-step reasoning in its output. Users can trace how an answer was formed—crucial for use cases like scientific research, healthcare, or financial audits.

- Multi-Agent Learning: DeepSeek-R1 supports multi-agent interactions, allowing it to handle simulations, collaborative problem-solving, and tasks requiring multiple decision-making components.

- Cost-Effectiveness: DeepSeek cites a relatively low development cost (around $6 million) and emphasizes lower operational expenses, thanks to the MoE approach and efficient RL training.

- Ease of Integration: For developers, DeepSeek-R1 is compatible with popular frameworks like TensorFlow and PyTorch, alongside ready-to-use modules for rapid deployment.
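To make the sparse-activation idea from the first bullet concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy illustration, not DeepSeek's implementation: the four gate scores, the value of `k`, and the function names are all hypothetical. Only the 671B and 37B figures come from the article.

```python
import math

TOTAL_PARAMS_B = 671   # total parameters in billions (from the article)
ACTIVE_PARAMS_B = 37   # parameters activated per token in billions (from the article)


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of the model's parameters used for a single token."""
    return active_b / total_b


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalise their weights.

    gate_scores holds one router logit per expert (hypothetical values).
    Returns (expert_index, weight) pairs whose weights sum to 1.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


if __name__ == "__main__":
    share = active_fraction(TOTAL_PARAMS_B, ACTIVE_PARAMS_B)
    print(f"active share per token: {share:.1%}")
    print(route_top_k([0.1, 2.0, -1.0, 1.5], k=2))
```

With the article's figures, roughly one parameter in eighteen is active for any given token, which is where the inference savings come from.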

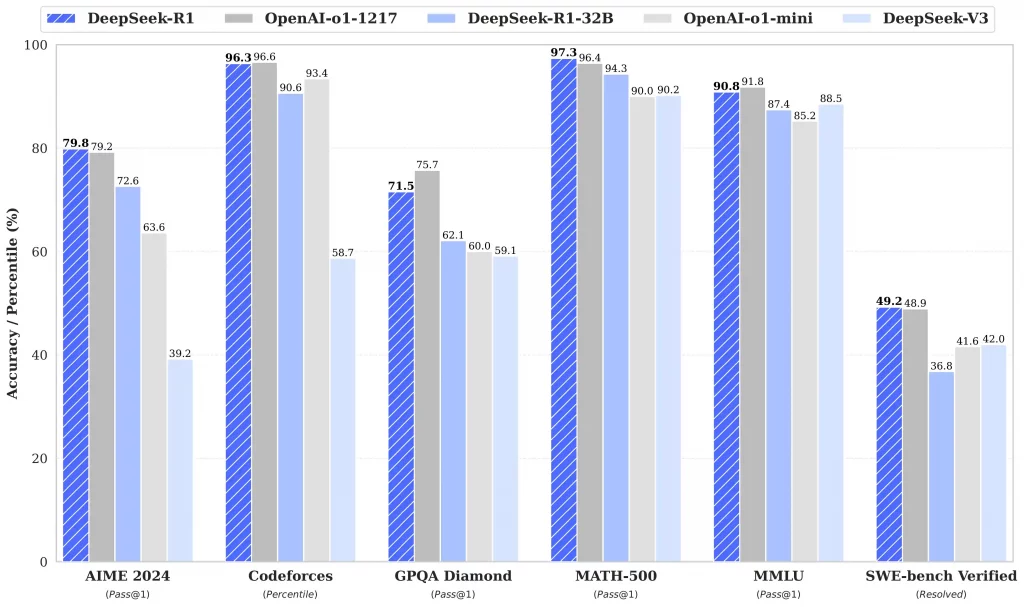

How Does DeepSeek-R1 Compare to OpenAI’s Models?

DeepSeek-R1 competes directly with OpenAI’s o1 series of reasoning models across math, coding, and reasoning tasks. Here’s a snapshot of how the two fare on key benchmarks, based on reported data:

| Benchmark | DeepSeek-R1 | OpenAI o1-1217 | Notes |
|---|---|---|---|
| AIME 2024 | 79.8% | 79.2% | Advanced math competition |
| MATH-500 | 97.3% | 96.4% | Competition-level math problems |
| Codeforces | 96.3% | 96.6% | Coding competition percentile |
| GPQA Diamond | 71.5% | 75.7% | Graduate-level science Q&A |

- Where DeepSeek Shines: Mathematical reasoning (AIME 2024 and MATH-500), with competitive coding results close behind, thanks to RL-driven chain-of-thought (CoT) reasoning.

- Where OpenAI Has an Edge: Knowledge-heavy Q&A (GPQA Diamond), plus a slightly higher Codeforces percentile.
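To put those gaps in perspective, the short sketch below computes the point difference on each benchmark. The scores are copied from the table above; the `margins` helper is just an illustrative name.

```python
# Scores copied from the table above, as (DeepSeek-R1, OpenAI o1-1217).
# Values are percentages; the Codeforces row is a percentile.
SCORES = {
    "AIME 2024": (79.8, 79.2),
    "MATH-500": (97.3, 96.4),
    "Codeforces": (96.3, 96.6),
    "GPQA Diamond": (71.5, 75.7),
}


def margins(scores):
    """Return {benchmark: DeepSeek-R1 minus o1-1217}, rounded to one decimal."""
    return {name: round(r1 - o1, 1) for name, (r1, o1) in scores.items()}


if __name__ == "__main__":
    for name, diff in margins(SCORES).items():
        leader = "DeepSeek-R1" if diff > 0 else "OpenAI o1-1217"
        print(f"{name:13s} {diff:+.1f} points ({leader} ahead)")
```

Three of the four gaps are under one point, so GPQA Diamond is the only benchmark here where the two models clearly separate.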

Distilled Models: Qwen and Llama

DeepSeek goes beyond just the main R1 model. They distill the reasoning abilities into smaller, dense models based on both Qwen (1.5B to 32B) and Llama (8B & 70B). For instance:

- DeepSeek-R1-Distill-Qwen-7B: Achieves over 92% on MATH-500, outperforming many similarly sized models.

- DeepSeek-R1-Distill-Llama-70B: Hits 94.5% on MATH-500 and 57.5% on LiveCodeBench, close to some of OpenAI’s models.

This modular approach means smaller organizations can tap high-level reasoning without needing massive GPU clusters.
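As a rough guide to what "without massive GPU clusters" means, the sketch below estimates the memory needed just to hold each model's weights. It is back-of-the-envelope arithmetic, not a deployment guide: it ignores the KV cache, activations, and runtime overhead, and the 16-bit and 4-bit precisions are illustrative assumptions rather than formats the article specifies.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_total = params_billion * 1e9 * bits_per_param / 8
    return bytes_total / 1e9


# Distilled sizes named in the article, in billions of parameters.
DISTILLED_SIZES_B = [1.5, 7, 8, 32, 70]

if __name__ == "__main__":
    for size in DISTILLED_SIZES_B:
        fp16 = weight_memory_gb(size, 16)   # assumed 16-bit weights
        int4 = weight_memory_gb(size, 4)    # assumed 4-bit quantised weights
        print(f"{size:>4}B  16-bit: {fp16:6.1f} GB   4-bit: {int4:5.1f} GB")
```

By this estimate a 7B model needs about 14 GB at 16-bit precision, within reach of a single high-end consumer GPU, while the 70B variant needs about 140 GB before any quantisation.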

Practical Use Cases

- Advanced Math & Research

  - Universities and R&D labs can harness the step-by-step problem-solving abilities for complex proofs or engineering tasks.

- Coding & Debugging

  - Automate code translation (e.g., from C# in Unity to C++ in Unreal).

  - Assist with debugging by pinpointing logical errors and offering optimized solutions.

- Explainable AI in Regulated Industries

  - Finance, healthcare, and government require transparency. DeepSeek-R1’s chain-of-thought clarifies how it arrives at each conclusion.

- Multi-Agent Systems

  - Coordinate robotics, autonomous vehicles, or simulation tasks where multiple AI agents must interact seamlessly.

- Scalable Deployments & Edge Scenarios

  - Distilled models fit resource-limited environments.

  - Large MoE versions can handle enterprise-level volumes and long context queries.
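For the explainability use case, an audit trail usually needs the reasoning stored separately from the final answer. The sketch below shows one way to split the two, assuming the raw model text wraps its reasoning in `<think>…</think>` tags. That tag format and the `split_reasoning` helper are assumptions for illustration; check the output format of the model or API you actually use.

```python
import re

# Assumed output format: reasoning enclosed in <think>...</think>.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str):
    """Return (reasoning, answer) from raw model text.

    If no <think> block is found, reasoning is an empty string and the
    whole text is treated as the answer.
    """
    match = THINK_RE.search(raw)
    if match is None:
        return "", raw.strip()
    reasoning = match.group(1).strip()
    # Everything outside the first <think> block counts as the answer.
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return reasoning, answer


if __name__ == "__main__":
    sample = "<think>17 * 24 = 17 * 20 + 17 * 4 = 340 + 68</think>\nThe answer is 408."
    reasoning, answer = split_reasoning(sample)
    print("REASONING:", reasoning)
    print("ANSWER:", answer)
```

Keeping the two parts separate lets a reviewer log the reasoning for audit while showing end users only the answer.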

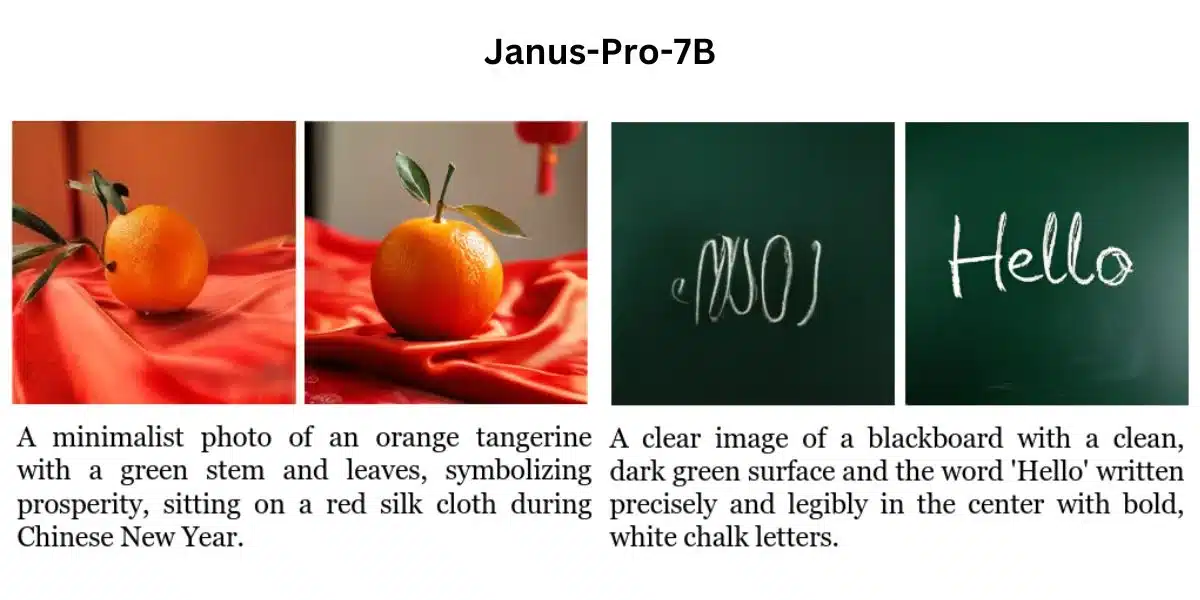

Access & Pricing

- Website & Chat: chat.deepseek.com (includes “DeepThink” mode for reasoning).

- API: platform.deepseek.com, OpenAI-compatible format.

- Pricing Model:

  - Free Chat: Daily cap of ~50 messages.

  - Paid API: Billed per 1M tokens, with varying rates for “deepseek-chat” and “deepseek-reasoner.”

  - Context Caching: Reduces token usage and total cost for repeated queries.
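Because the API follows the OpenAI-compatible format, a request is an ordinary chat-completions call. The sketch below builds one using only the Python standard library and stops short of sending it. The endpoint URL is an assumption, so confirm it in the platform documentation; `build_request` is a hypothetical helper name, and the model names come from the pricing list above.

```python
import json
import urllib.request

# Assumed endpoint; verify against the documentation on platform.deepseek.com.
API_URL = "https://api.deepseek.com/chat/completions"


def build_request(api_key: str, prompt: str,
                  model: str = "deepseek-reasoner") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("YOUR_API_KEY", "What is 17 * 24?")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To send it: urllib.request.urlopen(req), then json.load() the response.
```

Switching `model` to "deepseek-chat" targets the general chat model instead. Since billing is per token, it is worth logging prompt and completion sizes from the start.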

Future Prospects

- Ongoing Updates: DeepSeek plans to improve multi-agent coordination, reduce latency, and offer more industry-specific modules.

- Strategic Partnerships: Collaboration with cloud giants (AWS, Microsoft, Google Cloud) is in discussion to broaden deployment avenues.

- Growing Global Impact: Having briefly topped the U.S. App Store for free AI apps, DeepSeek is becoming a serious contender and may reshape how open-source AI is adopted worldwide.

Final Thoughts

DeepSeek-R1 represents a significant stride in open-source AI reasoning, with performance rivalling (and sometimes surpassing) OpenAI’s models on math and code tasks. Its reinforcement-learning-first approach, combined with supervised refinements, yields rich, traceable chain-of-thought outputs that appeal to regulated and research-focused fields. Meanwhile, distilled versions put advanced reasoning in reach of smaller teams.

For those interested in mastering these technologies and understanding their full potential, Great Learning’s AI and ML course offers a robust curriculum that blends academic knowledge with practical experience.
